10 Database Management Tips and Tricks From a Data Architect

I am old. Well, maybe not in earthly years, but definitely in technology years. I remember when we would run applications on mainframe hardware, what Unix really is (or was), countless hours in extremely refrigerated data centers as part of my database management tasks, helping customers edit configuration files over the phone with “vi,” and how difficult it was to help customers run production-level software applications.

In some ways, life for technologists has changed dramatically in my professional journey. As an aging technologist (and currently a data architect), I would have never envisioned the compute power, storage capacity, and network throughput available at our fingertips today.

Yet, in other ways, some things always stay the same. To run production-level applications twenty-four-seven, database developers need to consider (and address) a series of requirements that are still the same today as they were 30 years ago.

So, let me recap 10 of the lessons learned over the last three decades that allowed me to hone my database management skills.

What is database management?

Database management is the process involving the proper organization, storage, retrieval, and protection of data in a database. It is an essential aspect of information technology that ensures data integrity, accuracy, and security.

In technical terms, database management involves using software known as a Database Management System (DBMS). This system provides an interface for users to interact with databases, enabling them to create, read, update, and delete data efficiently.

The primary benefits of database management include standardized data access, improved data security, efficient data integration, and enhanced data analysis capabilities. Effective database management can lead to better decision-making, operational efficiency, and overall business growth.

What are Database Management Systems?

Database Management Systems (DBMS) serve as an interface between users and databases, playing a pivotal role in efficiently storing, managing, and retrieving data. They provide a structured environment to handle vast amounts of data, ensuring data integrity, security, and accessibility.

Types of DBMS

DBMSs are categorized into several types, each with its unique characteristics and use cases:

- Relational Database Management System (RDBMS): RDBMS is the most common type of DBMS, which organizes data into tables. Each table has rows (records) and columns (fields). The relational model's strength lies in its simplicity and the ability to manage large amounts of data efficiently. Examples include PostgreSQL, MySQL, and Oracle Database.

- Object-Oriented Database Management System (OODBMS): OODBMS stores data in the form of objects, similar to object-oriented programming. It allows for more complex data models and can provide significant performance improvements for certain workloads. An example of an OODBMS is MongoDB.

- Hierarchical Database Management System (HDBMS): In an HDBMS, data is organized in a tree-like structure, with a single root to which all other data is linked. This structure is highly efficient for certain types of queries, such as those with a known 'path'. IBM's Information Management System (IMS) is an example of an HDBMS.

- Network Database Management System (NDBMS): NDBMS allows each record to have multiple parent and child records, forming a web-like structure. This type is less common today but was used extensively in the past. Integrated Data Store (IDS) is an example of a network DBMS.

- Time-series Database Management System (TSDBMS): TSDBMS are optimized for handling time-series data, i.e., data that is indexed by time. They are often used in analytics, IoT applications, and monitoring systems. Timescale, built on top of PostgreSQL (a relational database), is an example of a TSDBMS. It combines the reliability and flexibility of PostgreSQL with powerful time-series data management capabilities, providing scalability, robustness, and performance optimization features.

Often, developers in our community ask us for advice, wondering if they should invest in a managed database service or build their self-hosted deployment. Our most likely answer is, “It depends” (as with many things in the database world). But, when evaluating both options, try to consider the big picture of everything that goes behind hosting and managing a database in production.

Database Management Practices

Managed databases have made the process of running applications in production considerably simple for the developer. So much so, in fact, that sometimes we tend to overlook all the work behind it. And remember, this database management workload is something you should plan for if you’re considering hosting your database in production.

Let’s take a closer look at our own database platform, Timescale. Timescale is a managed cloud-native database platform that supercharges PostgreSQL for time series. The result? Fast queries at scale, cost-efficient scaling options to store higher data volumes for less money, and plenty of time-series functionality to save development time.

But Timescale is much more than just an optimized time-series database: it gives customers access to an entire database environment where they can sign up and have a database running in a matter of seconds.

Timescale also automatically takes care of many database operations (such as backups and failover or upgrades), and it greatly simplifies others, like creating copies of your database for testing, enabling high availability via replication, or creating read replicas for load reduction.

To create such a seamless experience, managed databases juggle many elements behind the scenes, including physical facilities, compute hardware, virtualization, an operating system, and the database itself.

I’ve spent plenty of time addressing those things myself throughout my career. Let’s take a journey through time and look at what it entails to provide and manage each of these building blocks effectively.

Hosting Databases in Production: Building Blocks

Facilities

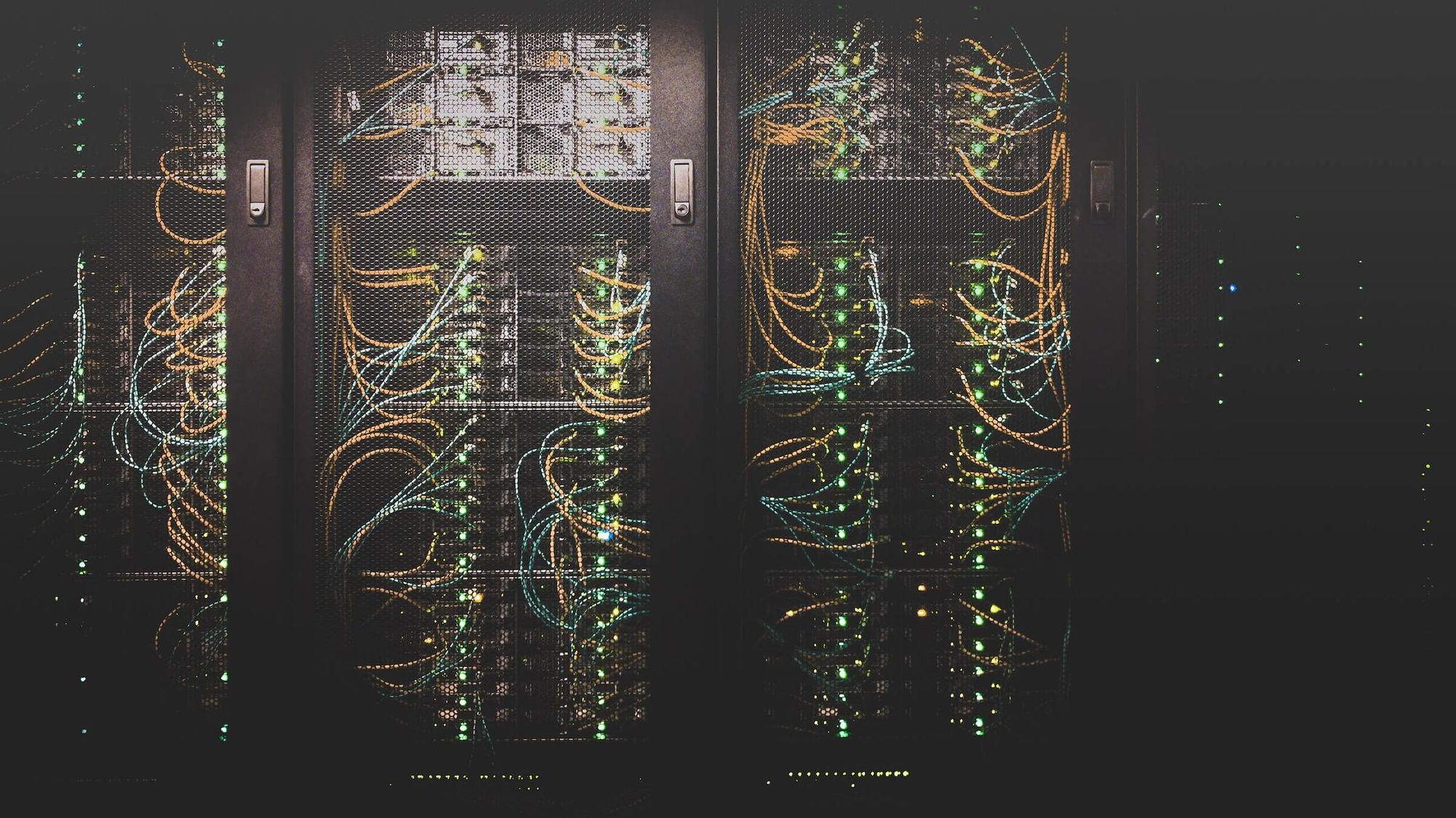

For most customers, the amount of space needed to run a database is rather insignificant. It is everything else around the actual footprint that is difficult to provide for twenty-four-seven applications. In one of my previous jobs, I assisted customers in installing appliance hardware. I spent countless hours in surprisingly cold data centers assisting customers with installation services. Spending time in an actual data center highlights elements needed for proper facilities: cooling, uninterrupted power, and physical security.

Hardware

Dealing with hardware is now a pastime for most young technologists in the software industry. Long gone are the days when we had first to figure out our facilities and then hardware. This always entailed numerous problems for companies.

For example, by its very nature, computer hardware is, at best, a depreciating asset. When we used to have to procure and install our own hardware, it was essentially obsolete on the day of installation. Rarely is there a physical item on this Earth with such a short shelf life! I remember the days spent purchasing, physically installing, running cables, and manually updating BIOSes… To replace it soon thereafter.

Today, we can use a cloud service (AWS, for example) to allocate compute resources dynamically. This has greatly simplified the task of deploying the hardware needed to run applications, making things smoother for developers and companies. In Timescale, we’re actually running Timescale in AWS ourselves, taking advantage of the flexibility of this cloud ecosystem to build our platform.

What we learned from our own experience is that having access to a cloud service is just the tip of the iceberg when dealing with hardware. One can hastily miscalculate the effort that goes into it. Sure, it is pretty straightforward to instantiate an Amazon EC2 instance. But do you have the time and flexibility to provide an easy front end to instantiate and change resources on the fly? Can you monitor those resources for consistent uptime and performance? And ultimately, can you automatically adjust when the resources are not performing as one expects twenty-four by seven? If reading this paragraph didn’t exhaust you, maybe you can.

Deploying your hardware in the cloud doesn’t necessarily simplify many critical database management tasks you will need to perform daily if you’re running a database in production. This aspect often confuses our users, who expect these tasks to be less time-consuming, especially if they’re used to working with managed database services.

So while a cloud service costs a bit, it is nothing compared to the costs if a customer were to build that functionality for themselves. I am happy to no longer deal with the entire facilities and hardware pieces of the puzzle.

Virtual Management

Speaking of operating a cloud infrastructure, let’s talk about virtualization.

Virtualization was introduced about midway through my career in technology. I remember quite well how it made certain tasks much easier. For example, I could virtualize the hardware in a multi-node software application, so I didn’t need a full hardware rack but rather a much smaller footprint. It saved physical space and time.

But it came at a cost since nothing is free. There is now a layer of complexity that, if not properly managed, can greatly impact all the layers above, including how the operating systems (O/S) and applications run. Too often, I’ve seen virtualization misused (e.g., purposely overprovisioned), negatively affecting everything else that runs above the virtualization layer.

However, virtualization has come a long way in a few short years to where it now allows us to run entire data centers as virtualization centers.

O/S

Operating systems are funny things. They are not seen yet are crucial to our lives. Even our phones now have an operating system. I’ve seen many different “server” operating systems in my life, from IBM OS/360 to VAX/VMS to just about every Unix variant (SunOS, Solaris, System V), and now Linux and its many distributions. Not many people think about operating systems except when things go bad.

But operating systems are another thing that needs care and feeding, so they need to be on someone’s mind. Benjamin Franklin’s quote that the only thing certain in life is death and taxes needs to include software and security patches. If you’re thinking about self-hosting your own database, this is another aspect to consider: make sure to remember that you’ll need to monitor and maintain your O/S, running upgrades every time it’s necessary to patch a security vulnerability or an important bug.

Database

We now come to the last component—the database itself. Timescale is an extension of Postgres. Just like an O/S, the database needs care and feeding. Timescale takes care of database maintenance like every other layer before, including bug and security patches.

But database management in production involves another crucial element: preparing for when things go wrong. That is the only certainty of the production process—unfortunately, things will go wrong sometimes, and you need a plan.

If you’re self-hosting your database, you must define a set of operative rules to determine what to do when your storage corrupts, or your compute fails. At a minimum, you must take backups regularly, testing them to ensure your data is safe.

But take into account that recovery from a backup can be a rather slow process. You may want to consider setting up an alternative method (e.g., replication) that avoids the potential downtime due to a database failure. Designing for less downtime in production is often a vital element to consider as, more often than not, having your database down will cause the whole business to collapse. Besides the money loss, this is a terrible experience for your end-users.

Self-Hosting or Managed?

Reminiscing on the past helps to understand all of the behind-the-scenes elements that are at play when managing a production database.

- By choosing to run on a managed platform like Timescale, you don’t have to worry about the cost and hassles of managing facilities and hardware provisioning. You can deploy databases in one click and scale them as you need. Once you don’t need them anymore, you can delete them and not think about the underlying hardware that powered them ever again.

- Timescale makes the most of virtualization technology to provide properly allocated resources to end-users. Timescale customers don’t need to worry about instances since Timescale does not overprovision compute resources. In addition, the service carefully monitors every virtual machine to ensure each customer has the requested resources.

- Timescale takes care of maintaining and monitoring the O/S. Timescale is constantly monitoring to ensure the O/S is running correctly, not to mention the never-ending bug and security patches. As an administrator, I’d be happy never to maintain a server O/S again, which is exactly what Timescale provides.

- Talking about things I’d be happy never to do again: worrying about backups. Timescale automatically keeps up-to-date backups by performing full backups weekly and partial backups daily. We stressed about backups because we weren’t good at consistently performing them, let alone testing them to ensure they were ready if they were ever to be used.

- Besides backups, Timescale keeps Postgres write-ahead log (WAL) files of any changes made to the database. This ensures the recovery of a database at (and to) any point in time without experiencing data loss.

- Finally, another huge advantage of managed databases (like Timescale) is that you can easily enable database replication for high availability. This means that when things go south and the production database goes down, another instance automatically spins up in seconds instead of leaving your users down for what could turn into hours. Nobody wants to be called to solve a complicated mess in the middle of the night.

But that’s not even it. The dynamic cloud-native architecture of Timescale allows us to provide our customers with many more services that simplify your daily tasks, so you can focus on your applications instead of operating your database.

For example, Timescale offers a single-button “fork” mechanism. This single-button action duplicates an entire environment at the push of a button (hardware resources, O/S, database, application code, and data).

Not so long ago, we had to duplicate the entire facilities-to-application stack manually. Even if we had the components readily available, it still took hours, if not days. Duplicating an entire environment with the click of a button is precisely what application engineers need to focus on critical tasks. They should spend their time writing and testing applications, not on the platform- or system-level tasks involved in creating and testing their applications.

And since it is so simple to fork the database, you can also delete that instance—so you will only pay for the resources needed for that task. In other words, one can go through an entire quality assurance cycle and spend only pennies.

Now, onto to my 10 tips.

10 Database Management Tips and Tricks

1. Normalize Your Data to Optimize Performance

As someone who has managed a lot of databases, I cannot overstate the importance of data normalization. This process is absolutely crucial for maintaining an efficient, reliable, and well-structured database. Data normalization is a methodology that involves organizing data in a database to reduce redundancy and improve data integrity.

By distributing data across different tables and establishing relationships between these tables, normalization ensures that each piece of data is stored only once. This reduces data redundancy, saving on storage space and increasing the efficiency of data retrieval operations.

Moreover, normalization enhances data integrity by enforcing consistency. When data is stored redundantly, there's a risk of inconsistency—if data is updated in one place but not another, it can lead to discrepancies. Normalization mitigates this risk by ensuring that each unique data point is stored only once, thereby promoting data accuracy and consistency.

2. Implement Regular Backups and Test Them

It's crucial to underline that taking regular database backups is not just a good practice but a necessity. But backing up your data is only half the battle; the other half is making sure those backups actually work when you need them. This is where backup testing comes into play.

The importance of testing your backups periodically cannot be overstated. Without verification, you may be under the false impression that your data is safe when, in fact, you could be facing potential data loss or corruption scenarios.

Backup testing ensures that the data recovery process will function correctly in case of a system failure or data loss incident. It identifies potential issues such as data corruption, incomplete backups, or problems with the backup software itself, allowing you to rectify these before disaster strikes.

The benefits of this approach are manifold. Not only does it provide peace of mind, but it also ensures business continuity, minimizes downtime, and protects against potential financial losses or reputational damage stemming from data loss.

With Timescale, you can save time with automatic backups, upgrades, and failover.

3. Monitor Database Performance Actively

Continuous monitoring is a fundamental aspect of maintaining an efficient and reliable database system. It allows us to proactively identify potential issues, optimize performance, and ensure the smooth operation of your database.

There are several tools and methods we can utilize to continuously monitor the performance of a database:

Performance metrics: These are key indicators of the health and efficiency of your database. They include things like query execution time, CPU usage, memory usage, and disk I/O. By regularly checking these metrics, we can identify any anomalies that may indicate a problem.

Query analysis: By examining the queries that are run against our database, we can identify inefficient operations that may be slowing down performance. Tools like SQL Profiler or pg_stat_statements (for PostgreSQL/Timescale) can provide valuable insights into query performance.

Database logs: Logs can provide a wealth of information about what's happening within our database. By analyzing these logs, we can identify errors, track changes, and gain insight into the overall operation of our system.

Automated monitoring tools: Tools such as Nagios, Zabbix, or Datadog can automatically track performance metrics and alert us to potential issues. These tools can be set up to monitor TimescaleDB just as effectively as they do for traditional RDBMSs.

Load testing: This method simulates high-load scenarios to see how the database responds. It can help identify bottlenecks and areas that need optimization.

See how you can test performance improvements on up-to-date production data with Timescale.

4. Secure Your Database Against Unauthorized Access

In today's digital age, where data breaches are all too common, implementing robust security measures is not just a good practice—it's an absolute necessity.

Firstly, user roles and permissions play a critical role in database security. By assigning specific roles and permissions to each user, you can control who has access to what data and what actions they can perform. This principle of least privilege—providing only the necessary access needed for a user to perform their tasks—helps minimize the potential damage from accidental or malicious actions.

Timescale has a robust role-based access control. This allows for finely tuned access controls, ensuring that users can only access and manipulate the data they need to perform their job. Plus, we are SOC2 Type II and GDPR compliant.

Secondly, it's crucial to stay up-to-date with security patches. Software providers release these patches to fix known vulnerabilities that malicious actors could exploit.

As a user of Timescale, you don’t have to worry about Timescale upgrades or patches: they will be handled automatically by the platform during a maintenance window picked by you. These upgrades are backward compatible and nothing you should worry about. They require no downtime.

5. Plan for Scalability From the Outset

Planning for future growth requires considering factors like increased user load, larger datasets, and integration with other systems. Here's how you can do it:

Scalability: Scalability is the ability of a system to handle increased load without impacting performance. For databases, this means being able to handle more queries, larger datasets, and more users.

Data Partitioning: Partitioning splits your data into smaller, more manageable pieces, allowing for improved query performance and easier maintenance.

Indexing: Proper indexing can significantly improve query performance, especially as your database grows.

Integration: As your system grows, it may need to integrate with other systems. Designing your database with this in mind from the start can save you a lot of headaches down the line.

Data archiving and retention: Over time, your database will accumulate large amounts of data. Implementing data archiving strategies and setting up retention policies will help manage this data growth.

Monitoring and performance tuning: Regular monitoring and tuning of your database can help identify potential bottlenecks and optimize performance.

6. Optimize Queries for Efficiency

Inefficient queries can significantly impact a database's performance, increasing I/O load, CPU usage, and blocking. These poorly optimized queries can be the root cause of many web application bottlenecks, often performing a large number of reads but returning a relatively small number of rows. This inefficiency can lead to slower response times and diminished user experience.

To improve the performance of your database, consider the following strategies:

Optimize your queries: In many cases, database performance issues are caused by inefficient SQL queries. Avoid using SELECT * statements as they can slow down your system by retrieving unnecessary data. Instead, specify the exact columns you need. Also, avoid nested queries and use joins where possible for more efficient execution.

Add missing indexes: Table indexes in databases help retrieve information faster and more efficiently. Without proper indexing, the database server must scan the entire table to retrieve the desired data, which can be very time-consuming with large datasets.

Use appropriate data types: Using improper data types can lead to unnecessary space usage and slower query performance. Always choose the most appropriate data type for your data to ensure efficiency.

Regular maintenance: Regular database maintenance activities like updating statistics, rebuilding indexes, and removing old data can help maintain optimal performance.

Monitor Query Performance: Keep an eye on your query performance over time. Tools like pg_stat_statements can provide valuable insights into query performance and help identify inefficient queries that need optimization7.

7. Regularly Update and Patch Your DBMS

As I mentioned, ensuring your DBMS is consistently updated is a crucial part of maintaining a robust, secure, and efficient database environment.

Bug fixes: Updates often include fixes for bugs identified in previous versions of the software. These bugs can range from minor usability issues to significant problems that can impact the system's performance or functionality. By updating your DBMS regularly, you ensure that these issues are resolved promptly, allowing your database to operate smoothly and efficiently.

Security patches: Security is a paramount concern in any database system. Updates often include security patches that address vulnerabilities identified in the software. These patches are essential for protecting your data from potential threats and breaches. Regular updates help fortify your database's security, safeguarding your valuable data.

New features: Each update potentially brings new features and enhancements to the existing functionality. These improvements can provide more efficient ways to manage and manipulate your data, improve query performance, or introduce new capabilities that can enhance your database's overall utility.

Performance improvements: Updates often include optimizations to improve the DBMS's performance. These could be enhancements to the query processing engine, better memory management, or more efficient algorithms for handling data. By keeping your DBMS updated, you can benefit from these performance enhancements, ensuring your database operates at its optimal speed and efficiency.

8. Maintain Comprehensive Documentation

Customers often praise our Docs, and we take great pride in them. Having detailed documentation for your database schema, stored procedures, triggers, and other elements is crucial. This practice is not just a good-to-have—it's a necessity for robust, efficient, and maintainable database management.

9. Employ Data Archiving Strategies

Archiving older data is an effective strategy to improve the performance of your database management system. It involves moving infrequently accessed or non-essential data from the primary database to a secondary storage system, which can lead to several performance benefits.

To effectively archive data without loss, consider the following strategies:

Define archiving policies: Establish clear policies on what data should be archived, when, and how. This could be based on the age of the data, its relevance to current operations, or legal requirements.

Use data partitioning: In Timescale, you can use automatic partitioning management features to segregate older data easily.

Ensure data accessibility: Even though data is archived, it may still need to be accessed occasionally. Ensure your archiving solution allows for easy data retrieval when necessary (like Timescale with S3!).

Data validation: Before and after archiving data, validate it to ensure no data has been lost during the process. This can be done by comparing record counts or using data validation tools.

Regularly review archiving strategy: As business needs change, so too should your archiving strategy. Regularly review and update your strategy to ensure it continues to meet your organization's needs.

Timescale allows you to tier data to Amazon S3: By running a simple command on your hypertable (add_tiering_policy), you can automatically tier older data to a low-cost, infinite storage layer built on Amazon S3. Yet the data still remains fully queryable from within your database, and this tiering is transparent to your application.

10. Leverage Database Caching Mechanisms

Understanding caching

In essence, caching involves storing frequently accessed data in a high-speed access area—known as a cache—for quick retrieval. When a database query is executed, the system first checks the cache. If the requested data is found (a cache hit), it's returned immediately, bypassing the need for time-consuming disk reads.

The impact of caching

The benefits of caching are quite substantial:

Faster data retrieval: Cache memory is faster than disk storage. Hence, retrieving data from the cache significantly reduces response times, speeding up database operations.

Reduced disk I/O: By serving data from the cache, you minimize disk I/O operations, reducing wear on your storage devices and freeing them up for other tasks.

Improved system performance: The cumulative effect of faster data retrieval and reduced disk I/O leads to the improved overall performance of your database system.

Implementing effective caching strategies

To leverage caching effectively, consider the following strategies:

Identify frequently accessed data: Analyze your workloads to identify data that are accessed often. This could be data used in popular queries or reports. Prioritize this data for caching.

Use appropriate cache size: The cache size should be large enough to store frequently accessed data but not so large that it consumes excessive system resources. In Timescale, you can configure shared_buffers to determine the amount of memory dedicated to the cache.

Monitor and adjust: Regularly monitor your cache's hit ratio (the percentage of total requests served by the cache). A low hit ratio may indicate that your cache size is too small or that the wrong data is being cached. Adjust your caching strategy based on these insights.

The Future Is Cloud-First

I guess I am old in a way. But going through these experiences helped me understand the great value of Timescale. When I work with customers, I no longer need to freeze in a data center, helping a customer install yet another rack-mounted piece of hardware. Timescale customers no longer need to buy hardware that essentially becomes obsolete the day it is bought and installed.

Long gone are the days of waiting for new hardware to increase the capacity of a system since virtualization allows for dynamic resource allocation. And can I say how much I don’t miss keeping up on O/S and security patches?

Lastly, what I hope Timescale customers will appreciate, just as much as everything else, is the expertise available to help them from a worldwide Support team. I started my career in technology as a support engineer. This was well before the modern support mentality—we actually spoke with customers either on the phone or via personal email interaction.

Timescale Support is fully staffed, offering the same high-touch support. They’re ready to help on a myriad of topics, such as data migration, schema design, data modeling, query or ingest performance, compression settings, and more, providing in-depth consultative support at no additional charge.

We often forget all that goes into providing a twenty-four-seven database platform. My hope is Timescale customers occasionally take a moment to think about our technology journey and appreciate that they can concentrate on their applications and don’t need to worry about how Timescale has learned from the past to provide a database platform for the future.

Keep reading to learn how to save money and improve performance by carefully managing your time-series data.

And, if you are starting to understand the appeal of managed databases, embark on this journey and start prioritizing your applications: sign up for Timescale. It is free for 30 days, no credit card required.