How OpenSauced Is Building a Copilot for Git History With pgvector and Timescale

This is an installment of our “Community Member Spotlight” series, in which we invite our customers to share their work, spotlight their success, and inspire others with new ways to use technology to solve problems.

Hello! My name is John McBride, and I'm a Sr. Software Engineer, Head of Infrastructure at OpenSauced, a centralized hub for gaining actionable insights on open source projects and their contributors.

OpenSauced was founded in 2021 to address a key pain point that Bdougie, our founder, identified during his time at GitHub. In his role there, he met with top open-source maintainers to understand what features were missing from GitHub's offerings. Bdougie's conviction to solve this problem was so strong that he left his position at GitHub, with their investment, to start a team dedicated to tackling this challenge.

I'm very excited to bring you this guest blog post on how we use Timescale at OpenSauced to provide insights and metrics on open-source projects at GitHub's massive scale. I hope this illuminates some of the power and capabilities that Timescale has unlocked for our small team and how it could work for you too! Cheers!

There are a lot of GitHub repositories out there.

GitHub estimates that there are more than 420 million repositories and more than 100 million developers on the platform. That's a lot of activity, with events happening near-constantly! OpenSauced provides close to real-time metrics and insights into all that data and all those events. With millions and millions of events flowing through our data pipelines, how did we build our platform and data infrastructure at GitHub’s scale?

One of the most important technologies that powers how OpenSauced ingests and integrates GitHub events data is Timescale. Through its powerful time-series mechanisms, we can integrate tens of thousands of new real-time GitHub events every hour and provide deep insights and metrics to our end users based on that data. Timescale gives us high-scale ingestion, fast serving of time-series data, and excellent reliability.

In this blog, we'll explore why OpenSauced chose Timescale and how it integrates with the OpenSauced platform.

Why Timescale?

One big reason we chose Timescale was its promising initial performance results. Knowing that we'd need to ingest and store massive amounts of time-series data, we spun up several time-series databases and devised an experiment to test the limits of each stack. We wanted to see what would be performant out of the box with little fussing around. Because we're a small team, operational wins go a very long way!

During the evaluation phase, we considered only managed services: AWS Timestream, InfluxDB, Timescale, cloud-managed Prometheus, TiDB, and cloud-managed Apache Cassandra.

Out of the box during our initial experiments, Timescale provided excellent performance compared to the other time-series databases when ingesting and serving our time-series data. Here’s how we ran our experiments: we had a simple runner that would read JSON files of GitHub events and attempt to insert those into a database.

Over the course of about 30 hours, Timescale was able to ingest over 10 years of GitHub events data, which was over 10 TB, without dropping anything! Doing some quick back-of-the-napkin math, Timescale was able to ingest this data at about a GB every 10 seconds! We also ran some more intense queries against the ingested data and found that “time_bucket” queries against hypertables would return in under 300 ms for months of data after applying some indexes. This was similar, if not better, performance compared to the other, more specialized time-series databases.
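The back-of-the-napkin math above can be checked in a few lines. This is only a sanity check, assuming decimal units (1 TB = 1,000 GB) and rounding "over 10 TB" and "about 30 hours" to exactly 10 TB and 30 hours:

```python
# Sanity check of the ingest rate quoted above.
# Assumptions: 1 TB = 1,000 GB, exactly 10 TB ingested in exactly 30 hours.
TOTAL_GB = 10 * 1_000
ELAPSED_SECONDS = 30 * 60 * 60

gb_per_second = TOTAL_GB / ELAPSED_SECONDS
seconds_per_gb = ELAPSED_SECONDS / TOTAL_GB

print(f"{gb_per_second:.3f} GB/s, or one GB every {seconds_per_gb:.1f} s")
# prints "0.093 GB/s, or one GB every 10.8 s"
```

That lines up with the "about a GB every 10 seconds" figure.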

Another big reason we chose Timescale is our small engineering team's existing familiarity and expertise with PostgreSQL. Since we are still growing, keeping the number of technologies in our stack small is a big win operationally. Being able to take advantage of a powerful time-series store right within Postgres was a huge plus. This way, we can continue to leverage what we already know without having to spend precious time learning yet another database technology and its query language while still getting flagship-class performance.

Finally, we found the Timescale pricing model very appealing. Because we planned to continuously ingest a huge amount of data, we knew that ingress costs could quickly add up. Thankfully, with Timescale’s pricing model, you “only pay for compute and the storage you use.” So, when using Timescale, we don’t have to worry about how much data we’re ingesting and can target scaling to GitHub’s size without having to engineer more efficient ingress!

This all made us very bullish on leveraging a known technology stack alongside a cost-effective managed service that would give us reliable and fast performance. And, in the end, after integrating with our production systems, we’ve found that the initial performance speeds and reliability we saw still hold very true in our production environments! We continue to ingest tens of thousands of GitHub events every day and still see excellent query performance.

So, how did we implement this data pipeline and integrate it with Timescale? Let's dive in!

The Events Firehose

GitHub surfaces an events "firehose": an HTTP endpoint that constantly surfaces public events happening on GitHub in near real time. You can see for yourself! If you go to https://api.github.com/events you'll see a payload of JSON that represents a very small snippet of events happening on GitHub at that very moment.

If you reload, you'll get a different snapshot from the moment you hit the API endpoint again. Everything from issues, comments, pull requests, and reviews to much, much more is surfaced through this interface in an unending stream of data.

One of the key elements of these "events" is the "event_time" metadata field: this denotes when an event occurred on GitHub. Using these events, you can begin to build a very accurate picture of what's going on across the entire open-source GitHub ecosystem, all built up over a large slice of time.

For example, let's take a look at a pull request in the github.com/timescale/timescaledb open-source repository on GitHub: when a pull request is opened, it comes through the events firehose with a bunch of metadata, including the "event_time," who opened the pull request, the "action" that was taken, the content of the pull request, etc.

In this particular pull request, Sven Klemm fixes the way "column = false" works in Timescale's compression mechanism. This event first comes through the events feed as "opened" at approximately "2024-04-29 13:08:00+00". Later that day, when the pull request is reviewed and merged, it comes through the events feed again, but this time as "closed," with a "merged_at" timestamp and an "event_time" timestamp for when the merge event happened.

Put together, these events let you build a picture of the different "heartbeats" of the projects, communities, and ecosystems that make up OpenSauced's insights. This is how we build an accurate picture of what’s going on at any given time on GitHub.

The Data Nozzle

One of the biggest challenges in working with the GitHub events feed is ingesting data fast enough to capture an accurate picture across the entire ecosystem: it moves so fast that you need a data pipeline that can read the data from the firehose API and store it quickly enough to move on to the next chunk. It really is a "nozzle" that needs to consume the firehose without being "leaky" and losing data.

We call the service we built to do this "its-pizza-time": it's a Go micro-service that continuously polls the GitHub events feed (drinking from the firehose), does some minimal processing and validation of the data, and finally sends it into Timescale in batches to be stored.
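To make the poll, validate, and batch loop concrete, here is a minimal sketch in Python (the real service is written in Go, and this is not its code). The `fetch_page` callable, the `REQUIRED_FIELDS` list, and the batch size are all assumptions for illustration; the field names `id`, `type`, `created_at`, and `repo` are the ones the public GitHub events payload uses, and mapping `created_at` to our "event_time" column is likewise assumed here.

```python
from typing import Callable, Iterable, Iterator

# Fields this sketch insists on before accepting an event (an assumption).
REQUIRED_FIELDS = ("id", "type", "created_at", "repo")


def is_valid(event: dict) -> bool:
    """Minimal validation: every required field is present and non-empty."""
    return all(event.get(field) for field in REQUIRED_FIELDS)


def drain(
    fetch_page: Callable[[], Iterable[dict]],
    polls: int,
    batch_size: int = 100,
) -> Iterator[list]:
    """Poll the feed `polls` times and yield de-duplicated batches of events.

    Consecutive snapshots of the feed can overlap, so events already seen
    are skipped by ID. A long-running service would need to bound `seen`.
    """
    seen = set()
    batch = []
    for _ in range(polls):
        for event in fetch_page():
            if not is_valid(event) or event["id"] in seen:
                continue
            seen.add(event["id"])
            batch.append(event)
            if len(batch) >= batch_size:
                yield batch
                batch = []
    if batch:
        yield batch  # flush whatever is left after the last poll
```

Passing the fetcher in as a callable keeps the loop testable without touching the network; in practice it would wrap an HTTP call to the events endpoint.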

Obviously, when processing many thousands of events every minute, we need a time-series database that can handle that kind of ingestion rate and keep up: while the number of events flowing through the feed can vary dramatically (given the time of day/night, if it's a weekend or a holiday, etc.), we've experienced stellar performance from Timescale's ingestion and have yet to see any major loss of data.

Looking again at some "back of the napkin" math, in general, we easily process up to a million events every day. And looking at our Timescale metrics, we have consistently low storage I/O bandwidth: we can confidently say that Timescale doesn’t represent any kind of bottleneck in this ingestion system and is a bedrock of how this data pipeline works.

Landing Events in Timescale

To further improve performance, we batch upsert these events into separate Timescale tables, one per event type. For example, we have an "issues_github_events" table, a "pull_request_github_events" table, and many more for the various GitHub event types we consume.

During high-load times, some batches can contain many hundreds of events. Again, Timescale has no problem ingesting such a consistently large volume of batched data and has continued to provide us with excellent service availability for our production environments.
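As a rough illustration of splitting a mixed batch by event type before upserting, here is a small Python sketch. The mapping below is hypothetical: the event type strings are GitHub's, the table names are the ones that appear in this post's SQL, but how the two are paired up in our service is assumed for the example.

```python
from collections import defaultdict

# Hypothetical mapping from GitHub event "type" values to table names.
TABLE_FOR_EVENT_TYPE = {
    "IssuesEvent": "issues_github_events",
    "PullRequestEvent": "pull_request_github_events",
    "WatchEvent": "watch_github_events",
}


def group_by_table(events):
    """Group events into per-table batches, skipping unmapped event types."""
    batches = defaultdict(list)
    for event in events:
        table = TABLE_FOR_EVENT_TYPE.get(event.get("type"))
        if table is not None:
            batches[table].append(event)
    return dict(batches)
```

Each resulting list can then be written to its table in a single batched statement rather than one round trip per event.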

Let's take a closer look at some of the important bits in one of our Timescale tables to get a better understanding of how our data is structured (which will then help us understand how our API queries for this time-series data):

CREATE TABLE IF NOT EXISTS pull_request_github_events (
    -- metadata
    ---------------------------------------------------------------------------
    event_id BIGINT,
    event_time TIMESTAMPTZ NOT NULL,
    repo_name TEXT,

    -- event data for pull request events from GitHub
    ---------------------------------------------------------------------------
    pr_action TEXT,
    pr_number BIGINT,
    pr_title TEXT,
    pr_author_login TEXT,
    pr_is_merged BOOL
);

This doesn't look too much different from a normal Postgres table: it has an ID, it has some content, and it has some metadata. But what turns this normal Postgres table into an extremely powerful time-series hypertable is this chunk:

-- make the table a hypertable for time-series data based on the github firehose
-- event_time metadata timestamp
SELECT create_hypertable(
    'pull_request_github_events',
    'event_time',
    if_not_exists => TRUE
);

Here, we're transforming the pull requests table into a hypertable that can perform powerful time-series operations on the "event_time" timestamp column. This way, we can begin to perform fast queries that aggregate time series and histograms to be surfaced in our API.
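For intuition about why time partitioning helps, here is a toy Python model of the idea (not TimescaleDB's actual implementation). It assumes fixed 7-day chunks aligned to the Unix epoch; both the width and the alignment are assumptions made for the sketch. The point is that a query over the last 30 days only needs to touch the handful of chunks that overlap that window, however much older data exists.

```python
from datetime import datetime, timedelta, timezone

# Toy model only: chunk width and epoch alignment are assumed here.
CHUNK_WIDTH = timedelta(days=7)
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def chunk_index(ts: datetime) -> int:
    """Return the index of the fixed-width time chunk that contains `ts`."""
    return (ts - EPOCH) // CHUNK_WIDTH


def chunks_for_window(start: datetime, end: datetime) -> range:
    """Return the chunk indexes a query over [start, end] has to look at."""
    return range(chunk_index(start), chunk_index(end) + 1)


now = datetime(2024, 4, 29, 13, 8, tzinfo=timezone.utc)
window = chunks_for_window(now - timedelta(days=30), now)
print(f"a 30-day query touches {len(window)} chunks")
```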

Integration With the OpenSauced API

Now, on the other end of the spectrum, we want to utilize the time-series data to query for specific things in our API. Because hypertables give us lightning-fast partitioning on our time-series data based on when the event was emitted, we can perform some of the following operations:

SELECT
    time_bucket('1 day', event_time) AS bucket,
    count(*) AS star_count
FROM
    watch_github_events
WHERE
    event_time >= NOW() - INTERVAL '30 days'
    AND LOWER(repo_name) = 'timescale/timescaledb'
GROUP BY
    bucket
ORDER BY
    bucket DESC;

This gives us a time series of one-day "buckets." Each bucket represents a single day and holds the number of stars that the timescale/timescaledb repository received on that day.

Note: This may be a bit confusing if you’re unfamiliar with how the various GitHub APIs work since “watch” events from the perspective of the GitHub Events API are actually “stars.” So, counting watch events counts the number of stars a repository has received over time.
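If you want a mental model for what the daily bucketing computes, the following Python sketch does the same grouping in memory. It is only an illustration of the aggregation, not how Timescale executes it, and it assumes timezone-aware timestamps bucketed at midnight UTC.

```python
from collections import Counter
from datetime import datetime, timezone


def daily_counts(event_times):
    """Count events per UTC day, newest day first.

    Expects timezone-aware datetimes. Returns (bucket_start, count) pairs,
    mirroring the bucket and star_count columns of the query above.
    """
    buckets = Counter(
        ts.astimezone(timezone.utc).replace(hour=0, minute=0, second=0, microsecond=0)
        for ts in event_times
    )
    return sorted(buckets.items(), reverse=True)
```

Days with no events simply do not appear in the result, which is also true of the plain GROUP BY query.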

We can see this data being used in the "Stars" chart below alongside other time-series data for the timescaledb repo page, all powered by the OpenSauced API which uses our Timescale data:

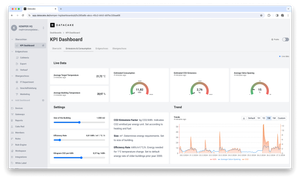

The API powered by Timescale serves all other metrics and data in the OpenSauced app. For example, here’s one of my Workspaces that tracks the Neovim ecosystem. It seamlessly gathers information about over 40 repositories and surfaces information about the contributors, pull requests, and activity over the last 30 days.

Through Timescale, we’re also able to surface information across several of our hypertables that track issues, comments, and pull requests for individual contributors. Here’s my Contributor Insight page for the OpenSauced engineering team. Here, we can see where people are contributing, the overall activity over the last 30 days, and more!

Again, all these metrics, with months and months of data across many different hypertables, are all powered through our API by Timescale!

Indexes and Other Postgres Things!

Since, under the hood, Timescale is powered by PostgreSQL, we have taken advantage of many of the other powerful features provided by the underlying database technology that we all know and love. For example, we have built some additional indexes on our pull request table that index on the "event_time" alongside the pull request author and repo name:

CREATE INDEX ON pull_request_github_events (pr_author_login, event_time DESC);

CREATE INDEX ON pull_request_github_events (pr_author_login, repo_name, event_time DESC);

This gives us really fast query times on more complex queries, like those that also filter on a specific repository and the pull request author's user login:

SELECT
    time_bucket('1 day', event_time) AS bucket,
    count(*) AS pr_count
FROM
    pull_request_github_events
WHERE
    event_time >= NOW() - INTERVAL '30 days'
    AND LOWER(repo_name) = 'open-sauced/api'
    AND LOWER(pr_author_login) = 'jpmcb'
GROUP BY
    bucket
ORDER BY
    bucket DESC;

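This returns a time series of pull request events for me in the open-sauced/api GitHub repository. This query is incredibly fast, despite having to go through a month of data, because it can use the "pr_author_login" indexes we created above. (One caveat: for Postgres to use those indexes with the LOWER(...) comparisons shown here, the indexes need to be built on the same LOWER(...) expressions, or the query needs to compare the columns directly.) This is a big part of what powers our user profiles on OpenSauced.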

Developing AI-Powered Capabilities With pgvector

Recently, we've been exploring retrieval-augmented generation (RAG) using the data we store in Timescale to create an AI piece of our offering called StarSearch, a “Copilot, but for git history.” In short, we use pgvector, a Postgres extension for vector storage that Timescale supports, to perform fast similarity search across relevant GitHub events in order to answer end-user questions. This way, we can perform complex searches across a wide diversity of events, looking at the “unstructured” parts of the data (like pull request titles, pull request content, etc.).

Vectors stored in Timescale are really just lists of numbers. For most AI/ML applications, those numbers come from an embedding machine learning model: they place the input text at a point in a high-dimensional space, so that the "distance" between two points reflects how similar the texts are. In other words, a vector is a sort of snapshot of the "understanding" the embedding model has of the input text. Then, using a cosine similarity search (a form of “nearest neighbor search”) via pgvector, we can retrieve the rows in our database that are most similar to an input vector embedding.

Again, this unlocks a way for us to find relevant rows in the unstructured data that similarly match an input text or question. We often call this finding a “needle in the haystack” since it’s so good at finding relevant content based on some search query.
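To show what a cosine-based nearest neighbor search boils down to, here is a small brute-force sketch in Python. It is an in-memory illustration of the math only, not how pgvector is implemented; the `(row_id, embedding)` tuple shape and the function names are assumptions for the example.

```python
import heapq
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity: 0 means same direction, 1 orthogonal.

    Raises ZeroDivisionError if either vector is all zeros.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same number of dimensions")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def nearest(query, rows, k=5):
    """Return the k rows closest to `query`, nearest first.

    `rows` is an iterable of (row_id, embedding) tuples.
    """
    return heapq.nsmallest(k, rows, key=lambda row: cosine_distance(query, row[1]))
```

Because cosine distance depends only on the angle between vectors, two texts can be "close" even when their embeddings have very different magnitudes.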

We chose to use pgvector on top of PostgreSQL on Timescale for a few reasons, the primary one being that we didn’t want to replicate a lot of data across multiple databases. We had considered using other vector stores like Qdrant and Pinecone, but we quickly realized that using a completely separate vector database would mean we’d likely need to duplicate a lot of metadata across both databases. And because of the very “heavy” nature of each of our individual data units, this would mean replicating potentially hundreds of GBs.

We had also considered coming up with a mechanism to “sync” and “reference” the vectors in a separate vector store with what exists as a row in our Timescale database. This would have worked fine, but it would have required our small team to take on huge amounts of software complexity in order to keep the distributed databases aligned. We had no vested interest in managing or inventing this kind of distributed database technology.

When we discovered pgvector and the extremely good performance it can provide, it quickly became a no-brainer that we would use vectors as an additional column in our database. I recommend reading about how pgvector over the last year alone has had incredible performance increases and only continues to get better. By some estimates, with indexes and tuning of the database parameters, you can start to reach performance levels similar to specialized vector databases.

By using pgvector on our existing Postgres, where our data already lives, we wouldn’t have to manage an additional vector database service, duplicate data, or keep the two databases in sync. We could simply drop vectors into the relevant rows right next to all the data.

Let's look a bit deeper at how we do this!

First, we enable the pgvector extension. Timescale makes this really simple since the extension is already installed on all of the Postgres databases we have provisioned. Easy! Next, we create a new column in our event table that can store the vector embeddings.

CREATE EXTENSION IF NOT EXISTS vector;

ALTER TABLE pull_request_github_events
    ADD COLUMN embedding vector(1024);

We chose to use a slightly smaller vector size than what most embedding models support in order to save on cost and reduce the amount of compute during vector search. Thankfully, depending on the embedding model you’re using, a slightly reduced vector dimension shouldn’t impede performance too much! This is a calculated trade-off, and for us, this is right around the sweet spot of performance and optimization.

Then, we can add some data to our vector embedding column (for the sake of brevity, I’ve taken the liberty to truncate the vector in this example: in the real world, the vector you’d be upserting would be exactly 1,024 numbers long for an embedding column of that size):

UPDATE pull_request_github_events
SET embedding = ARRAY[0.1, 0.2, 0.3 … 0.7, 0.8, 0.9]::VECTOR
WHERE repo_name = 'open-sauced/app'
    AND pr_number = 123;

This adds a very simple vector of numbers to the "embedding" of an existing row. The embedding would be generated from content surrounding the pull request for that row. We use some additional RAG techniques where we use a large language model (LLM) to summarize the contents of the row, pipe that summary to an embedding model, and then store the vector embedding.

We've found that storing the embeddings right next to the content in question is a huge win and prevents a lot of metadata duplication you might see with other vector stores: no sense in duplicating all that data if we can take advantage of it right in our Timescale Postgres database!
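The summarize, embed, and store steps could be wired together roughly like this. It is a sketch under stated assumptions rather than our production code: `summarize` and `embed` are hypothetical callables standing in for the LLM and the embedding model, the `%s` placeholders assume a psycopg-style Postgres driver, and the function only builds the statement and parameters instead of executing them.

```python
EMBEDDING_DIM = 1024  # matches the vector(1024) column defined earlier


def to_vector_literal(values):
    """Format numbers in pgvector's text form, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(repr(float(v)) for v in values) + "]"


def build_embedding_update(row, summarize, embed):
    """Return (sql, params) that store an embedding for one pull request row.

    `summarize` maps a row to summary text and `embed` maps text to a list
    of floats. Both are hypothetical stand-ins supplied by the caller.
    """
    summary = summarize(row)
    vector = embed(summary)
    if len(vector) != EMBEDDING_DIM:
        raise ValueError(f"expected {EMBEDDING_DIM} dimensions, got {len(vector)}")
    sql = (
        "UPDATE pull_request_github_events "
        "SET embedding = %s::vector "
        "WHERE repo_name = %s AND pr_number = %s"
    )
    return sql, (to_vector_literal(vector), row["repo_name"], row["pr_number"])
```

Checking the dimension before sending the statement gives a clearer error than letting the database reject a vector of the wrong size.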

Finally, to perform similarity search, we can run the following query using an input vector as the search criteria (again, truncating for the sake of this example):

SELECT *
FROM pull_request_github_events
ORDER BY embedding <=> '[0.4, 0.5, 0.6 … 0.7, 0.8, 0.9]'::VECTOR
LIMIT 5;

This orders the pull requests table by cosine distance to the input vector (pgvector's "<=>" operator) and returns the five nearest neighbors. Cosine similarity is a popular similarity search technique that is especially good for textual "distance" searches. In our AI application, the input vector to this query would be the embedding from a question provided by an end user. Using that vector embedding, we can find the top five nearest neighbors that are most closely associated with the question.

We've further enhanced this by adding a fast vector search index that trades some recall accuracy for much faster query times:

CREATE INDEX ON pull_request_github_events USING hnsw (embedding vector_cosine_ops);

The Hierarchical Navigable Small World (HNSW) algorithm is a very popular choice for nearest neighbor search and is one of the most performant types of indexes for this sort of application. But be warned: HNSW is an approximate index, so you trade off some accuracy for speed. If your similarity searches always need to return the exact nearest neighbors, an approximate index like this is not the right fit! We’re okay with the query vector returning slightly different results since we’re aiming for speed and we’re okay with our AI application having some nuance and variance in its answers.
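One way to put a number on that trade-off is recall at k: of the true k nearest neighbors, what fraction did the approximate search return? The helper below is a minimal sketch; it assumes you have already collected the row IDs from an exact, unindexed search and from the indexed search for the same query vector.

```python
def recall_at_k(exact_ids, approx_ids):
    """Return the fraction of exact nearest neighbors the approximate search found."""
    exact = set(exact_ids)
    if not exact:
        raise ValueError("exact_ids must not be empty")
    return len(exact & set(approx_ids)) / len(exact)


# Four of the five true neighbors came back from the approximate search.
print(recall_at_k([1, 2, 3, 4, 5], [1, 2, 3, 4, 9]))  # prints 0.8
```

Averaging this over a sample of real queries gives a sense of how much accuracy the index is giving up.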

Finally, we can use these results with a generative AI (most often a large language model like GPT-4, Mistral, or Claude) in its context window to provide additional, relevant information based on the input user's query and the query embedding. This is the "RA" part of "RAG" where we are retrieving relevant information and augmenting the input prompt to the large language model. This ends up being a very powerful technique to inform an LLM about information that it might be missing or would never have context on.

How does this perform? With thousands and thousands of new events every day, does pgvector have any problem returning relevant and performant results? As of today, we’re at over 100 million vectors, and that number continues to grow. And yet, we usually see results in under 500 ms, often faster than 300 ms. And, for our use case, anything under one second is blazingly fast. Overall, we have been quite impressed with the performance of the HNSW algorithm and Timescale’s ability to still work under our production load.

So, the actual end-user flow would be:

- A user asks OpenSauced’s StarSearch a question.

- The question gets embedded by an embedding model: this represents the snapshot “understanding” of the input question from the user.

- Using that vector embedding generated from the end user, we can find the nearest neighbors in the Timescale vector store and return five rows very quickly.

- These rows from the database are used in the RAG application to inform the context to the large language model to then answer the question back to the user.
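The flow above can be sketched as a single function. Everything passed in is a hypothetical stand-in: `embed` for the embedding model, `search` for the pgvector query, and `complete` for the large language model call. The prompt wording is also just an example, not the prompt StarSearch uses.

```python
def answer_question(question, embed, search, complete, k=5):
    """Embed the question, retrieve k similar rows, and ask the LLM with context.

    `embed(text)` returns a vector, `search(vector, k)` returns rows as dicts
    with repo_name, pr_number, and pr_title keys, and `complete(prompt)`
    returns the model's answer. All three are supplied by the caller.
    """
    query_vector = embed(question)
    rows = search(query_vector, k)
    context = "\n".join(
        f"- {row['repo_name']}#{row['pr_number']}: {row['pr_title']}" for row in rows
    )
    prompt = (
        "Answer the question using only the pull request context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
    return complete(prompt)
```

Keeping the three dependencies as plain callables makes it easy to swap models or to test the flow with fakes.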

We can see that in action in our StarSearch application:

Here, based on my input query asking about people who know Tailwind CSS and Rust, we can see that it finds relevant information in our cosine search and answers the nuanced question with confidence. By augmenting an LLM prompt with relevant information from our pull requests events table in Timescale using pgvector, we’ve been able to unlock a whole new kind of insight for our end users. Very powerful!

All in all, Timescale has been an extremely powerful and important integration for our small team. Thanks to the speed and reliability of our Timescale service, we've been able to move very quickly on some big ideas.

If you’re just getting started with Postgres or building AI applications on top of Timescale, I’d recommend a few of the following resources:

- The official Postgres docs and tutorials

- This tutorial from Timescale on using pgvector

- The official pgvector docs are a wealth of great resources!

If you’d like to connect, feel free to reach me at:

https://twitter.com/johncodezzz

https://www.linkedin.com/in/jpmcb/

Be sure to also check out the work I’ve been doing on my profile over at OpenSauced! And join the waitlist for StarSearch for early access!

We’d like to thank John and the rest of the OpenSauced team for sharing their story on how they use Timescale to power open-source metrics dashboards and pgvector, in particular, to power retrieval-augmented generation for their “Copilot for git history,” StarSearch.

We’re always keen to feature new community projects and stories on our blog. If you have a story or project you’d like to share, reach out on Slack (@Ana Tavares), and we’ll go from there.