TimescaleDB 2.3: Improving Columnar Compression for Time-Series on PostgreSQL

TimescaleDB 2.3 makes built-in columnar compression even better by enabling inserts directly into compressed hypertables.

Time-series data is relentless. In order to measure everything that matters, you need to ingest thousands, perhaps even millions, of data points per second. The more data you’re able to collect, the more in-depth your analysis is bound to be. Yet storing and analyzing this data does not come for free, and volume of time-series data can lead to significant storage cost.

TimescaleDB addressed this storage challenge through a novel approach to database compression. Rather than just compressing entire database pages using a single standard compression algorithm, TimescaleDB uses multiple best-in-class lossless compression algorithms (which it chooses automatically based on the type and cardinality of your data), along with a unique method to create hybrid row/columnar storage. This provides massive compression savings and speeds up common queries on older data.

We first introduced compression in TimescaleDB 1.5 amidst much excitement about how a row-oriented database could support columnar compression. Users were delighted with 92-96 % compression rates on their time-series data (like jereze and Vitaliy), leading to both significant storage and cost savings for their databases, as well as query performance improvements. Our approach didn’t just save users storage (and thus money), but it made many queries faster!

(These performance improvements arise because high compression rates require less data to be read from disk, and also because TimescaleDB can now read specific compressed columns from disk [rather than entire rows], and special placement optimizations further colocate commonly-requested data.)

But TimescaleDB’s columnar compression came with some useability limitations, specifically that compressed chunks were immutable once created: You couldn’t alter the schema of compressed hypertables, and you couldn’t insert or modify compressed chunks. Earlier this year, in TimescaleDB 2.1, we addressed much of the first limitation by enabling users to add and rename columns in compressed hypertables.

We’re excited that today’s release makes it even easier for developers to work with compressed hypertables. TimescaleDB 2.3 includes some key improvements to our native compression, making it suitable for almost every use case:

- You can now directly INSERT into compressed hypertables (vs decompressing, inserting, and then re-compressing chunks manually).

Read on for more about these improvements to native compression in TimescaleDB 2.3, including the how and the why.

If you’re new to TimescaleDB and want to get started today, create a free account to get started with a fully managed TimescaleDB instance (100 % free for 30 days).

If you are an existing user:

- Timescale: TimescaleDB 2.3 is now the default for all new services on Timescale, and any of your existing services will be automatically upgraded during your next maintenance window.

- Self-managed TimescaleDB: Here are upgrade instructions to upgrade to TimescaleDB 2.3.

Join our Slack community to share your results, ask questions, get advice, and connect with other developers (our co-founders, engineers, and passionate community members are active on all channels).

You can also visit our GitHub to learn more (and, as always, ⭐️ are appreciated!) And, if these are the types of challenges you’d like to help solve, we are hiring!

Shoutout to all the engineers who worked on these features: Gayathri Ayyappan, Sven Klemm, Markos Fountoulakis, and Mats Kindahl, and the entire team of reviewers and testers!

Inserting data into compressed hypertables

TimescaleDB’s native compression works by keeping recent data as standard uncompressed database rows, which allow them to be written into the database at very high rates. Then, after some time, the rows are compressed into TimescaleDB’s columnar format according to a compression policy. (More specifically, a TimescaleDB hypertable is partitioned into chunks based on rows’ timestamps, and once the chunk is older than, say, 3 days old, the entire chunk gets compressed.)

Before TimescaleDB 2.3, you couldn’t insert into these chunks once compressed. In most cases, this works fine, as many time-series workloads write only recent data (and the user can then specify a “3 day” or “1 month” threshold for compression based on their needs).

But sometimes this isn’t sufficient. You might need to insert older data into those compressed chunks on an irregular basis. For example, if some missing or delayed records are added to your data. Or you might want to do a one-off bulk import of supplemental data, e.g., you run a building management platform, and you want to add the historical records of a specific building into your database, or you are storing tick data for crypto or fintech applications, and you are importing historical information about a new symbol.

So in prior versions of TimescaleDB, you could only perform these backfills to compressed hypertables by going through several manual steps: you first had to identify which chunks your data fit into, decompress the chunks in question, then perform the INSERT of data into those chunks and finally, re-compress the chunks in question.

These steps can be tedious, especially when it involves multiple chunks and the chunks are not contiguous. (We previously offered some helper functions involving temp tables for such large backfills, but the user experience still wasn’t straightforward.)

From TimescaleDB 2.3 onward, you can now perform INSERTs directly into hypertables with compressed chunks, much like writing to any standard database table.

Here’s how it works

The problem

When you compress a chunk, the data from the original chunk is stored in an internal chunk in compressed form. This poses a problem, as you cannot directly insert a new row into the chunk as it is in compressed form.

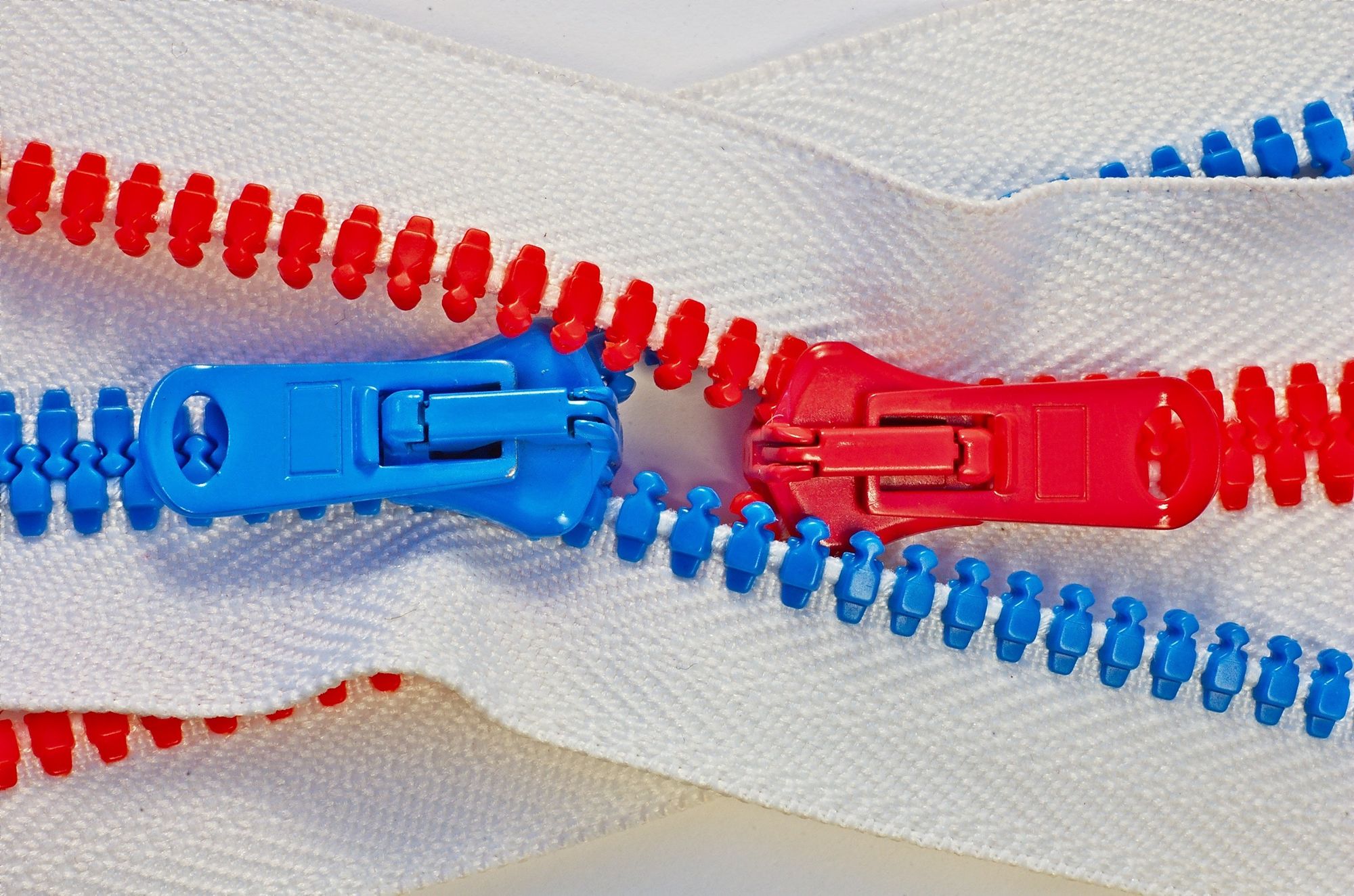

As an analogy, say you have a big array of integers, with integers in the range (1,10000) and you compress them using delta encoding, so that they are efficiently encoded and packed contiguously as a single data tuple. Now, you want to insert a new integer to this compressed series—say the number 120. How do you add this new value to the already compressed array? There’s no room for it in the physical representation of the compressed array!

This is similar to the problem we have with inserting data into compressed chunks in TimescaleDB. Except that, we now have multiple columns that could have compressed data. That's why we initially could not support inserts after a chunk is compressed and required the intermediate steps of decompressing, performing the insert, and then re-compressing the chunk.

How we solved the problem

When you insert a new row into a chunk that has been compressed, we compress this single row and save it in compressed form in the internal chunk. This is why inserting into a compressed chunk has a very small performance penalty compared to inserts to uncompressed chunks: because we are compressing these new rows in a row-by-row manner as they are written. (Thus, if you are doing a bulk backfill of a large amount of historical data, we still recommend our existing backfill approach for higher ingest performance.)

However, that is not the whole story, as INSERTs into a table also have to respect constraints, triggers, etc., that are defined on that table. We make sure that these work exactly as expected when inserting into a previously compressed chunk.

Typically after compression, the compressed chunks compress many rows together for greater storage savings. Basically, if a row has a timestamp, label, and value, then we build arrays with 1,000 rows of data, so that the 1,000 timestamps are grouped and compressed together using a compression algorithm that works well for timestamps, the corresponding 1,000 labels are grouped and compressed using a string compression algorithm, and likewise for the 1,000 metrics. It’s a bit more complex than that based on the segment-by and order-by settings defined on that hypertable, but that’s the basic idea.

But since we now have compressed data that contains only 1 row of tuples, we need to periodically recompress the chunk. This way, we can combine these individually inserted rows into the existing compressed batch of rows and not lose the storage savings from compression. But because this processing happens asynchronously and as a batch, it’s much more efficient than doing it on every insert.

This re-compression of chunks to merge individual compressed rows is executed automatically (i.e., no user intervention is required) as part of TimescaleDB’s job scheduling framework. Automated compression policies in TimescaleDB run according to a defined schedule, so we designed the re-compression step keeping in mind the fact that the compression policy will run again at some point in the near future.

Leveraging asynchrony and batching

With this approach, users just insert data into a compressed hypertable like any other database table for a smooth developer experience, while our approach leverages asynchrony and batching for greater efficiency.

Consider the alternate approach for handling inserts: identify the "compressed row" in the internal chunk that can accommodate this newly inserted row (i.e., the set of 1000 tuples that got compressed together), decompress the set of tuples in this compressed row, add the new individual row to this set, and then compress the result again.

This approach would be extremely inefficient and have very bad performance. But by amortizing the cost of decompression across many individual rows and inserts and performing it only once in an asynchronous manner, our approach supports both higher insert rates and is much more resource efficient.

See our documentation for a list of current limitations to inserting data into compressed hypertables and more information on using the feature.

Conclusion

TimescaleDB 2.3 makes native compression even better, enabling users to insert data directly into compressed chunks in hypertables.

These new features work together to give you that seamless developer experience with TimescaleDB. It’s PostgreSQL with superpowers for time-series data. And we want to take on the complexity of managing time series at scale (even trillions of rows of data), so you don’t have to worry about it.

We’re always working to improve TimescaleDB. We also welcome suggestions or discussions on our GitHub. And if you are interested in what we’re working on next, please see our public release notes and future plans. Feedback is always welcome!

Learn more and get started

If you’re new to TimescaleDB, create a free account to get started with a fully managed TimescaleDB instance (100 % free for 30 days).

If you are an existing user:

- Timescale: TimescaleDB 2.3 is now the default for all new services on Timescale, and any of your existing services will be automatically upgraded during your next maintenance window.

- Self-managed TimescaleDB: Here are upgrade instructions to upgrade to TimescaleDB 2.3.

Join our Slack community to share your results, ask questions, get advice, and connect with other developers (our co-founders, engineers, and passionate community members are active on all channels).

You can also visit our GitHub to learn more (and, as always, ⭐️ are appreciated!) And if these are the types of challenges you’d like to help solve: we are hiring!