Downsampling in the Database: How Data Locality Can Improve Data Analysis

Data processing can be resource-intensive. As datasets grow in size and complexity, efficient data processing and downsampling become even more critical: they let businesses access information quickly, identify issues, and make data-driven decisions early on, ultimately saving cost and time.

One way to optimize your data processing is by improving data locality when downsampling your data.

Let’s break down these concepts: downsampling data aims to make data more manageable by reducing its granularity. Data locality, on the other hand, is processing data as close as possible to where it’s stored—and nothing can be closer than on the same server.

So, in this blog post, we’ll perform calculations close to the data, directly in the database, to optimize data processing. We’ll evaluate the benefits of this approach and compare it to processing in the client. Finally, we’ll explore Timescale hyperfunctions as an alternative to pulling data from the database into an analytics framework hosted in your programming language.

Downsampling Decisions and My Path to Data Locality

Developers make decisions about downsampling and where to process data every day, whether or not they realize it. I remember my early days as a Ruby developer when I always tried to write beautiful code—I wanted it to look like poetry. I didn’t worry too much about the most efficient way to do things, but I strived to achieve good readability and meet business requirements.

Moving to the financial markets sector represented a huge shift. I started building trading systems in which speed was a requirement, and after days and months of optimizing code for faster trading decisions, I started seeing things very differently. Later, I joined a fast-growing company to use my code-optimization skills and carefully review several services and background jobs fighting for resources.

My perspective on expensive processing architecture expanded, as my job’s most common task was benchmarking and checking metrics around resource consumption. Every move was made to optimize the system to become more scalable: my career went from carefree coding to explicitly benchmarking my choices and analyzing the performance of each refactoring or improvement.

My competence as a programmer grew alongside the systems I worked on. I deepened my knowledge about how programming languages can use resources behind the scenes. Now, my internal checklist still looks for performance issues, and, as a user, I celebrate well-performing systems.

So, I am well aware that as datasets grow in size and complexity, the trade-offs associated with data processing and downsampling become more critical. Engineering departments welcome data teams and analysts, and eventually, the hardware infrastructure is expanded to meet the new demand.

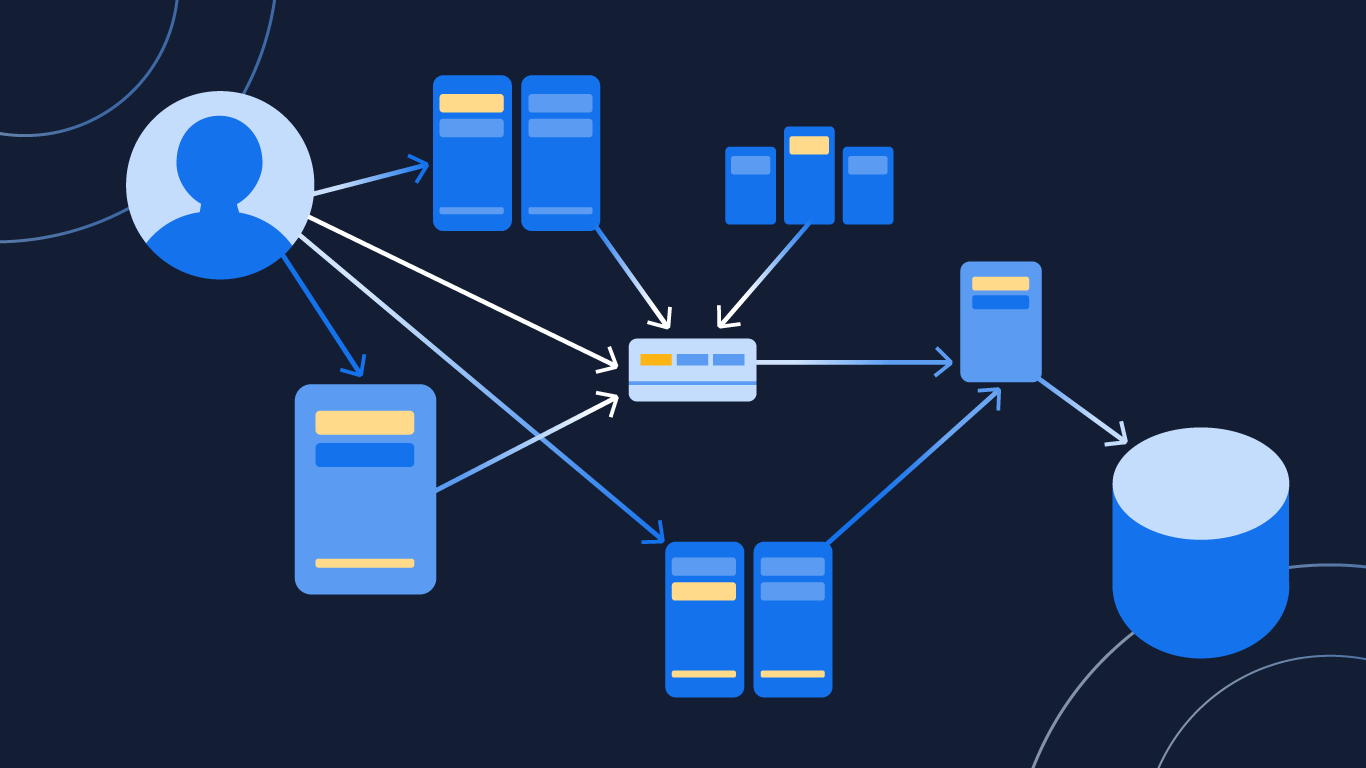

As engineers carefully analyze infrastructure costs and metrics, they identify bottlenecks. Blocking on input/output (I/O) is a common problem: it can leave threads idle waiting for the network while other threads starve or deadlock. As the system scales to more users, resource usage can become unbalanced, and you need a new round of architecture review so the user experience does not degrade.

That’s where latency comes into play.

Latency

Latency refers to the time it takes for information to travel from one point in a system to another. It includes both network latency (the time it takes for data to travel over a network) and processing latency (the time it takes for information to be processed by an application).

By performing calculations close to the data and downsampling in the database, you can reduce overall latency, positively impacting the user experience and the overall system performance metrics, such as response time or throughput rates. It also helps to keep your data clean and consistent across different nodes in your distributed system.
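To make the two components concrete, here is a minimal sketch of a back-of-the-envelope latency model. The method name, its parameters, and every number in the usage lines are hypothetical, chosen only for illustration; real networks add queuing, retries, and protocol overhead that this ignores.

```ruby
# Rough end-to-end latency for one request, in seconds:
# one network round trip, plus the time to push the payload through the
# link, plus the time to process the payload once it arrives.
# All parameter names are illustrative assumptions.
def estimated_latency_seconds(payload_bytes:, bandwidth_bytes_per_s:, round_trip_s:, processing_s:)
  round_trip_s + payload_bytes.to_f / bandwidth_bytes_per_s + processing_s
end

# Hypothetical comparison: shipping raw rows vs. shipping a downsampled result.
raw = estimated_latency_seconds(
  payload_bytes: 10_000_000, bandwidth_bytes_per_s: 5_000_000,
  round_trip_s: 0.1, processing_s: 1.0
)
downsampled = estimated_latency_seconds(
  payload_bytes: 20_000, bandwidth_bytes_per_s: 5_000_000,
  round_trip_s: 0.1, processing_s: 0.01
)
puts format("raw: %.2fs, downsampled: %.2fs", raw, downsampled)
```

The round trip is paid either way; what shrinks when you downsample in the database is the transfer term and the processing left for the client.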

But first, let’s see how data travels.

How Data Travels

It’s almost impossible to list all the computational steps involved in data processing that are hidden and often forgotten by developers, from querying in the database to visualizing data in the system.

Summing it up, everything starts with the low-level steps the database engine takes to bring data tuples from a SQL query.

First, the parser reads your query and transforms it into an abstract syntax tree. If there’s room for simplification, the tree is simplified to make the routes more accessible. The query tree is a set of nodes and child nodes representing paths and constraints where the data comes from, almost like a travel plan.

You can fetch data in many ways, and the planner estimates the cheapest route to get the target data. A plan is chosen in a few milliseconds, and the executor runs the cheapest plan to fetch the data. Once the data tuples are in memory, a serialization step prepares them to travel over the network.

The network speaks its own protocol, which wraps the data into network packets shielded with security layers. At nearly the speed of light, the data packets safely cross under the oceans through optical fibers, taking the most optimized routes to arrive at the target machine as early as possible. Crossing a continent can cost a few hundred milliseconds.

Arriving at the target processing unit also takes extra time: data passes through “boarding gates” that confirm its identity is trustworthy and verify packet consistency, and finally, if it’s still relevant, the unpacking process takes place. Once unpacked, the data is routed to the database client driver, and deserialization starts loading it into the processing unit, which runs another programming language.

We’d need to put the programming language layer under another microscope to analyze how the data is streamed into its context. And the data is not alone there. Taking Ruby as an example, it often arrives in an expensive processing context, bloated by frameworks and other runtime facilities that are optimized for developer productivity but overlook computational resources.

Each programming language has its own focus and benefits. The Energy Efficiency across Programming Languages study can help you dive deeper into the topic. “Table 4. Normalized global results for Energy, Time, and Memory” unifies several benchmark scenarios that can inspire you to make environmentally friendly choices. You’ll notice that C and Rust, in which PostgreSQL and hyperfunctions are respectively written, are incredibly energy efficient: they can do more while consuming fewer CPU cycles.

Downsampling in the Database: How the Timescale Hyperfunctions Can Help

We created hyperfunctions (which are provided by the TimescaleDB Toolkit extension and come pre-installed in Timescale) to bring data analysis superpowers to SQL. More data processing power and speed help you process significant amounts of data more quickly and accurately.

Hyperfunctions are a set of mathematical functions and algorithms written in Rust and packaged as a PostgreSQL extension. They work incredibly well with Timescale and TimescaleDB, aiming to increase data processing efficiency with a first-class PostgreSQL experience.

The bundled functions are available in the database and can be combined with standard SQL queries. So, instead of allocating resources from your application and competing with users trying to access the system, data processing can be delegated to the database and easily scaled with database replicas.

Adding data processing features to the application layer can make development challenging, interfering with other development tasks and feature deployments. Moving the analysis to the database gives data analysts more flexibility and relieves the application workload, cutting I/O wait time and thread context switching. Finally, it also reduces the network traffic between the application and the database.

The challenge is more about changing the team’s mindset than adding a new layer of complexity.

Comparing Downsampling Data in Ruby vs. Timescale

It’s time to jump to a practical scenario. Let’s see how data processing in Ruby compares to doing it in the database—analyzing how the processing decisions can affect the application performance and user experience.

The scenario we’re testing here is the Largest Triangle Three Buckets function, a downsampling method that tries to retain visual similarity between the downsampled data and the original dataset. If you’re curious about the LTTB algorithm and its Ruby implementation, check the LTTB tutorial that implements the Ruby code from scratch.
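For readers who want the gist without leaving this page, here is a minimal sketch of LTTB in plain Ruby. It is not the code from the tutorial or the benchmark; it assumes the input is an array of numeric [x, y] pairs already sorted by x (timestamps would need converting to numbers first), and the method name is our own choice.

```ruby
# Largest Triangle Three Buckets: keep `threshold` points that preserve the
# visual shape of `data`, an array of numeric [x, y] pairs sorted by x.
def lttb(data, threshold)
  n = data.size
  # Nothing to do when the series is already small enough, or when the
  # threshold leaves no room for buckets between the first and last point.
  return data.dup if threshold >= n || threshold < 3

  bucket_size = (n - 2).to_f / (threshold - 2)
  sampled = [data.first] # the first point is always kept
  a = 0                  # index of the most recently selected point

  (threshold - 2).times do |i|
    # Average point of the next bucket: the third vertex of the triangle.
    next_start = ((i + 1) * bucket_size).floor + 1
    next_end = [((i + 2) * bucket_size).floor + 1, n].min
    next_bucket = data[next_start...next_end]
    avg_x = next_bucket.sum { |x, _| x } / next_bucket.size.to_f
    avg_y = next_bucket.sum { |_, y| y } / next_bucket.size.to_f

    # Candidate indexes in the current bucket (end is exclusive).
    range_start = (i * bucket_size).floor + 1
    range_end = ((i + 1) * bucket_size).floor + 1

    # Pick the candidate forming the largest triangle with the previously
    # selected point and the next bucket's average. The constant factor 1/2
    # of the area formula is dropped because only the ordering matters.
    ax, ay = data[a]
    best = (range_start...range_end).max_by do |j|
      x, y = data[j]
      ((ax - avg_x) * (y - ay) - (ax - x) * (avg_y - ay)).abs
    end

    sampled << data[best]
    a = best
  end

  sampled << data.last # the last point is always kept
end
```

Calling `lttb(points, 500)` on a 400,000-point series returns 500 of the original points, including the first and last. Note that the whole series has to be in the Ruby process before this method can run, which is exactly the cost we want to measure.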

While most frameworks implement the code in the front end, Timescale provides an implementation that takes (timestamp, value) pairs, sorts them if needed, and downsamples the values directly in the database.
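As a rough sketch of what the database side can look like, the Ruby helper below builds a query around the Toolkit’s `lttb` aggregate and `unnest`, which expands the result into `time` and `value` columns. The helper name and the table and column names in the usage note are hypothetical; they are not taken from the benchmark application.

```ruby
# Build a SQL query that downsamples a series inside the database using the
# TimescaleDB Toolkit `lttb` aggregate. Identifiers are interpolated as-is,
# so they must come from trusted code, never from user input.
def lttb_query(table:, time_column:, value_column:, points:)
  <<~SQL
    SELECT time, value
    FROM unnest((
      SELECT lttb(#{time_column}, #{value_column}, #{Integer(points)})
      FROM #{table}
    ))
  SQL
end
```

For example, `lttb_query(table: "weather", time_column: "time", value_column: "temperature", points: 500)` produces a query you could hand to your database driver. Only the 500 resulting rows cross the network, instead of the full series.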

The following animation shows Ruby and SQL endpoints downsampling 400,000 records from an open weather dataset—downsampling 40 years of Vienna temperatures to 500 points for a readable visualization. The data is displayed as it becomes available.

Regardless of the language used, downsampling the pairs in the database would save approximately 13 MB of network traffic: the response shrinks to 17 KB, which is less than 1% of the original payload. By reducing the amount of data we send, we also reduce the amount of data we process at the other end, lowering CPU and memory requirements as well.
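You can get a feel for this effect without a database. The sketch below generates a synthetic hourly series (not the Vienna dataset), serializes it as JSON, and compares the byte size against a 500-point version. It uses a naive every-nth pick as a stand-in for LTTB, since only the point count matters for payload size; all method names are our own, and the exact byte counts will differ from the figures above.

```ruby
require "json"
require "time"

# Bytes needed to ship an array of [timestamp, value] pairs as JSON.
def payload_bytes(pairs)
  JSON.generate(pairs).bytesize
end

# Synthetic hourly readings; the shape of the data is an assumption made
# only to have something to serialize.
def synthetic_series(count, start: Time.utc(1980, 1, 1), step: 3600)
  Array.new(count) do |i|
    [(start + i * step).iso8601, (10 + 10 * Math.sin(i / 1000.0)).round(2)]
  end
end

# Naive stand-in for LTTB: keep the first pair of each evenly sized slice.
def every_nth(pairs, points)
  step = [(pairs.size.to_f / points).ceil, 1].max
  pairs.each_slice(step).map(&:first)
end

# Share of the original payload that the downsampled series occupies.
def payload_ratio(pairs, points)
  payload_bytes(every_nth(pairs, points)).to_f / payload_bytes(pairs)
end

full = synthetic_series(400_000)
puts "full payload: #{payload_bytes(full)} bytes"
puts "500 points:   #{payload_bytes(every_nth(full, 500))} bytes"
puts format("ratio:        %.4f", payload_ratio(full, 500))
```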

When we put it all together, the SQL version powered by hyperfunctions takes only about 17% of the time Ruby does, considering Ruby took 3.5 seconds of processing time to downsample 400,000 points to 500 points. If you were patient enough to watch the GIF until the end, you saw that the zoom feature refreshed and reloaded 500 points again to keep the same data resolution.

With less data, the gap between the two approaches narrows. The more data your system stores, the more advantageous it is to process it closer to the database.

CPU Bound vs. I/O Bound

Deciding whether to downsample in the database is always a trade-off between CPU and I/O. Processing in the database does not make the work disappear; it moves the work to the database server. Depending on whether your application is CPU bound or I/O bound, you can balance your resources better by splitting the work and delegating some processing to the database.

This means that if your database works solely as a storage layer dedicated to persisting data, there’s a high probability that its CPU and memory are underused, and this method lets you put some of that idle hardware to work.

Plus, depending on the requirements of your system, you can process data on replicas with minimal impact on the primary database.
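If you are unsure which side of that trade-off a piece of your application sits on, one simple heuristic is to compare CPU time with wall-clock time for the same block of work. The sketch below uses Ruby’s standard `Benchmark` module; the method name and the 0.5 threshold are arbitrary assumptions, not an established rule.

```ruby
require "benchmark"

# Classify a block of work by the share of wall-clock time spent on the CPU.
# A low share means the process was mostly waiting (network, disk, sleep).
def bound_of(threshold: 0.5)
  tms = Benchmark.measure { yield }
  # tms.total is user + system CPU time; tms.real is elapsed wall-clock time.
  cpu_share = tms.real.zero? ? 1.0 : tms.total / tms.real
  cpu_share >= threshold ? :cpu_bound : :io_bound
end

# `sleep` stands in for waiting on a slow query or network transfer.
puts bound_of { sleep 0.2 }
# A tight numeric loop keeps the CPU busy for most of the elapsed time.
puts bound_of { 2_000_000.times { |i| Math.sqrt(i) } }
```

Wrapping your fetch-and-downsample code in `bound_of` gives a first hint: if it is mostly waiting, shrinking the payload in the database helps; if it is mostly computing, moving the computation to an underused database frees application CPU.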

Timescale + Hyperfunctions = Dizzying Downsampling Speed

The Timescale Team is busy carefully designing hyperfunctions to increase the time-series superpowers that Timescale already delivers to vanilla PostgreSQL. Compression, table partitioning, and continuous aggregates work with most hyperfunctions. Also, several are already parallel-safe, leveraging hypertable chunks to process data even faster.

In conclusion, we’ve seen how data processing can be resource-intensive, and its efficiency is becoming increasingly crucial as datasets grow in size and complexity. Successful businesses will need to process data fast to accelerate data-driven decisions.

Timescale hyperfunctions fill this gap with a large set of statistical functions to empower your data processing layer, allowing better data locality as your calculations get closer to the data itself.

We’ve explored the benefits of downsampling in the database compared to processing in Ruby. We’ve also shown how Timescale optimized hyperfunctions for large datasets and parallel safety. Bringing the data processing closer to the database will not only relieve your system resources but also save you money and give you more and happier users with the same computational power.

We hope we’ve opened your mind to this approach. If you want to try it out, get going quickly with Timescale, our cloud database platform (30-day free trial, no credit card required), and explore hyperfunctions with TimescaleDB.