A Beginner’s Guide to Vector Embeddings

Vector embeddings are essential for AI applications like retrieval-augmented generation (RAG), agents, natural language processing (NLP), semantic search, and image search. If you’ve ever used ChatGPT, language translators, or voice assistants, there’s a high chance you’ve come across systems that use embeddings.

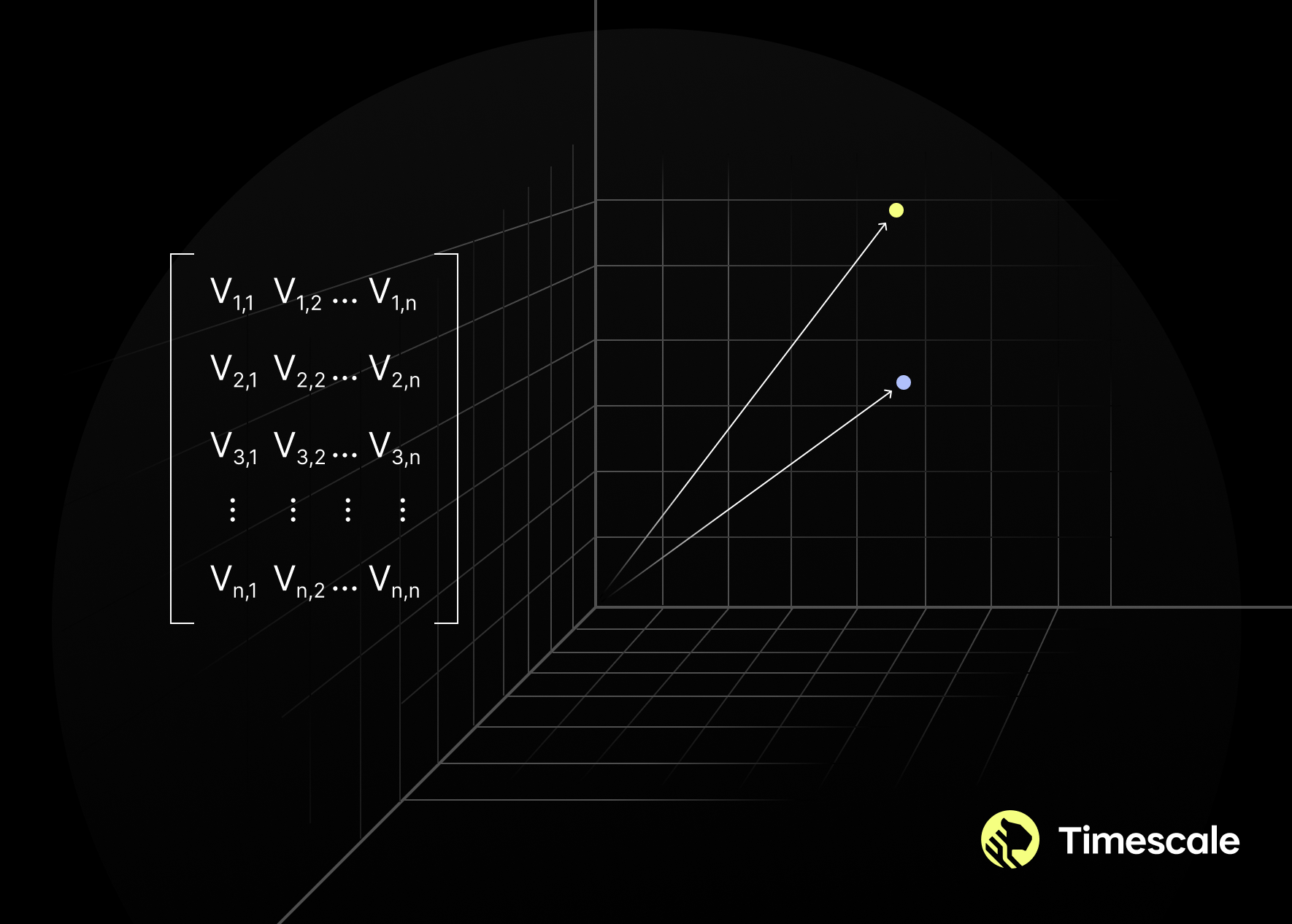

An embedding is a compact representation of raw data, such as an image or text, transformed into a vector of floating-point numbers. It’s a powerful way of representing data according to its underlying meaning. High-dimensional data is mapped into a lower-dimensional space (think of it as a form of “lossy compression”) that captures structural or semantic relationships within the data. This lets embeddings preserve important information while reducing the computational burden of processing large datasets. Embeddings also help uncover patterns and relationships that might not have been apparent in the original space.

Since an embedding model represents semantically similar things close together (the more similar the items, the closer the embeddings for those items are placed in the vector space), you can now have computers search for and recommend semantically similar things and cluster things by similarity with improved accuracy and efficiency.

In this article, we’ll examine vector embeddings in depth, including the types of vector embeddings, how neural networks create them, how vector embeddings work, and how you can create embeddings for your data.

Are vectors and embeddings the same thing?

Although the two terms are often used interchangeably, and both refer to numerical representations in which data points are vectors in a high-dimensional space, they’re not the same thing. A vector is simply an array of numbers in which each number corresponds to a specific dimension or feature, while an embedding is a vector produced to represent data in a structured and meaningful way in a continuous space.

Every embedding is a vector, but not every vector is an embedding. Embedding models are one way of generating vectors, but there are other ways of generating them, too.

What is the difference between indexing and embedding?

Embedding is the process of turning raw data into vectors, which can then be indexed and searched over. Meanwhile, indexing is the process of creating and maintaining an index over vector embeddings, a data structure that allows for efficient search and information retrieval from a dataset of embeddings.
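
To make the distinction concrete, here is a minimal sketch in plain Python. The vectors are made-up stand-ins for the output of an embedding model, and `FlatIndex` is a hypothetical name for the simplest possible index: it stores every vector and scans all of them at query time. Production indexes (for example, HNSW or IVFFlat in pgvector) use approximate structures to avoid a full scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

class FlatIndex:
    """Hypothetical brute-force index: keeps every vector and scans them all."""

    def __init__(self):
        self.ids = []
        self.vectors = []

    def add(self, item_id, vector):
        self.ids.append(item_id)
        self.vectors.append(vector)

    def search(self, query, k=2):
        """Return the ids of the k vectors most similar to the query."""
        scored = [
            (cosine_similarity(query, vec), item_id)
            for item_id, vec in zip(self.ids, self.vectors)
        ]
        scored.sort(reverse=True)
        return [item_id for _, item_id in scored[:k]]

# Step 1 (embedding): made-up vectors standing in for model output.
embedded_docs = {
    "doc-cats": [0.9, 0.1, 0.0],
    "doc-dogs": [0.8, 0.3, 0.1],
    "doc-cars": [0.0, 0.2, 0.9],
}

# Step 2 (indexing): load the vectors into a searchable structure.
index = FlatIndex()
for doc_id, vector in embedded_docs.items():
    index.add(doc_id, vector)

nearest = index.search([1.0, 0.2, 0.0], k=2)
print(nearest)  # ['doc-cats', 'doc-dogs']
```

The embedding step and the indexing step are independent: you could swap the index for a different data structure without re-embedding anything.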

Types of Vector Embeddings

There are many different kinds of vector embeddings, each representing a different kind of data:

- Word embeddings: These are the most common type of embedding and represent individual words in NLP. They’re typically used for capturing semantic relationships between words (such as antonyms and synonyms) and contextual usage in tasks like language translation and modeling, word similarity, synonym generation, and sentiment analysis. They also help enhance the relevance of search results by capturing the meaning of queries.

- Sentence embeddings: These are vector representations of sentences and capture their semantic meaning and context. They’re used for tasks such as information retrieval, text categorization, and sentiment analysis. Sentence embeddings are also important for chatbots, allowing them to better understand and respond to user inputs as well as machine translation services, ensuring that the translations retain the context and meaning of the original sentence.

- Document embeddings: Like sentence embeddings, document embeddings are vector representations of documents like reports or articles. They capture the content and general meaning of the document and are used for tasks like recommendation systems, information retrieval, clustering, and document similarity and classification.

- Graph embeddings: These embeddings represent the edges and nodes of graphs within the vector space and are used for tasks such as node classification, community detection, and link prediction.

- Image embeddings: These are representations of different aspects of visual items like video frames and images. From individual pixels to full images, image embeddings capture visual features and are used for tasks like content-based recommendation systems, image and object recognition, and image search systems.

- Product embeddings: Product embeddings can range from embeddings for digital products like songs and movies to physical products like shampoos and phones. These are useful for product recommendation (based on semantic similarity) and classification systems, and product searches.

- Audio embeddings: These embeddings are a representation of the different features of audio signals, such as the rhythm, tone, and pitch, in a vector format. They’re then used for various applications such as emotion detection, voice recognition, and music recommendations based on the user’s listening history. They’re also essential for developing smart assistants that understand voice commands.

What types of objects can be embedded?

Many kinds of data types and objects can be represented as vector embeddings. Some of the common ones include:

- Text: Documents, paragraphs, sentences, and words can be embedded into numerical vectors using techniques like Word2Vec (for word embeddings) and Doc2Vec (for document embeddings).

- Images: Images can be embedded into vectors using methods like CNNs (Convolutional Neural Networks) or pre-trained image embedding models like ResNet and VGG. These are commonly used in e-commerce applications.

- Audio: Audio signals like music or speech can be embedded into numerical representations using techniques like RNNs (Recurrent Neural Networks) or spectrogram embeddings. They capture auditory properties, making it possible for systems to interpret audio more effectively. Systems that build on learned audio representations include speech recognition services such as OpenAI Whisper and Google Speech-to-Text.

- Graphs: Edges and nodes in a graph can be embedded using techniques like graph convolutional networks and node embeddings to capture relational and structural information. Nodes in a graph represent entities like a person, product, or web page, and each edge represents the connection or link between those entities.

- 3D models and time-series data: Time-series embeddings capture temporal patterns in sequential data and are used for sensor data, financial data, and IoT applications. Their common use cases include pattern identification, anomaly detection, and time-series forecasting. Meanwhile, 3D model embeddings represent different geometric aspects of three-dimensional objects and are used for tasks like form matching, object detection, and 3D reconstruction.

- Molecules: Molecule embeddings that represent chemical compounds are used for molecular property prediction, drug discovery and development, and chemical similarity searching.

How Do Neural Networks Create Embeddings?

Neural networks, including large language models like GPT-4, Llama-2, and Mistral-7B, create embeddings through a process called representation learning. In this process, the network learns to map high-dimensional data into lower-dimensional spaces while preserving important properties of the data. They take raw input data, like images and texts, and represent them as numerical vectors.

During the training process, the neural network learns to transform these representations into meaningful embeddings. This is usually done through layers of neurons (like recurrent layers and convolutional layers) that adjust their weights and biases based on the training data.

The process looks something like this:

Neural networks often include embedding layers within the network architecture. These receive processed data from preceding layers and have a set number of neurons that defines the dimensionality of the embedding space. The weights within the embedding layer are initialized randomly and then updated through techniques like backpropagation. These weights serve as the embeddings themselves: they start out as random values and gradually evolve during training to encode meaningful relationships between input data points. As the network continues to learn, these embeddings become increasingly refined representations of the data.

Through iterative training, the neural network refines its parameters, including the weights in the embedding layer, to better represent the meaning of a particular input and how it relates to another piece of input (like how one word relates to another). Backpropagation adjusts these weights along with the rest of the network’s weights, and the direction of those adjustments depends on the overall task, whether that’s image classification, language translation, or something else.

The training task is essential in shaping the learned embeddings. Optimizing the network for the task at hand forces it to learn embeddings that capture the underlying semantic relationships within the input data.
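
One way to picture an embedding layer is as a lookup table: a weight matrix with one row per input item, where fetching a row is equivalent to multiplying a one-hot vector by the matrix. The sketch below illustrates that idea in plain Python. The three-word vocabulary, the dimension of 3, and the function names are assumptions chosen for illustration, not part of any particular framework.

```python
import random

random.seed(0)  # fixed seed so the sketch is reproducible

vocab = ["good", "bad", "movie"]  # toy vocabulary (illustrative)
embedding_dim = 3                 # neurons in the embedding layer

# The layer's weight matrix: one randomly initialized row per word.
weights = [
    [random.uniform(-0.5, 0.5) for _ in range(embedding_dim)]
    for _ in vocab
]

def embed(word):
    """Embedding lookup: return the weight row for this word."""
    return weights[vocab.index(word)]

def one_hot_times_weights(word):
    """Same result, computed as a one-hot vector multiplied by the weights."""
    one_hot = [1.0 if w == word else 0.0 for w in vocab]
    return [
        sum(one_hot[i] * weights[i][j] for i in range(len(vocab)))
        for j in range(embedding_dim)
    ]

lookup = embed("good")
product = one_hot_times_weights("good")
print(lookup)
print(product)  # same values as the lookup
```

Because the rows are ordinary weights, backpropagation can update them like any other parameter, which is how the random starting values turn into meaningful embeddings.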

Let’s take an example to understand this better. Imagine you’re building a neural network for text classification that determines whether a movie review is positive or negative. Here’s how it works:

- In the beginning, each word in the vocabulary is assigned a random embedding vector. These starting values carry no meaning yet; they’re simply the numerical form that the network will refine. For instance, the vector for the word “good” might be [0.2, 0.5, -0.1], while the vector for the word “bad” might be [0.4, -0.3, 0.6].

- The network is then trained on a dataset of labeled movie reviews. During this process, it learns to predict the sentiment of the review based on the words used in it. This involves adjusting the weights, including the embedding vectors, to minimize errors in sentiment prediction.

- As the network continues to learn from the data, the embedding vectors for words are adjusted to better perform sentiment classification. Words that often appear together in similar contexts, like “good” and “excellent,” end up with similar embeddings, while words with opposite meanings, like “terrible” and “great,” have embeddings that sit further apart, reflecting their semantic relationships.
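
The steps above can be sketched as a toy training loop. This is a deliberately tiny illustration under several assumptions: six made-up reviews, two-dimensional embeddings, a single logistic output unit on top of the averaged word vectors, and plain per-example gradient descent. Real sentiment models are far larger, but the mechanism is the same: the embedding vectors are parameters, and they get nudged along with the other weights.

```python
import math
import random

random.seed(0)  # fixed seed so the run is reproducible

# Toy labeled reviews (1 = positive, 0 = negative); purely illustrative data.
reviews = [
    (["good", "excellent"], 1), (["great", "good"], 1), (["excellent", "great"], 1),
    (["bad", "terrible"], 0), (["terrible", "awful"], 0), (["awful", "bad"], 0),
]
vocab = sorted({word for words, _ in reviews for word in words})
dim = 2

# Randomly initialized classifier weights and word embeddings.
w = [random.uniform(-0.5, 0.5) for _ in range(dim)]
b = 0.0
emb = {word: [random.uniform(-0.1, 0.1) for _ in range(dim)] for word in vocab}

def predict(words):
    """Average the word embeddings, then apply a logistic output unit."""
    avg = [sum(emb[wd][j] for wd in words) / len(words) for j in range(dim)]
    z = sum(w[j] * avg[j] for j in range(dim)) + b
    return 1.0 / (1.0 + math.exp(-z)), avg

def mean_loss():
    """Binary cross-entropy averaged over the toy dataset."""
    total = 0.0
    for words, label in reviews:
        p, _ = predict(words)
        p = min(max(p, 1e-12), 1 - 1e-12)  # guard against log(0)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(reviews)

def cosine(a, b_vec):
    """Cosine similarity between two 2-dimensional vectors."""
    dot = sum(x * y for x, y in zip(a, b_vec))
    return dot / (math.hypot(*a) * math.hypot(*b_vec))

initial_loss = mean_loss()
learning_rate = 0.5
for _ in range(500):
    for words, label in reviews:
        p, avg = predict(words)
        err = p - label  # gradient of the loss with respect to z
        grad_w = [err * avg[j] for j in range(dim)]
        grad_e = [err * w[j] / len(words) for j in range(dim)]
        for j in range(dim):
            w[j] -= learning_rate * grad_w[j]
            for wd in words:
                emb[wd][j] -= learning_rate * grad_e[j]  # embeddings are trained too
        b -= learning_rate * err
final_loss = mean_loss()

sim_same = cosine(emb["good"], emb["excellent"])
sim_opposite = cosine(emb["good"], emb["terrible"])
print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
print(f"good~excellent: {sim_same:.2f}, good~terrible: {sim_opposite:.2f}")
```

After training, the loss should be lower than where it started, and “good” should sit closer to “excellent” than to “terrible,” even though no one told the network which words are synonyms.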

How Do Vector Embeddings Work?

Vector embeddings work by representing features or objects as points in a multidimensional vector space, where the relative positions of these points represent meaningful relationships between the features or objects. As mentioned, they capture semantic relationships between features or objects by placing similar items closer together in the vector space.

Then, distances between vectors are used to quantify relationships between features or objects. Common measures include Euclidean distance, cosine similarity, and Manhattan distance, all of which measure how “close” or how “far” vectors are from each other in the multidimensional space.

Euclidean distance measures the straight-line distance between points. Meanwhile, cosine similarity measures the cosine of the angle between two vectors. The latter is often used to quantify how similar two vectors are, regardless of their magnitudes. The higher the cosine similarity value, the more similar the vectors.
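
For reference, the three measures named above have the following standard definitions for two n-dimensional vectors a and b. Cosine similarity ranges from -1 (opposite directions) to 1 (same direction), while the two distances are zero for identical vectors and grow as the vectors move apart.

```latex
\begin{aligned}
d_{\text{Euclidean}}(\mathbf{a}, \mathbf{b}) &= \sqrt{\sum_{i=1}^{n} (a_i - b_i)^2} \\
d_{\text{Manhattan}}(\mathbf{a}, \mathbf{b}) &= \sum_{i=1}^{n} \lvert a_i - b_i \rvert \\
\text{cosine similarity}(\mathbf{a}, \mathbf{b}) &= \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert}
  = \frac{\sum_{i=1}^{n} a_i b_i}{\sqrt{\sum_{i=1}^{n} a_i^2} \, \sqrt{\sum_{i=1}^{n} b_i^2}}
\end{aligned}
```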

Consider a word embedding space where words are represented as vectors in a two-dimensional space. In this space:

- The word “cat” might be represented as [1.2, 0.8]

- The word “dog” might be represented as [1.0, 0.9]

- The word “car” might be represented as [0.3, -1.5]

In this example, the Euclidean distance between the words “cat” and “dog” is less than the distance between the words “car” and “cat”, indicating that “cat” is more similar to “dog” than to “car.” Meanwhile, the cosine similarity between “cat” and “dog” is also higher than the similarity between “cat” and “car”, which further indicates their semantic similarity.
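
You can check these claims with a few lines of plain Python. The sketch below uses only the standard library and the three example vectors from the text; the function names are illustrative.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """Sum of absolute coordinate differences."""
    return sum(abs(x - y) for x, y in zip(a, b))

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (ignores magnitude)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

cat = [1.2, 0.8]
dog = [1.0, 0.9]
car = [0.3, -1.5]

print(f"{euclidean(cat, dog):.2f}")          # 0.22
print(f"{euclidean(cat, car):.2f}")          # 2.47
print(f"{manhattan(cat, dog):.2f}")          # 0.30
print(f"{manhattan(cat, car):.2f}")          # 3.20
print(f"{cosine_similarity(cat, dog):.2f}")  # 0.99
print(f"{cosine_similarity(cat, car):.2f}")  # -0.38
```

All three measures agree here: “cat” and “dog” are close, while “cat” and “car” are far apart and even point in roughly opposite directions.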

What you can create with embeddings (as a developer)

- Chatbots that use retrieval augmented generation to better answer user queries, generate contextually relevant responses, and maintain coherent conversations.

- Semantic search engines that can retrieve multimedia content, web pages, or documents based on semantic similarity instead of keyword matching for more relevant search results.

- Text classification systems that categorize documents based on phrases and words.

- Recommendation systems that recommend content depending on the similarity of keywords and descriptions.

You can also use embeddings for data preprocessing tasks like language translation, sentiment analysis, normalization, and entity recognition. Plus, embeddings can allow GenAI models to generate more realistic content, whether that’s images, music, or text.

Similarly, you can create application experiences with retrieval-augmented generation.

Embedding-based RAG systems combine the benefits of both large language model (LLM) generation-based and retrieval-based approaches. For instance, in a support assistant application, you can use embeddings to retrieve relevant context related to the customer and then have an LLM generate responses based on the retrieved context to give the customer a more personalized and useful support response.
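
The retrieval half of that support assistant can be sketched in a few lines. Everything here is a stand-in: the snippets, their three-dimensional vectors, and the `retrieve` and `build_prompt` helpers are hypothetical, and the final call to an LLM is left out. In a real system, the vectors would come from an embedding model and the prompt would be sent to the LLM of your choice.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical support snippets with made-up 3-dimensional embeddings.
knowledge_base = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.1]),
    ("You can reset your password from the login page.", [0.1, 0.9, 0.2]),
    ("Premium plans include priority support.", [0.2, 0.2, 0.9]),
]

def retrieve(query_vector, k=1):
    """Return the k snippets whose embeddings are most similar to the query."""
    ranked = sorted(
        knowledge_base,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

def build_prompt(question, context_snippets):
    """Combine retrieved context and the question into one LLM prompt."""
    context = "\n".join(f"- {snippet}" for snippet in context_snippets)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

question = "How long does a refund take?"
query_vector = [0.85, 0.15, 0.05]  # stand-in for the embedded question
context = retrieve(query_vector, k=1)
prompt = build_prompt(question, context)
print(prompt)
```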

How to Create Vector Embeddings for Your Data

Creating vector embeddings of your data is also commonly called vectorization. Here’s a general overview of the vectorization process:

- The first step is to collect the raw data that you want to process. This could be text, audio, images, time series data, or any other kind of structured or unstructured data.

- Then, you need to preprocess the data to clean it and make it suitable for analysis. Depending on the kind of data you have, this may involve tasks like tokenization (in the case of text data), removing noise, resizing images, normalization, scaling, and other data cleaning operations.

- Next, you need to break down the data into chunks. Depending on the type of data you’re dealing with, you might have to split text into sentences or words (if you have text data), divide images into segments or patches (if you have image data), or partition time series into intervals or windows (if you have time series data).

- Once you preprocess the data and break it into suitable chunks, the next step is to convert each chunk into a vector representation, a process known as embedding.
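
For text, the preprocessing and chunking steps above might look like the following sketch. The sentence splitter is intentionally naive (it breaks on punctuation followed by whitespace), and the chunk size and overlap are arbitrary values chosen for illustration; the right settings depend on your data and embedding model.

```python
import re

def split_sentences(text):
    """Naive sentence splitter: break after ., !, or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def chunk_sentences(sentences, chunk_size=2, overlap=1):
    """Group sentences into windows of chunk_size that share `overlap` sentences."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(sentences), step):
        chunks.append(" ".join(sentences[start:start + chunk_size]))
        if start + chunk_size >= len(sentences):
            break  # the last window already reached the end of the text
    return chunks

raw = "  Embeddings map data to vectors.   Similar items land close together. Indexes make search fast.  "
cleaned = re.sub(r"\s+", " ", raw).strip()  # preprocessing: normalize whitespace
chunks = chunk_sentences(split_sentences(cleaned), chunk_size=2, overlap=1)
for chunk in chunks:
    print(chunk)
```

Overlapping chunks repeat a little text, but they reduce the chance that a sentence loses its surrounding context at a chunk boundary. Each resulting chunk is what you would pass to the embedding model in the final step.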

While different techniques are used to embed different kinds of data, you usually call an embedding model via an API to create vector representations:

- For text data, some popular choices are OpenAI’s text-embedding-3 models, Google Gemini’s textembedding-gecko models, and Cohere’s Embed models. Other options include SentenceTransformers, FastText, GloVe, and Word2Vec, which all create vector representations of words and sentences.

- For image data, CNNs like VGG and Inception or CLIP from OpenAI can be used to extract feature vectors from images.

- For audio, you can convert the signal into a spectrogram and extract feature vectors from it.

If your data is already in PostgreSQL, you can simply use pgvectorizer, which is part of Timescale’s Vector Python library and makes it simple to manage embeddings. In addition to creating embeddings from data, pgvectorizer keeps the embedding and relational data in sync as data changes, allowing you to leverage hybrid and semantic search within your applications.

Example: Creating text embeddings using OpenAI’s text-embedding API

Before you start, you first need to head over to the OpenAI website and create your API key.

Then, to create your own embeddings, make sure you install the OpenAI Python package.

!pip install openai

Next, import the OpenAI class from the module:

from openai import OpenAI

Next, create an instance of the OpenAI class (we’ll call it client) and initialize it with your API key to authenticate and authorize access to the API:

client = OpenAI(api_key="YOUR_API_KEY")

Then, as defined in the API docs, we use the client.embeddings.create() method to generate embeddings for a given text. We also need to specify the model, enter the input, and choose the encoding format before sending the request:

# Generate an embedding for a given input sentence
response = client.embeddings.create(
    model="text-embedding-3-small",
    input="How to create vector embeddings using OpenAI",
    encoding_format="float"
)

# Extract the embedding vector (the client returns an object, not a dict)
embedding = response.data[0].embedding

The result is the vector embedding for the provided text: a list of floating-point numbers (1,536 of them by default for text-embedding-3-small).
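
Separately from the single request above, you will often want to embed several chunks at once. The embeddings endpoint also accepts a list of strings as `input`, so a small helper can send one request and return the vectors in input order. The helper name `embed_texts` and the offline stand-in client below are assumptions made for illustration; the stand-in mimics only the attributes the helper reads, so the sketch runs without an API key.

```python
from types import SimpleNamespace

def embed_texts(client, texts, model="text-embedding-3-small"):
    """Embed a list of strings in one request; return vectors in input order."""
    response = client.embeddings.create(model=model, input=texts)
    # Each item carries an `index` tying it back to its position in `texts`.
    ordered = sorted(response.data, key=lambda item: item.index)
    return [item.embedding for item in ordered]

# Offline stand-in so the sketch runs without network access.
# It returns made-up two-dimensional vectors, not real embeddings.
def _fake_create(model, input):
    data = [
        SimpleNamespace(index=i, embedding=[float(len(text)), 0.0])
        for i, text in enumerate(input)
    ]
    return SimpleNamespace(data=data)

fake_client = SimpleNamespace(embeddings=SimpleNamespace(create=_fake_create))
vectors = embed_texts(fake_client, ["cat", "horse"])
print(vectors)  # [[3.0, 0.0], [5.0, 0.0]]

# With the real client you would instead write:
#   from openai import OpenAI
#   client = OpenAI(api_key="YOUR_API_KEY")
#   vectors = embed_texts(client, ["first chunk", "second chunk"])
```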

How to Store Vector Embeddings

Traditional databases are not enough to handle the complexity of vector data, making it difficult to analyze it and extract meaningful insights. This is where vector databases come in—these are specialized databases designed to handle vectors and can efficiently store and retrieve vector embeddings.

A great way to store vector embeddings is using PostgreSQL since it allows you to combine vector data with other kinds of relational and time-series data, like business data, metrics, and analytics. While PostgreSQL wasn’t originally designed for vector search, extensions like pgvector make it possible to store vectors in a regular PostgreSQL table in a vector column, along with metadata. It’s a powerful extension for storing and querying vectors and enables applications like RAG, NLP, computer vision, semantic search, and image search.
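
As a rough sketch of what this looks like in practice, the snippet below defines the SQL for a pgvector-backed table and two small helpers. The table name `documents`, the helper names, and the three-dimensional column are assumptions for illustration. The helpers expect a database cursor that uses `%s` placeholders (as psycopg2 does) and are not tied to a live connection here.

```python
def to_pgvector_literal(embedding):
    """Format a list of numbers as pgvector's text form, e.g. '[0.1,0.2,0.3]'."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"

CREATE_SQL = """
CREATE EXTENSION IF NOT EXISTS vector;
CREATE TABLE IF NOT EXISTS documents (
    id        bigserial PRIMARY KEY,
    content   text NOT NULL,
    embedding vector(3)  -- use your model's dimension, e.g. 1536
);
"""

INSERT_SQL = "INSERT INTO documents (content, embedding) VALUES (%s, %s::vector)"

# `<=>` is pgvector's cosine distance operator (smaller means more similar).
SEARCH_SQL = """
SELECT content
FROM documents
ORDER BY embedding <=> %s::vector
LIMIT %s
"""

def store_document(cursor, content, embedding):
    """Insert one row holding the text and its embedding."""
    cursor.execute(INSERT_SQL, (content, to_pgvector_literal(embedding)))

def search_documents(cursor, query_embedding, limit=5):
    """Return the contents of the rows closest to the query embedding."""
    cursor.execute(SEARCH_SQL, (to_pgvector_literal(query_embedding), limit))
    return [row[0] for row in cursor.fetchall()]

literal = to_pgvector_literal([0.1, 2, -3.5])
print(literal)  # [0.1,2.0,-3.5]
```

Because the embedding lives in an ordinary column, the same query can also filter or join on metadata stored alongside it.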

Start Creating Vector Embeddings Today

You now know everything you need to know to get started with creating vector embeddings. In this article, we’ve talked about what vector embeddings really are, their types, how they work, and how neural networks create embeddings.

You now also know that applications involving LLMs, semantic search, and GenAI all rely on vector embeddings to power them, making it important to learn how to create, store, and query vector embeddings.

If you’re ready to get started, store your vector embeddings with pgai on Timescale.

Sign up today for a free 90-day trial.