How to Reduce Bloat in Large PostgreSQL Tables

Written by Dylan Paulus and Carlota Soto

When dealing with large Postgres tables, it becomes increasingly important to adopt good Postgres maintenance practices to keep your performance high. Among all the PostgreSQL performance tuning you can do, there’s a very particular aspect related to Postgres storage that should be top of your list: monitoring and reducing table bloat.

Table bloat is a silent killer in large PostgreSQL tables, especially in highly transactional production databases. If left unmonitored, it will progressively slow down your queries and consume your disk space, so it is essential to deal with it when scaling Postgres.

In this article, we will explore how you can do this by introducing the vacuum and autovacuum process and discussing how you can monitor and reduce bloat regularly. We also explain how bloat relates to partitioned tables, including special advice.

Optimizing Large PostgreSQL Tables: What Is Table Bloat?

In a previous article on this topic, we explained how table bloat essentially refers to the excess space consumed by a table in a PostgreSQL database beyond what is actually necessary to store its data and indexes. This implies that when you’ve been running transactions on a PostgreSQL table for a while, it may start to get inflated (or bloated), taking more disk space than it’s supposed to.

This happens due to the way PostgreSQL manages data modifications. PostgreSQL uses a mechanism called Multi-Version Concurrency Control (MVCC) which ensures that while one transaction is making changes to a table, other transactions can still read the previous version of the table. Instead of overwriting the old data, PostgreSQL keeps a new version of the row and marks the old one as "dead". Over time, as transactions accumulate, these dead rows (or dead tuples) start to take up space, leading to table bloat.

The MVCC system has some advantages, but it’s fair to say that it is not one of Postgres' most brilliant mechanisms. It’s definitely one of our least favorite things about Postgres, and we’re not alone.

So, table bloat primarily occurs in two ways:

When a table contains a large number of dead or unused tuples

When a table has a bunch of allocated pages and the tuples inside those pages are heavily fragmented

Why Is Table Bloat an Issue?

Thanks for the question.

There are several reasons why table bloat should be on your radar if you’re scaling PostgreSQL or running large transactional PostgreSQL tables:

Table bloat causes performance degradation. Dead tuples get stored alongside live tuples. During query execution, PostgreSQL has to sift through more data blocks, many of which are filled with dead tuples, causing increased I/O and slower queries.

Indexes also become bloated, leading to even slower lookup times. Indexes, at their core, are data structures that provide a fast path to rows in a table based on the values of one or several columns. When rows are updated or deleted in a table, the associated indexed values are not immediately purged from the index. Instead, similar to the MVCC mechanism for tables, these outdated index entries are marked as invalid but are not immediately removed. Over time, as more rows are modified and deleted, these "dead" index entries accumulate and contribute to index bloat.

Wasted disk space. Dead tuples and excessive page allocations also cause your disk usage to be higher than needed, meaning more infrastructure costs.

Operational challenges. Everything slows down if your table is bloated, as it’s essentially larger—e.g., if you're using replication, bloated tables can cause replication lag since the replication process needs to handle more data than necessary; backing up a bloated table takes longer and requires more storage; restoring from a backup can become more time-consuming; and so on.

What Increases Table Bloat?

As we said previously, table bloat is, unfortunately, a natural consequence of how PostgreSQL manages data. But it is also true that certain workload patterns lead to more bloat than others, for example:

High volume of UPDATEs/DELETEs. Tables with frequent updates can quickly accumulate these dead rows since when a row is updated in Postgres, a new version of the row is created, and the old version is marked as "dead". Similarly, when rows are deleted, they aren't immediately removed from the table. Instead, they're marked for deletion and later removed by the autovacuum process (more about autovacuum later).

Bulk INSERTs followed by DELETEs. Batch operations, like inserting a large number of rows followed by deleting many of them, can lead to rapid bloat accumulation. This is especially true if these operations are frequent and autovacuum doesn't have enough time or resources to clean up between these batches.

Long-running transactions. Long-running transactions can prevent dead rows from being vacuumed. Until a transaction is complete, the rows that were modified or deleted during that transaction cannot be vacuumed, even if they're no longer needed. This can lead to a temporary buildup of bloat.

How to Monitor Table Bloat

Before starting to talk about how to reduce bloat, you’ll want to know how your tables are doing bloat-wise. One of the most popular and efficient tools for this purpose in PostgreSQL is pgstattuple:

CREATE EXTENSION pgstattuple;

Once installed, you can use the pgstattuple function to get an overview of a particular table:

SELECT * FROM pgstattuple('your_table_name');

This query will return several key metrics:

table_len: Total size of the table/index.tuple_count: Number of tuples (rows) in the table.tuple_len: Total size of all tuples.dead_tuple_count: Number of dead tuples, which contribute to bloat.dead_tuple_len: Total size occupied by dead tuples.

With these metrics in hand, you can calculate the percentage of bloat as follows:

(bloat_size / table_size) * 100

Where:

bloat_size=table_len-tuple_lentable_size=table_len

Apart from pgstattuple, you can follow other methods to monitor your Postgres bloat, for example:

pg_stat_user_tablesprovides statistics about accesses to user tables, which can help indirectly identify bloat. If you observe thatseq_scanis increasing for a table, it might be an indication that the table's indexes are not performing optimally due to bloat.There are several custom scripts and third-party tools that utilize system views and tables to estimate table bloat. For instance, the check_postgres script provides a bloat option to measure and report table and index bloat.

Several graphical user interface tools and platforms, like pgAdmin, offer built-in features or integrations to monitor table and index bloat visually.

While monitoring bloat, remember that not all dead tuples are an indication of inefficiency—the presence of some dead rows is a natural consequence of PostgreSQL's MVCC design. It's the excessive accumulation of dead rows that needs attention. To determine what “excessive” means for your particular table will require some observation.

Reducing Table Bloat: Meet VACUUM

Now, it’s time to talk about how to minimize bloat as much as possible.

Vacuum is a maintenance operation in PostgreSQL that does the following:

Cleans dead tuples

Updates data statistics to optimize the query planner

Updates the visibility map to speed up indexes

As you can see, vacuum is essential for so many things! For this article, we'll stick to number one, a.k.a. how vacuum reduces table bloat by removing dead tuples.

To understand vacuum, a handy analogy is garbage collection in dynamic programming languages. The concept is similar, although different—in PostgreSQL, the dead tuples that get vacuumed don't get freed to your operating system. A table will still own the memory allocated in a page, but removing the dead tuples allows PostgreSQL to reuse that memory.

To manually run vacuum, execute VACUUM to vacuum every table and materialized view in the current database. Alternatively, we can provide a table name to run vacuum only on a single table, VACUUM [table_name].

But as we’ll see later in the post, PostgreSQL comes with a background service, enabled by default, that automatically runs vacuum for us when a vacuum_threshold is reached called autovacuum. Configuring autovacuum is a big part of managing bloat effectively once your tables start getting large.

But what about bloat caused by fragmented pages?

Vacuum fixes our first offender of table bloat by reclaiming dead tuples. But what about the second offender, fragmented pages? Vacuum by itself won't reorder tuples in pages to defragment them. It also won't delete pages that become unused by a table.

You could accomplish that by running VACUUM FULL, but this function might cause you bigger problems. VACUUM FULL not only reclaims the space from dead tuples but also compacts the table and attempts to reduce its size on disk. It creates a new copy of the table, copying over only the live tuples, and then replaces the old table with the new one. This operation releases the reclaimed space back to the operating system.

The main problem with VACUUM FULL is locking. A plain VACUUM operation is non-blocking; it doesn't lock out writers (INSERT, UPDATE, DELETE operations) when it's running. On the contrary, VACUUM FULL introduces an ACCESS EXCLUSIVE lock, which prevents anyone from reading or writing to the table. In a production-grade app, this would cause an outage!

To solve our excessive page issue, you could consider using an alternative tool like pg_repack.

VACUUM considerations

Running vacuum is not free. To quote the official PostgreSQL documentation, "VACUUM causes a substantial increase in I/O traffic, which might cause poor performance for other active sessions." Since VACUUM can be I/O-intensive, it's essential to schedule it during periods of low database activity or off-peak hours to minimize its impact on other operations.

Achieving the right frequency and type of vacuuming is a balancing act. Vacuum too often, and you risk slowing down the system with excessive I/O operations. Vacuum too infrequently, and the accumulation of dead tuples can degrade performance and consume disk space. Maintaining a healthy vacuum cadence requires a balancing act many PostgreSQL users critique.

As we’ll see in a minute, PostgreSQL has an autovacuum process that runs in the background, trying to automate the cleanup of dead tuples. But it's still essential to monitor and potentially adjust its settings, ensuring it's not too aggressive or too lax.

Configuring Autovacuum for Optimal Bloat Management in Large Postgres Tables

PostgreSQL comes with a background service called autovacuum that automatically runs vacuum for you when a vacuum_threshold is reached. We’ll talk more about this parameter later.

Fine-tuning autovaccum's configuration is crucial in reducing and maintaining table bloat. Since PostgreSQL 8.3, autovacuum is enabled by default in all PostgreSQL databases, but the default configuration is very conservative. This was set this way to support legacy systems. If you have large PostgreSQL tables with frequent updates and deletes, you most likely should change the autovacuum default configuration.

To do so, you have the option to change the autovacuum configuration globally for the whole PostgreSQL instance through the postgresql.conf file. It can be found by executing the SQL SHOW config_file or psql -U postgres -c 'SHOW config_file' from the terminal.

Alternatively, you can change the autovacuum configuration per table—this is the recommended route. Each table in your database will have different dead tuple activity, therefore benefiting from different autovacuum settings.

Increasing autovacuum frequency in large PostgreSQL tables

One of the critical decisions autovacuum must make is when to initiate the vacuuming process for a particular table. As we mentioned previously, this decision is guided by the vacuum_threshold, which is dynamically calculated using the table's characteristics and some pre-set configurations.

The formula for calculating the vacuum_threshold is:

vacuum_threshold = autovacuum_vacuum_threshold + autovacuum_vacuum_scale_factor * number of tuples

Where:

autovacuum_vacuum_threshold: This configuration value sets a baseline for the minimum number of tuples that must be updated or deleted before vacuuming is considered for a table. Its default value is 50, meaning that even if no specific percentage of the table has changed, once 50 tuples are modified, it's worth considering a vacuum operation.

autovacuum_vacuum_scale_factor: This is a multiplier that ties the decision to vacuum to the size of the table. It determines the percentage of the table (in terms of tuples) that needs to be updated or deleted to trigger a vacuum. With a default value of 0.2, or 20%, it implies that if one-fifth of a table's tuples are dead, it becomes a candidate for vacuuming.

You might need to reconfigure autovacuum_vacuum_scale_factor for large tables if table bloat is growing more than expected. But to understand how, it’s worth digging a little into the implications of how the scale factor works.

The key is that autovacuum_vacuum_scale_factor, being a percentage, scales with the table size. For example, with a default scale factor of 0.2 (20 %), a table containing just 100 rows would require only 20 changes (either updates or deletions) to trigger autovacuum. This seems quite reasonable.

However, consider a substantially larger table with one million rows. Applying the same 20 % scale factor means a whopping 200,000 changes would be required before autovacuum steps in. During this time, dead tuples (remnants of old, deleted, or updated data) could accumulate considerably.

To counter this, you might consider reducing the autovacuum_vacuum_scale_factor for larger PostgreSQL tables. By setting it to a smaller decimal, like 0.01, it means autovacuum would be initiated after just 1 % of the table has changed. In the context of our one-million-row table, this means after 10,000 changes, not 200,000.

To change autovacuum_vacuum_scale_factor for a single, large table, you would run something like this:

ALTER TABLE [table_name] SET (autovacuum_vacuum_scale_factor = 0.01);

While you might be tempted to set a very low scale factor to keep tables pristine, remember that vacuuming operations also consume system resources. Striking a balance is vital, as we said previously. The goal is to minimize table bloat without causing undue strain on the system by vacuuming too frequently.

Autovacuum troubleshooting in Postgres

At times, even after tweaking parameters like the autovacuum_vacuum_scale_factor, you might encounter issues where autovacuum still can't keep up with table bloat, or it’s slower than expected. Let's delve into some reasons why this might occur and the potential solutions.

Cost-based vacuum delay

PostgreSQL incorporates a mechanism to limit the impact of resource-intensive operations like vacuuming and analyzing to avoid disrupting regular database activities. This mechanism is governed by a cost limit counter. When operations accrue a "cost" that reaches this predefined limit, the associated process is temporarily stopped to let other operations proceed.

If this is happening, you can try the following solutions:

Turning off autovacuum_vacuum_cost_delay. By setting this parameter to 0 for a specific table, you can effectively disable this delay, allowing the vacuum process to run without interruptions.

ALTER TABLE [table_name] SET (autovacuum_vacuum_cost_delay = 0);

Increasing the cost limit. If turning off the cost delay feels too aggressive, you can instead increase the

autovacuum_vacuum_cost_limitto let autovacuum process more tuples before taking a break. The default isvacuum_cost_limit, which is 200.

ALTER TABLE [table_name] SET (autovacuum_vacuum_cost_limit = 10000);

Handling very large PostgreSQL tables

For particularly large PostgreSQL tables, where the sheer volume of dead tuples is vast, even optimized autovacuum processes might lag. Here, PostgreSQL's ability to run multiple concurrent autovacuum processes comes in handy.

By default, PostgreSQL initiates three autovacuum processes. However, on systems with cores to spare, this number can be increased by raising autovacuum_max_workers to spin up more autovacuum processes.

Unfortunately, unlike the parameters discussed previously, adjusting this one is not as straightforward:

The change can't be made using an

ALTER TABLEcommand. It requires modification in thepostgresql.conffile.PostgreSQL requires a server restart for the change to take effect.

Table Bloat and Postgres Partitioning

Table partitioning is an excellent way of keeping large PostgreSQL tables performant, as we explain here. Timescale helps you to do this automatically via hypertables.

How Postgres partitioning reduces table bloat

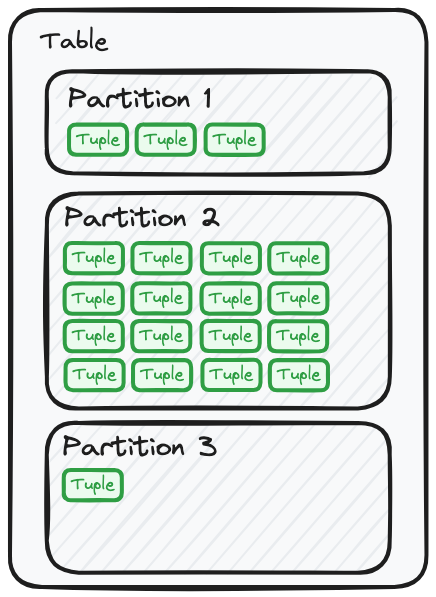

A not commonly discussed benefit of partitions relates to table bloat. Partitions introduce a more efficient method to handle bloat, especially in scenarios involving time-series or bulk data operations. Partitioning essentially divides a table into smaller, more manageable child tables (even if, from a user perspective, they still appear to be a single table).

When operations are performed on a partitioned table, only the relevant partition is impacted. For instance, when inserting data for a specific month in a time-series table, only the partition corresponding to that month is written to. This localized approach can significantly reduce the generation of dead tuples across the entire table.

One of the most compelling advantages of using partitions in terms of reducing bloat is the way deletions are handled. Instead of manually deleting rows, which generates dead tuples, using partitioning allows you to drop entire partitions when data is no longer needed. In terms of reducing bloat, this operation is swift because it involves the removal of a physical file from the storage system. There's no creation of dead tuples.

Also, regular insertions, updates, and deletions can cause table fragmentation over time, but with partitions, each partition maintains its own physical storage, leading to lower fragmentation levels compared to monolithic tables.

Uneven data distribution and table bloat

Just like anything, Postgres partitioning also comes with some nuances. When working with partitioned tables, one must be wary of unbalanced data distribution among the partitions. A well-organized partitioning scheme ensures that data is evenly spread across partitions, but missteps in partition management (e.g., forgetting to create a new partition) can result in uneven data distribution, which has its own set of issues. (Another benefit of using hypertables as your partitioning method is that they avoid these issues from happening.)

An example of uneven distribution in partitioned tables

If one partition becomes a hotspot because of uneven data distribution, it can lead to frequent updates or deletes within that specific partition. This can result in a disproportionate number of dead tuples in that partition compared to others, thus increasing bloat.

If data is unevenly distributed, certain partitions may be vacuumed more frequently, consuming more resources and potentially impacting performance. Conversely, underpopulated partitions might be vacuumed less frequently, letting them accumulate dead tuples over an extended period.

Uneven data distribution can also lead to a phenomenon similar to fragmentation. While it might not be bloated in the traditional sense of dead tuples occupying space, fragmented free space within partitions can be inefficient.

An overloaded partition might see frequent insertions and deletions, leading to free spaces scattered throughout. This can make it harder for PostgreSQL to effectively use this space for new data, causing the partition to grow in size unnecessarily.

Making things easier with hypertables

To minimize the issues related to partition management and bloat, consider using Timescale's hypertables. You’ll no longer need to worry about the uneven distribution downsides laid out above. Since hypertables automatically create partitions (called “chunks'' in Timescale), we don't need to worry about forgetting to create partitions. Time-series data is also effective at fairly evenly distributing itself. Similarly, setting up a data retention policy is an excellent way to drop old chunks automatically, reducing the size of your database without table bloat issues.

In terms of reducing bloat in hypertables by fine-tuning autovacuum, you can apply different autovacuum considerations on a by-chunk basis. Chunks (partitions) are child tables under the hood and, therefore, can be configured independently, allowing you to add more aggressive autovacuum frequencies for those chunks with more frequent updates (e.g., most recent chunks).

To do so, start by retrieving a list of all chunks associated with a hypertable. This will give you an overview of the chunks you might want to configure:

SELECT show_chunks('[hypertable_name]');

Once you have identified the chunks that require configuration adjustments, you can change the autovacuum settings for each chunk. The process is the same as changing configurations for individual tables in PostgreSQL—a.k.a. you can apply the advice we covered in the previous section:

ALTER TABLE [chunk_name] SET (autovacuum_vacuum_cost_limit = 10000);

Conclusion

Table bloat, the often-unseen adversary of optimal PostgreSQL performance, can sneak up and eat up the efficiency of your large tables. By understanding how vacuum works and by fine-tuning your autovacuum parameters, you will be able to keep table bloat in check.