Understanding RDS Pricing and Costs

Amazon Web Services (AWS) offers a vast range of services, including the Relational Database Service (RDS), a fully managed service that simplifies setting up and operating relational databases in the cloud.

However, navigating AWS pricing can be challenging, and RDS pricing is especially complex and often misunderstood.

We are building Timescale on AWS and can relate to the problem of unpredictable billing. More importantly, many of our potential customers come from Amazon RDS for PostgreSQL, so we hear countless stories about RDS costs climbing mysteriously high. As a result, we’ve become quite the experts in RDS pricing.

If you’re considering using RDS, grasping RDS pricing will be crucial to controlling your cloud costs and maximizing your investment. Understanding the numerous variables affecting the final price will allow you to make informed decisions about your database infrastructure.

Drawing from our experience, we will break down RDS pricing in this blog post so you can start understanding and slashing your bills. Our area of expertise is RDS PostgreSQL, so we’ll center this article around it—but you could apply a similar framework to other RDS relational databases.

The Challenges in Understanding RDS Pricing

The RDS PostgreSQL pricing formula builds upon two essential components:

- Database instances

- Database storage

Additionally, your bill will include extra charges for the following:

- Backup storage

- Snapshot export

- Data transfer

- Technical support

- Multi-Availability Zone (AZ) deployments

- And other extra features (like Amazon RDS Proxy for connection pooling and Amazon RDS Performance Insights for performance diagnostics)

Lastly, RDS offers two billing options: on-demand and reserved instances. On-demand instances are billed per hour with no long-term commitments. Reserved instances require a one- or three-year commitment but offer significant discounts compared to on-demand pricing.

We’ll focus on on-demand instances for simplicity, but a similar pricing model would apply to your reserved instances.

Now, let’s break down each one of these elements.

Estimating Your RDS Pricing

RDS instance types

Let’s start with RDS database instances. This bill element defines the resources allocated to your database, including CPU and memory. The more resources you provision, the higher the cost. To estimate RDS pricing accurately, you’ll have to consider the resources your workload requires while selecting the instance size that works best for you.

RDS PostgreSQL offers a vast catalog of instances. Depending on your workload's needs, you can pick:

- Newer generation RDS instance types, like the AWS Graviton-based instances. These offer better performance at lower costs than previous generations.

- T-family instances. These burstable instances are most suitable for fluctuating workloads with periods of low activity followed by sudden spikes. They accumulate CPU credits during low utilization and spend them during high-demand periods, allowing cost savings while maintaining performance during traffic spikes.

- Memory-optimized instances. Consider them for applications that need to load large numbers of rows into memory. These often provide better performance for data-intensive applications.

- Typically, if you don’t have special instance needs, general-purpose (M-family) instances are sufficient; they offer a balanced mix of compute, memory, and storage resources for various workloads.

RDS instances are billed per hour, and the price varies per region, so select your region appropriately when evaluating this cost.

Also, as mentioned earlier, RDS instances are Single-AZ by default. You’ll be charged extra if you prefer a Multi-AZ deployment with one or two standbys for increased availability.
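To see how hourly billing and Multi-AZ add up, here is a minimal Python sketch. The hourly rate is a hypothetical input you would look up for your instance class and region, the 730-hour month is a common convention for monthly AWS estimates, and treating a one-standby Multi-AZ deployment as twice the Single-AZ instance charge is a simplifying assumption to check against the current RDS pricing page.

```python
HOURS_PER_MONTH = 730  # convention commonly used for monthly AWS estimates


def monthly_instance_cost(hourly_rate_usd: float, multi_az: bool = False,
                          hours: float = HOURS_PER_MONTH) -> float:
    """Estimate the monthly on-demand charge for one RDS instance.

    hourly_rate_usd is a hypothetical input: look up the on-demand price for
    your instance class and region. Assumption: a Multi-AZ deployment with one
    standby is billed at roughly twice the Single-AZ rate.
    """
    if hourly_rate_usd < 0 or hours < 0:
        raise ValueError("rate and hours must be non-negative")
    copies = 2 if multi_az else 1  # primary plus (optionally) one standby
    return hourly_rate_usd * hours * copies


# Hypothetical $0.50/hour instance class
print(monthly_instance_cost(0.50))                 # 365.0
print(monthly_instance_cost(0.50, multi_az=True))  # 730.0
```

Lowering `hours` also shows why stopping instances you don’t use lowers the compute part of the bill.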

Our tip: Managed databases make it easy to scale up resources as you grow. If you’re unsure how much CPU or memory you’ll require, it’s better to start small, run some tests, and scale up if necessary. It could save you some money!

RDS storage

RDS database storage is charged per GB provisioned. The price per GB varies per region and increases for multi-AZ deployments.

RDS also allows you to pick between different storage types, each with its own price:

- General Purpose (SSD) Storage: this option suits most workloads and offers a cost-effective solution with a solid performance baseline. You can choose between two SSD storage types, gp2 and gp3, with gp3 being the latest generation in this family. gp3 provides a baseline of 3,000 IOPS and 125 MiBps and can scale up to 64,000 IOPS and 4,000 MiBps, depending on the storage provisioned. It is designed to deliver its provisioned performance 99% of the time and is ideal for small to medium-sized databases, development environments, and test instances.

- Provisioned IOPS (SSD) Storage: this storage type is designed for I/O-intensive applications that require consistent, high-performance storage. It is ideal for large-scale, mission-critical applications with demanding workloads, such as high-transaction-rate OLTP systems or analytics applications. It lets you provision a specific number of IOPS, providing more predictable performance, and is designed to deliver that performance 99.9% of the time.

Lastly, for backward compatibility, Amazon RDS still supports magnetic storage, letting you allocate from 20 GiB to 3 TiB of magnetic storage for your primary data set.

Our tip: In our experience, most applications do very well with General Purpose gp3 Storage (we certainly recommend it over gp2). If you want to get specific, you can also evaluate your application's performance requirements and consider factors like read/write patterns, IOPS, and storage consistency. If your workload demands consistent high performance and requires greater storage consistency, Provisioned IOPS may be worth the additional cost. But make sure to test the performance of gp3 volumes first—don’t underestimate them.
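The tip above can be written down as a small decision helper. This is a sketch of our rule of thumb, not an AWS sizing tool: it assumes you measure `peak_iops` yourself (for example, by adding the CloudWatch `ReadIOPS` and `WriteIOPS` metrics at peak), and it treats 3,000 IOPS as a conservative gp3 floor, since the actual baseline depends on the storage provisioned. The returned labels are hypothetical.

```python
# Figures quoted in the storage section above.
GP3_BASELINE_IOPS = 3_000
GP3_MAX_IOPS = 64_000


def suggest_storage(peak_iops: int, needs_99_9_consistency: bool = False) -> str:
    """Suggest which storage type to benchmark first.

    peak_iops is your measured peak, e.g. ReadIOPS + WriteIOPS from CloudWatch.
    """
    if needs_99_9_consistency or peak_iops > GP3_MAX_IOPS:
        return "provisioned-iops"  # only here is the extra cost likely justified
    if peak_iops <= GP3_BASELINE_IOPS:
        return "gp3-baseline"      # baseline performance should be enough
    return "gp3-extra-iops"        # provision extra gp3 IOPS and benchmark first


print(suggest_storage(12_000))  # gp3-extra-iops
```

Whatever the helper suggests, benchmark your real workload before committing to Provisioned IOPS.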

Backup storage

In RDS, there is no additional charge for backup storage up to 100% of your total database storage for a region, but if you need additional storage for your backups, it will be billed at $0.095 per GiB-month.

By default, RDS retains automated backups for seven days, but you can extend the retention period to up to 35 days if required.
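Because only the excess over your database storage is billed, the backup charge for a region can be estimated with a one-line formula. In this minimal sketch, the default price is the $0.095 per GiB-month figure quoted above (check your region’s rate), and the storage figures in the example are hypothetical.

```python
def monthly_backup_charge(total_backup_gib: float, total_db_storage_gib: float,
                          price_per_gib_month: float = 0.095) -> float:
    """Estimate the backup-storage charge for one region.

    Backup storage up to 100% of the region's total database storage is free;
    only the excess is billed.
    """
    billable_gib = max(0.0, total_backup_gib - total_db_storage_gib)
    return billable_gib * price_per_gib_month


# Hypothetical region: 500 GiB of database storage, 650 GiB of backups.
print(round(monthly_backup_charge(650, 500), 2))  # 14.25
```

Longer retention periods increase `total_backup_gib`, so raising retention from 7 to 35 days can push you past the free allowance.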

Snapshot export

Manual snapshots let you create point-in-time copies of your database instances. These snapshots can be retained indefinitely and are billed separately from the automated backups. You can also export snapshot data to your own Amazon S3 bucket, which is a potential long-term storage strategy: S3 storage is less expensive, and exported data requires less space because it is converted to the Apache Parquet format.

However, exporting is not free: each export is charged per GB of snapshot size, and the price per GB varies per region.

Data transfer

Data transfer costs are another factor to consider. Understanding the various data transfer scenarios and associated costs will help optimize your expenses.

AWS provides 100 GB of free data transfer out to the internet per month, aggregated across all regions. Data transfer within the same AWS region and AZ, such as between RDS and EC2 instances, is generally free. However, transferring data between different AWS regions incurs costs, and the per-GB rate varies by region.

Data transfer costs are not limited to the running instance either. If you have any process that moves snapshots between regions, these transfers are also subject to data transfer costs.
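To get a feel for internet egress charges, here is a sketch that applies the 100 GB free allowance and then a tiered per-GB rate. The tier sizes and prices below are placeholders, not quoted AWS rates; replace them with the current data-transfer-out rates for your region. Inter-region and cross-AZ transfers are priced separately and are not modeled.

```python
FREE_EGRESS_GB = 100  # monthly free allowance, aggregated across regions

# Placeholder tiers: (GB in this tier, USD per GB); None means "all remaining".
# Replace with the current internet data-transfer-out rates for your region.
EGRESS_TIERS = [(10_240, 0.09), (40_960, 0.085), (102_400, 0.07), (None, 0.05)]


def monthly_egress_cost(gb_out: float, tiers=EGRESS_TIERS,
                        free_gb: float = FREE_EGRESS_GB) -> float:
    """Estimate internet data-transfer-out charges for one month."""
    remaining = max(0.0, gb_out - free_gb)  # simplification: free GB come off the top
    cost = 0.0
    for tier_gb, price_per_gb in tiers:
        if remaining <= 0:
            break
        chunk = remaining if tier_gb is None else min(remaining, tier_gb)
        cost += chunk * price_per_gb
        remaining -= chunk
    return cost


print(round(monthly_egress_cost(1_100), 2))  # 90.0
```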

Technical support

For a production database, you’ll most likely want to account for one of AWS’s Support Plans to get technical help when something goes wrong.

You can pick between different Support tiers, offering various levels of support:

- Developer Support: only recommended for testing or early development.

- Business Support: the lowest tier recommended for production workloads.

- Enterprise On-Ramp: recommended if those production workloads are mission-critical.

- Enterprise Support: delivers quicker response times in case of a major failure.

Our tip: If your database is user-facing or mission-critical, don’t skimp on database support! Hopefully, your production database won’t fail often, but in our experience, database issues are inevitable. When your entire platform is down due to a database failure, you’ll be thankful to be able to open a ticket or get on the phone with somebody who can help you.

Beware of Potential Pricing "Gotchas"

Calculating pricing explicitly

We recommend using the AWS Pricing Calculator to estimate all these costs as accurately as possible. Still, when estimating RDS pricing, it’s vital to watch for potential pricing “gotchas” that can affect your overall costs. These factors may take time to become apparent but can significantly increase your expenses as your traffic and data storage requirements grow.

Remember that your initial cost estimations are based on traffic and data storage assumptions. As these increase, your costs will rise. It's essential to monitor storage and traffic and set up billing alarms to alert you when you're in danger of exceeding your monthly budget.

Similarly, as your data volume grows, you may find yourself in need of more CPU/memory to avoid performance degradation: make sure you have a sustainable path for scalability, especially if you’re dealing with mission-critical, data-intensive applications.

Your CPU, memory, and storage will be the main contributors to your RDS bill, but keep an eye on the “small things.” For example, backups, snapshots, and data transfer fees can add up. Don’t forget you’ll also need to pay extra for RDS Proxy (if you need connection pooling) or to retain Performance Insights data for long-term observability.

Support costs are another factor to consider. AWS support is priced separately and not included in your RDS pricing calculations. Monthly minimums for production-grade plans range from $100 (Business Support) to $15,000 (Enterprise Support), with Enterprise On-Ramp starting at $5,500 per month, so support can become a significant cost. Evaluate your requirements and choose a support plan that fits your needs and budget.
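Paid support fees scale with your total monthly AWS spend (not just RDS), so the minimum is only a floor. The sketch below computes a Business Support fee from a tiered percentage schedule; the tiers reflect our reading of AWS’s published Business Support pricing at the time of writing and should be verified before you rely on them.

```python
import math

BUSINESS_MINIMUM_USD = 100.0
# (upper bound of the tier in USD of monthly AWS spend, rate charged on that slice)
# Assumed schedule; verify against the current AWS Support pricing page.
BUSINESS_TIERS = [(10_000, 0.10), (80_000, 0.07), (250_000, 0.05), (math.inf, 0.03)]


def business_support_fee(monthly_aws_spend: float) -> float:
    """Tiered fee on total monthly AWS usage, with a fixed monthly minimum."""
    fee, lower = 0.0, 0.0
    for upper, rate in BUSINESS_TIERS:
        if monthly_aws_spend <= lower:
            break
        fee += (min(monthly_aws_spend, upper) - lower) * rate  # charge this slice only
        lower = upper
    return max(BUSINESS_MINIMUM_USD, fee)


print(business_support_fee(500))               # 100.0 (minimum applies)
print(round(business_support_fee(20_000), 2))  # 1700.0
```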

Simplified Pricing for Time-Series Data

If you prefer a high-performance PostgreSQL solution in AWS with a pricing model you can actually understand, Timescale can help. Many of our customers have migrated from RDS to Timescale (or are considering it), so we fully recognize the challenges of navigating complex RDS costs.

This is why we provide a simplified pricing model: our bill has only two elements, compute and storage, making your Timescale bill transparent and predictable.

How RDS Costs Spike

Amazon RDS pricing is generally based on the instance type you choose, your region, and the amount of storage and data transfer. Let’s see what can make your costs soar.

Compute capacity

One of the pricing models available for Amazon RDS for PostgreSQL is on-demand instances. With on-demand instances, you pay for the compute capacity you use by the hour. This option suits applications with short-term or unpredictable workloads, as well as testing and development environments.

However, a spike in compute demand may lead you to scale up your instance and leave it scaled up, resulting in wasted spend. Alternatively, you may not be using the most efficient instance type for your workload, which means you’re paying for resources you don’t need.

Increased data transfer

Data transfer costs are incurred when data is transferred between your database and other AWS services or the Internet. Data transfer out to the Internet is priced per GB, with the rate depending on the region and the volume of data transferred.

You can reduce data transfer costs by choosing a region close to your users and other AWS services. For example, you may incur higher data transfer costs if you have users in Europe and your database is hosted in the U.S.

In this case, hosting your database in Europe may be more cost-effective. It is also essential to consider how much data you transfer, as per-GB prices vary with volume. Either way, reducing the data and query results you send out to the Internet is the most reliable way to minimize this cost.

Provisioned IOPS spend

Beyond region-dependent data transfer costs, storage charges differ between RDS’s two main SSD options (General Purpose Storage and Provisioned IOPS Storage). As we saw earlier, Provisioned IOPS Storage, designed for I/O-intensive applications, can be quite expensive.

As an example, you’ll pay $0.10 per provisioned IOPS per month for a Single-AZ deployment in the U.S. East (Ohio) region. This is why we highly recommend you first test the performance of General Purpose gp3 Storage (which is much better than gp2 volumes), as only highly I/O-intensive workloads will need Provisioned IOPS.
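The IOPS component alone adds up quickly. This sketch estimates the monthly Single-AZ cost of a Provisioned IOPS volume; the per-IOPS default is the Ohio figure quoted above, while the per-GB storage price is a required input because it varies by region (the 0.125 in the example is a placeholder).

```python
def provisioned_iops_monthly_cost(iops: int, storage_gb: float, price_per_gb_month: float,
                                  price_per_iops_month: float = 0.10) -> float:
    """Monthly Single-AZ cost of a Provisioned IOPS volume: IOPS plus allocated storage."""
    iops_charge = iops * price_per_iops_month
    storage_charge = storage_gb * price_per_gb_month
    return iops_charge + storage_charge


# 10,000 provisioned IOPS on 500 GB at a placeholder $0.125 per GB-month
print(round(provisioned_iops_monthly_cost(10_000, 500, price_per_gb_month=0.125), 2))  # 1062.5
```

In this example, the IOPS charge ($1,000) dwarfs the storage charge, which is why benchmarking gp3 first is worth the effort.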

Runaway environments

And speaking of testing, make sure you monitor your team’s spend on non-production environments, as forgotten test and development resources can rack up a lot of unnecessary AWS charges.

Reducing Amazon RDS Costs

Now that you know what can drive your RDS costs up, it’s time to get your team together and see where and how you can implement optimizations or judicious cuts.

Here are a few tips.

Get visibility into the problem

Optimize and tune your usage (more on that later) based on actual performance. The concept is simple: to properly optimize your RDS spending, you need to tag your resources and learn where the money is actually going. Effectively tagging and tracking your database resource usage will help you attribute costs to the teams and workloads responsible for them.

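Once you activate your tags as cost allocation tags in the Billing and Cost Management console, you can break down spending by tag (for example, in AWS Cost Explorer) and decide how to manage your resources. Tags can also be used in IAM policies to control who can access them.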

You can tag the following resources:

- Database instances

- Database clusters

- Read replicas

- Database snapshots

- Database cluster snapshots

- Reserved database instances

- Event subscriptions

- And more

To tag your instance, use the Tags tab in the Amazon RDS console and add tags to categorize your resources and begin tracking their consumption.
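If you manage many resources, tagging from a script is less error-prone than clicking through the console. This minimal sketch assumes a boto3 RDS client, whose `add_tags_to_resource` call takes the resource ARN and a list of `Key`/`Value` pairs; the tag keys, values, and ARN in the usage comment are hypothetical.

```python
from typing import Mapping


def to_rds_tags(tags: Mapping[str, str]) -> list[dict[str, str]]:
    """Convert {"key": "value"} pairs into the Key/Value list the RDS API expects."""
    return [{"Key": key, "Value": value} for key, value in tags.items()]


def tag_rds_resource(rds_client, resource_arn: str, tags: Mapping[str, str]) -> None:
    """Attach tags to an RDS resource, such as an instance or a snapshot.

    rds_client is expected to be a boto3 RDS client, e.g. boto3.client("rds").
    """
    rds_client.add_tags_to_resource(ResourceName=resource_arn, Tags=to_rds_tags(tags))


# Usage (requires AWS credentials; the ARN and tags are hypothetical):
# import boto3
# tag_rds_resource(boto3.client("rds"),
#                  "arn:aws:rds:us-east-2:123456789012:db:orders-dev",
#                  {"environment": "dev", "team": "payments"})
```

Remember that tags only appear in cost reports after you activate them as cost allocation tags.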

Setting up AWS billing alarms is another effective measure to save on RDS costs. After enabling billing alerts in the Billing and Cost Management console, you can create CloudWatch alarms on the estimated-charges billing metric to monitor your estimated RDS costs.
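As a sketch, the alarm can be created with the CloudWatch `put_metric_alarm` API on the `EstimatedCharges` metric in the `AWS/Billing` namespace, which is published in us-east-1. The `ServiceName` value `AmazonRDS` is an assumption to confirm against the billing metrics listed in your account; the threshold and SNS topic ARN are hypothetical.

```python
def rds_billing_alarm_params(threshold_usd: float, sns_topic_arn: str,
                             alarm_name: str = "rds-estimated-charges") -> dict:
    """Build put_metric_alarm arguments for an RDS estimated-charges alarm.

    Assumption: RDS charges appear under the ServiceName dimension "AmazonRDS".
    """
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Billing",
        "MetricName": "EstimatedCharges",
        "Dimensions": [
            {"Name": "Currency", "Value": "USD"},
            {"Name": "ServiceName", "Value": "AmazonRDS"},
        ],
        "Statistic": "Maximum",
        "Period": 21600,  # six hours; billing data updates only a few times a day
        "EvaluationPeriods": 1,
        "Threshold": threshold_usd,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g., an SNS topic that emails your team
    }


# Usage (requires AWS credentials; the topic ARN is hypothetical):
# import boto3
# cloudwatch = boto3.client("cloudwatch", region_name="us-east-1")
# cloudwatch.put_metric_alarm(**rds_billing_alarm_params(
#     500.0, "arn:aws:sns:us-east-1:123456789012:billing-alerts"))
```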

Optimize your non-production environments

Use automation tools, such as AWS Systems Manager, to find and enforce constraints on RDS instances in non-production stacks. You can identify these instances through an enforced tagging scheme: simply add an environment tag to all your RDS databases (and terminate those that lack one).

On non-production stacks, you can also flag violations or automatically correct instances that use extra-large instance sizes, Provisioned IOPS, or other services that are costly outside production.
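The checks above can be prototyped before wiring them into an automation tool. This sketch audits the instance records returned by boto3’s `describe_db_instances` (which include `DBInstanceClass`, `StorageType`, `MultiAZ`, and `TagList`). The tag key, the production values, and the "2xlarge and above" threshold are hypothetical policy choices, and the code only reports findings; it does not change or terminate anything.

```python
ENV_TAG = "environment"             # hypothetical tag key; use your own scheme
PRODUCTION_VALUES = {"production", "prod"}
EXPENSIVE_STORAGE = {"io1", "io2"}  # Provisioned IOPS storage types


def is_extra_large(instance_class: str, min_multiplier: int = 2) -> bool:
    """True for classes like db.r6g.4xlarge (the threshold is a hypothetical policy)."""
    size = instance_class.rsplit(".", 1)[-1]
    prefix = size[: -len("xlarge")] if size.endswith("xlarge") else ""
    return prefix.isdigit() and int(prefix) >= min_multiplier


def audit_instances(instances: list[dict]) -> list[tuple[str, str]]:
    """Flag non-production cost violations in describe_db_instances output."""
    findings = []
    for db in instances:
        name = db["DBInstanceIdentifier"]
        tags = {t["Key"]: t["Value"] for t in db.get("TagList", [])}
        env = tags.get(ENV_TAG)
        if env is None:
            findings.append((name, "missing environment tag"))
            continue
        if env.lower() in PRODUCTION_VALUES:
            continue  # production instances are out of scope for this check
        if is_extra_large(db.get("DBInstanceClass", "")):
            findings.append((name, "extra-large instance class outside production"))
        if db.get("StorageType") in EXPENSIVE_STORAGE:
            findings.append((name, "Provisioned IOPS storage outside production"))
        if db.get("MultiAZ"):
            findings.append((name, "Multi-AZ outside production"))
    return findings


# Usage (requires AWS credentials):
# import boto3
# pages = boto3.client("rds").get_paginator("describe_db_instances").paginate()
# instances = [db for page in pages for db in page["DBInstances"]]
# for name, reason in audit_instances(instances):
#     print(name, reason)
```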

Use the correct instance sizes

Size definitely matters when it comes to RDS instances. If you’re looking to bring down costs, you need to right-size your instance so you’re not paying for resources you don’t need or experiencing performance issues.

Here are the steps you should take to use the correct RDS instance size:

- Right-size your instance: There are five instance series in RDS: T and M (general-purpose instances) and R, X, and Z (memory-optimized instances). To choose a suitable instance series, you need to know your database’s memory use, CPU usage, EBS bandwidth, and the network performance supported by your instance type. You can get this information by monitoring your database with CloudWatch metrics, which lets you choose the right instance family.

- Determine the instance's performance: Instances with low CPU utilization (less than 40 percent over four weeks) can be downsized to a smaller instance class. Monitoring CPU consumption with CloudWatch reveals whether you’re paying for CPU you don’t use. If your workload needs memory (for example, to keep its working set cached and read IOPS low) but little CPU, you can switch from an M-series instance to an R-series instance one size smaller: R-series instances offer twice the memory per vCPU, so you get the same memory with half the vCPUs. Since CPU costs more than memory, reducing it can save you a lot of money.

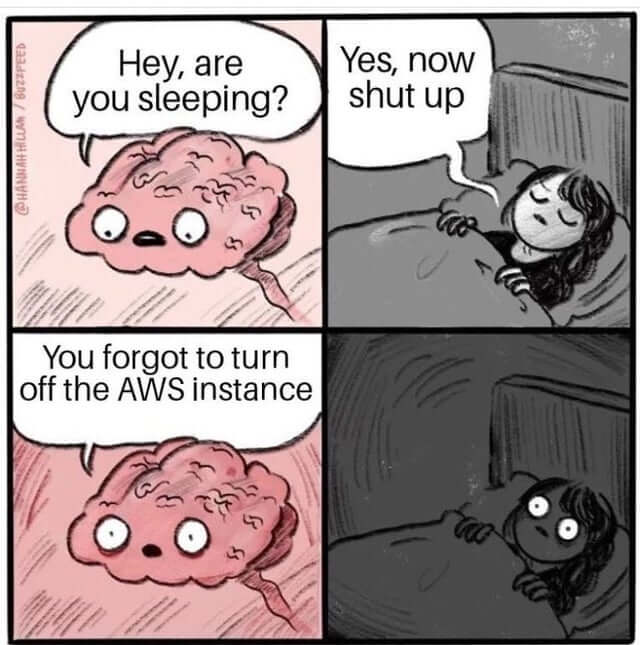

- Turn off idle instances: You can save money by stopping your RDS instances when they are not in use. To do that, monitor when each instance is actually used and stop the ones that sit idle. Keep in mind that you will still pay for provisioned storage and snapshots while an instance is stopped, and that RDS automatically restarts a stopped instance after seven days.
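The right-sizing and idle checks above can be sketched with CloudWatch’s `get_metric_statistics` API and the RDS `CPUUtilization` and `DatabaseConnections` metrics. Two assumptions are ours: we use daily maximum CPU as a conservative reading of "less than 40 percent over four weeks," and we treat zero connections for the whole period as "idle." The instance identifier in the usage comment is hypothetical.

```python
from datetime import datetime, timedelta, timezone

CPU_DOWNSIZE_THRESHOLD = 40.0  # percent, the rule of thumb above
LOOKBACK_DAYS = 28             # "over four weeks"


def fetch_daily_metric(cloudwatch, instance_id: str, metric: str, statistic: str) -> list[float]:
    """Fetch one datapoint per day for an RDS metric (cloudwatch is a boto3 client)."""
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/RDS",
        MetricName=metric,
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": instance_id}],
        StartTime=end - timedelta(days=LOOKBACK_DAYS),
        EndTime=end,
        Period=86_400,  # one datapoint per day
        Statistics=[statistic],
    )
    return [point[statistic] for point in response["Datapoints"]]


def recommend(daily_max_cpu: list[float], daily_max_connections: list[float]) -> str:
    """Classify an instance using four weeks of daily maxima."""
    if not daily_max_cpu:
        return "insufficient data"
    if daily_max_connections and max(daily_max_connections) == 0:
        return "idle: consider stopping"
    if max(daily_max_cpu) < CPU_DOWNSIZE_THRESHOLD:
        return "downsize candidate"
    return "keep"


# Usage (requires AWS credentials; "orders-dev" is hypothetical):
# import boto3
# cw = boto3.client("cloudwatch")
# cpu = fetch_daily_metric(cw, "orders-dev", "CPUUtilization", "Maximum")
# conns = fetch_daily_metric(cw, "orders-dev", "DatabaseConnections", "Maximum")
# print(recommend(cpu, conns))
```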

Purchase reserved instances

A fuss-free way to save money on RDS usage is to purchase reserved instances. These are one- to three-year commitments that can cut costs (starting at 42 percent for one year) compared to the on-demand option, especially if you opt for the longer term and pay upfront.

Still, unless you take the time to right-size your instances, your bill won’t reflect such savings.
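The caveat about right-sizing is easy to quantify: a reservation is paid for whether or not the instance runs, so its savings shrink (or turn negative) when the instance is oversized or partly idle. In this sketch, all prices are hypothetical inputs, and `utilization` is the fraction of hours the instance would otherwise run on demand.

```python
HOURS_PER_MONTH = 730  # convention commonly used for monthly AWS estimates


def reserved_savings_pct(on_demand_hourly: float, ri_upfront: float, ri_hourly: float,
                         term_months: int = 12, utilization: float = 1.0) -> float:
    """Percentage saved by a reservation versus on-demand over the same term.

    A negative result means on-demand would have been cheaper.
    """
    hours = HOURS_PER_MONTH * term_months
    on_demand = on_demand_hourly * hours * utilization  # pay only for hours used
    if on_demand <= 0:
        raise ValueError("on-demand cost must be positive")
    reserved = ri_upfront + ri_hourly * hours  # paid for every hour of the term
    return (on_demand - reserved) / on_demand * 100


# Hypothetical $1.00/hour on-demand versus a $0.58/hour effective reserved rate
print(round(reserved_savings_pct(1.00, 0.0, 0.58), 1))                   # 42.0
print(round(reserved_savings_pct(1.00, 0.0, 0.58, utilization=0.5), 1))  # -16.0
```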

Plan your data transfer routes carefully

As we’ve seen earlier, RDS involves numerous scenarios that incur data transfer costs. But you can avoid some of these fees by planning your data transfer routes and regions very carefully:

- Use Multi-AZ only when necessary: Multi-AZ deployment provides high availability and automatic failover in case of a database instance failure. However, it also comes at an additional cost. If high availability is not critical for your workload, consider using a Single-AZ deployment to reduce cost.

- Disable Multi-AZ where you don’t need it: A Multi-AZ deployment creates a secondary (standby) database instance in another AZ and synchronously replicates data from the primary instance to it. This increases your database’s availability: if the primary fails, RDS fails over to the standby. While this makes your RDS service highly available, you also pay for the standby’s hardware. If you are in a development environment, disabling the feature is probably a good way to save money.

- Remove backups for non-critical RDS instances: If you’re trying to optimize costs, review the automated backups that Amazon RDS creates during your instance’s backup window. Automated backups and manual database snapshots are stored in your Amazon RDS backup storage for each AWS region. If you don’t need a backup, you can save costs by removing it, but remember that AWS only charges for backup storage that exceeds your total database storage in a region. If you do need backups, trim the automated backups according to your organization’s needs (for example, by shortening the retention period) while retaining manual snapshots for critical RDS instances.

- Reduce your data transfers: Reduce traffic to the Internet as much as possible. Inbound traffic is typically free, but outbound traffic is charged. You can also cut costs by minimizing data flow between AZs and across regions; traffic that stays within the same AZ is free. Another option is to use a cheaper region and to process and reduce data in your application’s business layer instead of sending it out raw. You can also compress it before sending it out.

- Consider using CloudFront to send data out to the Internet: its data transfer out rates are generally cheaper. Caching your most frequently accessed assets at AWS edge locations also delivers them faster to end users. Data transfer from AWS origins into Amazon CloudFront is free, while data transfer from CloudFront to the Internet incurs charges.
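Since outbound transfer is billed on the bytes that leave AWS, compressing payloads before sending them can directly cut egress charges. This standard-library sketch measures the effect on a hypothetical, highly repetitive result set; real savings depend on your data, and compression costs some CPU. In practice, you would often let your web server apply `Content-Encoding: gzip` for clients that accept it.

```python
import gzip
import json


def payload_sizes(rows: list[dict]) -> tuple[int, int]:
    """Return (raw_bytes, gzip_bytes) for a JSON-encoded result set."""
    raw = json.dumps(rows).encode("utf-8")
    return len(raw), len(gzip.compress(raw))


# Hypothetical, highly repetitive query result (typical of time-series rows).
rows = [{"sensor_id": i % 10, "status": "ok", "reading": 21.5} for i in range(1_000)]
raw_bytes, gzip_bytes = payload_sizes(rows)
print(f"raw: {raw_bytes} bytes, gzip: {gzip_bytes} bytes")
```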

Optimize database performance

Optimal database performance can keep your RDS instances (and bill) small. You can achieve this by optimizing your indexes and maintaining good database hygiene to reduce I/O. Read replicas can also help very heavy workloads perform better by serving reads away from the primary instance, providing improved scalability and durability.

Should you host yourself on EC2?

Some teams might be inclined to host their own databases on EC2 because it gives them complete control and flexibility over their system, including the OS and database. You can install any database engine and version of your choice and have control over the updates, patches, and maintenance windows.

You can also choose whether to run one or multiple database instances on the same EC2 instance and which ports they use. However, you’ll also have to take on the management work RDS handles for you, increasing your day-to-day operational overhead.

Controlling Costs for Large Workloads (Like Time-Series Projects)

If you use Amazon RDS to manage time-series data, Timescale provides a better alternative with the best price performance.

We engineered PostgreSQL to make time-series data calculations simpler and more cost-effective. Want to learn more? Reach out and ask about our pricing estimator.

You can host all types of PostgreSQL workloads in Timescale. But especially if you have time-series data, Timescale will suit you better than RDS. Plus, by choosing Timescale, you’ll enjoy the following benefits:

- Timescale is PostgreSQL++ (simpler, faster, and more cost-effective). If you work with time-series data, you know how quickly your data will grow. Avoid hitting that scalability wall, and don’t let the cost of top performance scare you every time you check your monthly bill. With Timescale’s new usage-based database storage model, you only pay for what you store.

- Enhanced performance with fewer compute and storage resources, thanks to specialized optimizations tailored for time series and analytics workloads. For a 1 TB dataset with almost one billion rows, Timescale outperforms Amazon RDS for PostgreSQL, with up to 44 percent higher ingest rates and queries running up to 350x faster. In practice, you’ll need less compute power in Timescale than in RDS and can scale sustainably without hindering performance.

- Substantial storage cost savings vs. RDS: our advanced compression algorithms enable a remarkable 90 percent reduction in disk storage. You can tier your older data to object storage built on S3 for an extra savings boost while remaining fully queryable.

- Expert technical support is included in our pricing, ensuring you receive the assistance you need whenever you need it. Learn how we're revolutionizing hosted database support at no extra cost.

Sign up for a free Timescale trial today and experience our PostgreSQL platform's simplicity, efficiency, and outstanding performance—without breaking the bank.

This blog post was originally published in April 2023 and updated in February 2024.