Hi, dear Timescale team!

I copied a table from prod, switched on compression, and compressed all chunks. Then I added new records to the last compressed chunk and tried to recompress it, but it failed with a fatal error saying that the database system is in recovery mode.

Then I tried to reproduce this issue on a test table, but I could not get it to fail with the error.

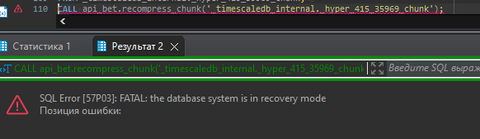

Screenshot of fatal error:

Server logs:

2023-09-27 10:02:01 Session_ID=6513d369.28b54 postgres@postgres from 127.0.0.1 FATAL: 57P03: the database system is not yet accepting connections

2023-09-27 10:02:01 Session_ID=6513d369.28b6f postgres@postgres from 127.0.0.1 FATAL: 57P03: the database system is not yet accepting connections

2023-09-27 10:02:01 Session_ID=6513d369.28b83 postgres@postgres from 127.0.0.1 FATAL: 57P03: the database system is not yet accepting connections

2023-09-27 10:54:07 Session_ID=650f2f5e.3458da @ from LOG: 00000: server process (PID 459244) was terminated by signal 11: Segmentation fault

2023-09-27 10:54:07 Session_ID=650f2f5e.3458da @ from DETAIL: Failed process was running: CALL api_bet.recompress_chunk('_timescaledb_internal._hyper_415_35969_chunk')

2023-09-27 10:54:07 Session_ID=650f2f5e.3458da @ from LOCATION: LogChildExit, postmaster.c:3766

2023-09-27 10:54:07 Session_ID=650f2f5e.3458da @ from LOG: 00000: terminating any other active server processes

2023-09-27 10:54:07 Session_ID=650f2f5e.3458da @ from LOCATION: HandleChildCrash, postmaster.c:3505

2023-09-27 10:54:07 Session_ID=6513df9f.80f69 [unknown]@[unknown] from 256.256.256.256 LOG: 00000: connection received: host=256.256.256.256 port=99999

2023-09-27 10:54:07 Session_ID=6513df9f.80f69 [unknown]@[unknown] from 256.256.256.256 LOCATION: BackendInitialize, postmaster.c:4403

2023-09-27 10:54:07 Session_ID=6513df9f.80f69 user@db from 256.256.256.256 FATAL: 57P03: the database system is in recovery mode

2023-09-27 10:54:07 Session_ID=6513df9f.80f69 user@db from 256.256.256.256 LOCATION: ProcessStartupPacket, postmaster.c:2412

2023-09-27 10:54:07 Session_ID=650f2f5e.3458da @ from LOG: 00000: all server processes terminated; reinitializing

However, if I decompress the chunk and then compress it again, it works perfectly:

SELECT api_bet.decompress_chunk('_timescaledb_internal._hyper_415_35969_chunk');

SELECT api_bet.compress_chunk('_timescaledb_internal._hyper_415_35969_chunk');

Reproduction code (note that it does not fail):

DROP TABLE IF EXISTS test.hypertable;

CREATE TABLE test.hypertable(id int, created_at timestamp, val smallint);

SELECT * FROM api_bet.create_hypertable('test.hypertable', 'created_at', chunk_time_interval => INTERVAL '1 month');

ALTER TABLE test.hypertable

SET (

timescaledb.compress,

timescaledb.compress_orderby = 'id ASC, created_at DESC',

timescaledb.compress_segmentby = 'val'

);

INSERT INTO test.hypertable

SELECT gs.id, TIMESTAMP '2023-01-01' + make_interval(secs => gs.id), (random() * 6 + 1)::int

FROM pg_catalog.generate_series(1, 1000000, 1) AS gs(id)

;

SELECT chunk_schema, chunk_name,

pg_size_pretty(total_bytes) as "Total",

pg_size_pretty(table_bytes) as "Table",

pg_size_pretty(index_bytes) as "Index",

pg_size_pretty(toast_bytes) as "Toast"

FROM api_bet.chunks_detailed_size('test.hypertable')

ORDER BY split_part(chunk_name, '_', 4)::int;

SELECT api_bet.compress_chunk(chunk) FROM api_bet.show_chunks('test.hypertable') AS chunk;

INSERT INTO test.hypertable

SELECT gs.id, TIMESTAMP '2023-01-12 13:46:40.000' + make_interval(secs => gs.id), (random() * 6 + 1)::int

FROM pg_catalog.generate_series(1, 1000000, 1) AS gs(id)

;

SELECT * FROM api_bet.chunk_compression_stats('test.hypertable')

--WHERE compression_status = 'Compressed'

;

DO $DO$

DECLARE

v_chunk_name regclass;

BEGIN

FOR v_chunk_name IN SELECT (chunk_schema || '.' || chunk_name)::regclass FROM api_bet.chunk_compression_stats('test.hypertable') WHERE compression_status = 'Compressed'

LOOP

CALL api_bet.recompress_chunk(v_chunk_name);

END LOOP;

END; $DO$;