Hi,

while trying to do some chunk size tests to figure out the best interval for our hypertable I stumbled upon something I couldn’t explain and hope to get some insights from experts on this topic.

Looking at the explain of this query it seems that the newer the chunk the slower the reading from it: BNbpL : Latest chunks are very slow | explain.depesz.com

Which seems counter-intuitive because if anything it should be the other way around, right? Statistics are more accurate (as you can see in the explain) and it’s maybe still in the cache. But even after several days of heavy usage of other tables the explain still looks the same, so it’s not a randomly slow or still cached file.

Notice that I did all these test with compression policy still disabled because otherwise it will just be fast and underlying problems with the table design may go unnoticed. And yes, these 7 day chunks turn out to be not the best interval for the table but I could see a similar trend with smaller chunks as well.

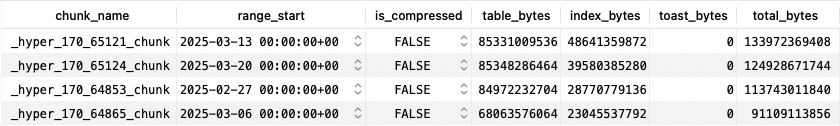

So this is not so much about the whole query being slow but more about an explanation of why some chunks are so much slower. And also why so much more data is read from them (MB vs. KB) while not only the amount of rows is roughly the same but the size on disk as well:

Data in the table was copied over from existing table via:

INSERT INTO test_table_7_days

SELECT *

FROM measurements

WHERE measured_at BETWEEN ... AND ...;

Am I missing something and this is explainable behavior? Would love to get some ideas on this.