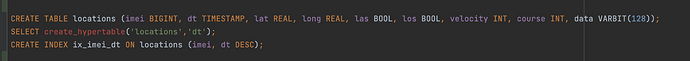

We are developing gps monitoring system, and using timescale to store time-series data from our devices

We have “locations” table, that stores location data from device. IMEI is device ID. We have created imei-dt index

We are selecting latest locations with array of imei:

SELECT distinct on (imei) * FROM locations WHERE imei IN (…) order by imei, dt desc

Everything was ok for a while, but when the data have reached 5M records, server started to crash. We are getting 40-100 load average on server.

pg_stat shows a lot of requests with ‘idle’ status

SELECT distinct on (imei) * FROM locations WHERE imei IN (867232054978003, 867232054980835, 867232054976544, 867232054978474, 867232054980538, 867232054980769, 867232054978268, 867232054980157, 867232054978664, 867232054978102, 867232054980173, 867232054978235, 867232054981015, 867232054981411, 867232054977989, 867232054978367, 867232054977864, 867232054980876, 867232054981544, 867232054981296, 867232054981213) order by imei, dt desc;

everything was ok with another array of imei, but with this one, Postgres doesn’t respond.

We have solved this problem with recreating index:

DROP INDEX ix_imei_dt

CREATE INDEX ix_imei_dt ON locations (imei, dt DESC);

But the problem returns after a few days.

So now we manually reindex the table every ~3days, because if we don’t, the load average raises at one time, and the server crashes

Here is our EXPLAIN:

Unique (cost=9.22…2863.56 rows=331 width=51)

→ Merge Append (cost=9.22…2844.52 rows=7613 width=51)

Sort Key: _hyper_1_4602_chunk.imei, _hyper_1_4602_chunk.dt DESC

→ Custom Scan (SkipScan) on _hyper_1_4602_chunk (cost=0.42…0.42 rows=331 width=41)

→ Index Scan using _hyper_1_4602_chunk_ix_imei_dt on _hyper_1_4602_chunk (cost=0.42…28.70 rows=1 width=41)

Index Cond: (imei = ANY (‘{867232054978003,867232054980835,867232054976544,867232054978474,867232054980538,867232054980769,867232054978268,867232054980157,867232054978664,867232054978102,867232054980173,867232054978235,867232054981015,867232054981411,867232054977989,867232054978367,867232054977864,867232054980876}’::bigint[]))

→ Custom Scan (SkipScan) on _hyper_1_4603_chunk (cost=0.42…0.42 rows=331 width=41)

→ Index Scan using _hyper_1_4603_chunk_ix_imei_dt on _hyper_1_4603_chunk (cost=0.42…28.42 rows=1 width=41)

Index Cond: (imei = ANY (‘{867232054978003,867232054980835,867232054976544,867232054978474,867232054980538,867232054980769,867232054978268,867232054980157,867232054978664,867232054978102,867232054980173,867232054978235,867232054981015,867232054981411,867232054977989,867232054978367,867232054977864,867232054980876}’::bigint[]))

→ Custom Scan (SkipScan) on _hyper_1_4606_chunk (cost=0.29…0.29 rows=331 width=41)

→ Index Scan using _hyper_1_4606_chunk_ix_imei_dt on _hyper_1_4606_chunk (cost=0.29…25.84 rows=1 width=41)

Index Cond: (imei = ANY (‘{867232054978003,867232054980835,867232054976544,867232054978474,867232054980538,867232054980769,867232054978268,867232054980157,867232054978664,867232054978102,867232054980173,867232054978235,867232054981015,867232054981411,867232054977989,867232054978367,867232054977864,867232054980876}’::bigint[]))

→ Custom Scan (SkipScan) on _hyper_1_4607_chunk (cost=0.43…0.43 rows=331 width=42)

→ Index Scan using _hyper_1_4607_chunk_ix_imei_dt on _hyper_1_4607_chunk (cost=0.43…28.74 rows=1 width=42)

Index Cond: (imei = ANY (‘{867232054978003,867232054980835,867232054976544,867232054978474,867232054980538,867232054980769,867232054978268,867232054980157,867232054978664,867232054978102,867232054980173,867232054978235,867232054981015,867232054981411,867232054977989,867232054978367,867232054977864,867232054980876}’::bigint[]))

→ Custom Scan (SkipScan) on _hyper_1_4608_chunk (cost=0.43…433.66 rows=331 width=42)

→ Index Scan using _hyper_1_4608_chunk_ix_imei_dt on _hyper_1_4608_chunk (cost=0.43…1681.96 rows=1911 width=42)

Index Cond: (imei = ANY (‘{867232054978003,867232054980835,867232054976544,867232054978474,867232054980538,867232054980769,867232054978268,867232054980157,867232054978664,867232054978102,867232054980173,867232054978235,867232054981015,867232054981411,867232054977989,867232054978367,867232054977864,867232054980876}’::bigint[]))

→ Custom Scan (SkipScan) on _hyper_1_4609_chunk (cost=0.43…0.43 rows=331 width=42)

→ Index Scan using _hyper_1_4609_chunk_ix_imei_dt on _hyper_1_4609_chunk (cost=0.43…28.43 rows=1 width=42)

Index Cond: (imei = ANY (‘{867232054978003,867232054980835,867232054976544,867232054978474,867232054980538,867232054980769,867232054978268,867232054980157,867232054978664,867232054978102,867232054980173,867232054978235,867232054981015,867232054981411,867232054977989,867232054978367,867232054977864,867232054980876}’::bigint[]))

→ Custom Scan (SkipScan) on _hyper_1_4610_chunk (cost=0.29…0.29 rows=331 width=41)

→ Index Scan using _hyper_1_4610_chunk_ix_imei_dt on _hyper_1_4610_chunk (cost=0.29…23.36 rows=1 width=41)

postgres version is 14.5

timescale is 2.8.1

we are using timescale/timescaledb:latest-pg14 docker