Hi,

I am currently trying Timescale (latest version on Postgres 14, installed locally on my laptop with default settings) for one of my clients, and I have run into an issue with disk space.

I am inserting backup data row by row from a custom multithreaded C# application.

After a few days of intensive inserts, I am running out of disk space (500 GB), while the backup files (mainly CSV) are < 1 GB when zipped.

When I sum up all the chunk_compression_stats() information:

size = 2817 MB and size_compress = 218 MB.

While the compressed size looks good, the real database size is terrible.

Do you have any idea why this happens and how to investigate?

Hi @rems welcome to the community!

Do you have any log files that could take up that much space? Also, it seems like you have a hypertable with compression enabled. Could you share the compression policy (if any)?
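As a rough sketch of how these questions could be checked from psql: the queries below compare the database size with the per-hypertable sizes, list any compression policy jobs, and sum up the server log and WAL directories. This assumes TimescaleDB 2.x (for `hypertable_size()` and the `timescaledb_information` views) and a role that is a superuser or a member of `pg_monitor` for the `pg_ls_*` functions. It is not a definitive diagnosis, just a starting point.

```sql
-- Total size of the current database as PostgreSQL reports it.
SELECT pg_size_pretty(pg_database_size(current_database())) AS database_size;

-- Total size of each hypertable (table data, indexes, and TOAST across all chunks),
-- largest first.
SELECT hypertable_schema,
       hypertable_name,
       pg_size_pretty(
           hypertable_size(format('%I.%I', hypertable_schema, hypertable_name)::regclass)
       ) AS total_size
FROM timescaledb_information.hypertables
ORDER BY hypertable_size(format('%I.%I', hypertable_schema, hypertable_name)::regclass) DESC;

-- Compression policy jobs, if any are defined, with their configuration.
SELECT job_id, hypertable_name, schedule_interval, scheduled, config
FROM timescaledb_information.jobs
WHERE proc_name = 'policy_compression';

-- Space used by server log files.
-- Errors if the configured log directory does not exist.
SELECT pg_size_pretty(sum(size)) AS log_size FROM pg_ls_logdir();

-- Space used by write-ahead log (WAL) files.
SELECT pg_size_pretty(sum(size)) AS wal_size FROM pg_ls_waldir();
```

If the database size is far below the space actually consumed on disk, the difference is likely outside the tables themselves (for example logs or WAL), which the last two queries help to confirm.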

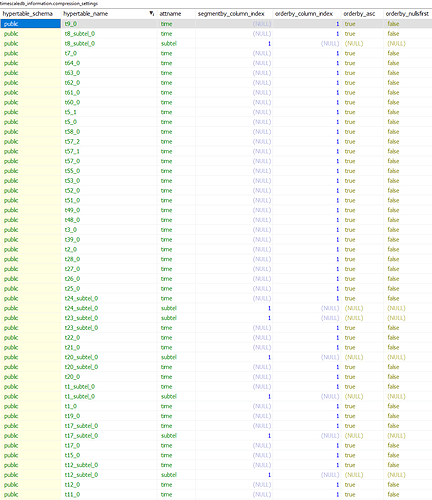

Compression policy:

I have changed some settings in my application, which seems to improve the disk space usage.

Before inserting old data (from old application backups), I was executing

SELECT alter_job(job_id, next_start => 'infinity') FROM timescaledb_information.job_stats WHERE hypertable_name LIKE 't%_%'

I changed it to

SELECT alter_job(job_id, scheduled => false) FROM timescaledb_information.job_stats WHERE hypertable_name LIKE 't%_%'

and it looks better.
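For anyone following along, here is a minimal sketch of how the job state could be verified after that change, and how the jobs might be switched back on once the backfill is finished. It assumes TimescaleDB 2.x, where `timescaledb_information.jobs` exposes the `scheduled` and `next_start` columns. The re-enable step is my assumption about the intended workflow, not something confirmed above.

```sql
-- Check which background jobs are currently paused for the matching hypertables.
SELECT job_id, proc_name, hypertable_name, scheduled, next_start
FROM timescaledb_information.jobs
WHERE hypertable_name LIKE 't%_%';

-- Assumed follow-up: re-enable the same jobs once the old data has been inserted.
SELECT alter_job(job_id, scheduled => true)
FROM timescaledb_information.jobs
WHERE hypertable_name LIKE 't%_%';
```

Note that `_` is itself a single-character wildcard in `LIKE`, so the pattern `'t%_%'` matches any name starting with `t` that has at least one more character.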

I checked the log files and I have 50 GB of logs… I will investigate this. Thanks for the help.