Interesting, you actually are approaching what was going to be my second potential solution… essentially storing an array and unnesting it at query time. I just didn’t have time yesterday to work that through some more (I was going to try and do it without first/last in some way).

Based on your two examples, I just wanted to add some clarifications (which you’ve probably already figured out!):

Sort order when using first/last for multiple columns

If you’re going to use first/last to return multiple values for the (hopefully) same point, just make sure you’re using the same sorting column each time. I noticed in your first response, the device_uuid call was sorted by the time column instead.

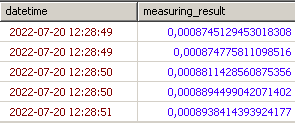

It’s also worth noting that if multiple measuring_result values qualify as “high” or “low”, you really need to sort by a secondary column. That’s not documented yet, but it is possible by providing a column set for the second parameter (eg. first(device_uuid,(measuring_result,time))). Since you want to get other columns that coincide with your high/low value, you need to make sure you’re breaking ties with a consistent ordering, otherwise, there’s no 100% guarantee that the datetime or device_uuid actually matches the value you received.

You’ll need to test this, and hopefully we’ll be able to provide a more tailored solution in the future, but you need to be aware of it. Therefore, in your second example, you’ll need to have the same multi-column ordering for each function call.

CAGG definition filtering

In your second response (using the arrays) I noticed that you filtered on device_uuid. Typically, you wouldn’t filter the CAGG to a specific device because it then limits that CAGG data to only ever be from that one device. If that’s your purpose, great! Just wanted to check.

Querying the data

Obviously, realize that if your goal is to select all of the “high” data together, unnesting will create multiple rows, one for “high” and one for “low”. If you have no identifier in the array, you might have to select things based on array position instead, eg SELECT bucket, symbol, measuring_result[1], device_uuid[1], datetime[1] from test to get all of the “low” data together.

Lots of food for thought there, but I’d be interested to know how you move forward and how it goes.

And again, your experience would make for great input to the toolkit team if you ever feel like creating an issue to describe the need!