Hi, first I must say I’m enjoying the recent blog posts on pgsql caching and memory management:

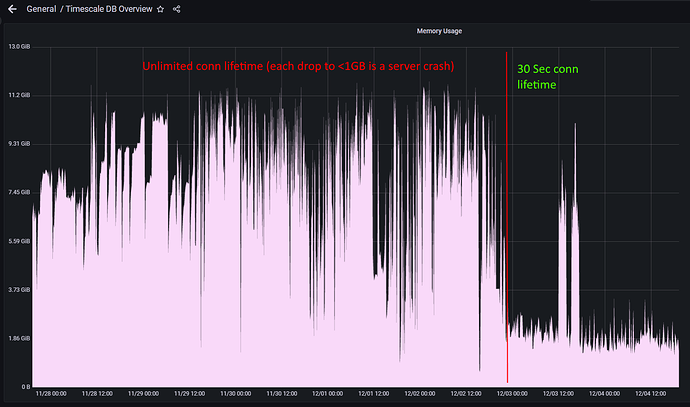

I’ve also been encountering these problems in our production environment. We’re using the timescale high availability image with Patroni in our pods on azure kubernetes. (single node per db instance) We’re still on pg 13 with timescale 2.6.1, but will upgrade to latest 2.8.1 soon. Any given pod can run reasonably well for hours or days but then a Postgres process gets terminated by the pod’s OOM killer. This in turn causes the db to go into a recovery mode. Which can take from a few seconds to hours. Our app can tolerate the former but the latter is bad news…

It seems like many articles talk about the importance of correctly configuring memory on the pgsql server. So far we have only ensured that our memory limits are appropriate for our chunk size and ensured timescale tune runs on pod start using the given memory and cpu limit values. (I’d made an assumption that the image was otherwise optimally configured for timescale)

I’ve been trying to test the benefits of changing vm overcommit and transparent huge pages as described in the blog. But I’m unable to apply the changes on my aks pod, I can’t even do it on my local docker container. Errors like:

/sys/kernel/mm/transparent_hugepage/enabled: Read-only file system

And similar for setting vm.overcommit_memory

So far I’m just beginning to suspect that these vm.* namespaced settings are restricted in containerized environments.

So how does one apply these settings in kubernetes or docker? In this presentation by Oleksii Kliukin: https://www.postgresql.eu/events/pgconfde2022/sessions/session/3692/slides/309/pgconf_de_2022_kliukin_talk.pdf

He shares that the timescale cloud folks are using these settings in their solution on kubernetes, so I believe it’s possible - I just can’t work out how  I’m also a bit interested in the possible use of ‘OOM guard’ although I’ll revisit this once I’ve got the basics under control.

I’m also a bit interested in the possible use of ‘OOM guard’ although I’ll revisit this once I’ve got the basics under control.

Anyway thanks in advance, I’m really looking forward to attending the pgconf in Berlin this week - I’ll be sure to come to the talks by the timescale team