Real-time Analytics in Postgres: Why It's Hard (and How to Solve It)

Start supercharging your PostgreSQL today.

Written by Carlota Soto, Mat Arye, and Doug Ortiz

Enabling users to access real-time data analytics is a key capability of many modern applications. Picture yourself using your favorite SaaS platform—there’s likely an intuitive dashboard presenting real-time data and historical insights. You can probably interact with the platform, creating customized reports, exploring detailed metrics, and visualizing trends spanning weeks or months.

You certainly wouldn’t want this platform to be slow as a user. This means that the database powering these products has to be fast at running queries over large volumes of data, including complex, analytical queries.

While PostgreSQL is the most loved database among developers today, it is not actually known for being fast at querying large volumes of data. But don’t worry: Postgres always has a tool in its toolbox. One of the best is materialized views.

What Are PostgreSQL Materialized Views?

Based on the materialization technique, PostgreSQL materialized views pre-compute commonly run queries and store the results as a table. Unlike standard PostgreSQL views, which run the underlying query every time the view is referenced, materialized views persist the result of the source query in the database. The great thing about this is that your database doesn’t have to execute the query every time you run it: the results are already accessible on disk—you’ll get the response to your query much faster.

This is an awesome way to optimize query responses for queries that are resource-intensive to compute. For example, queries that might involve the processing of large volumes of data, aggregations, or multiple joins.

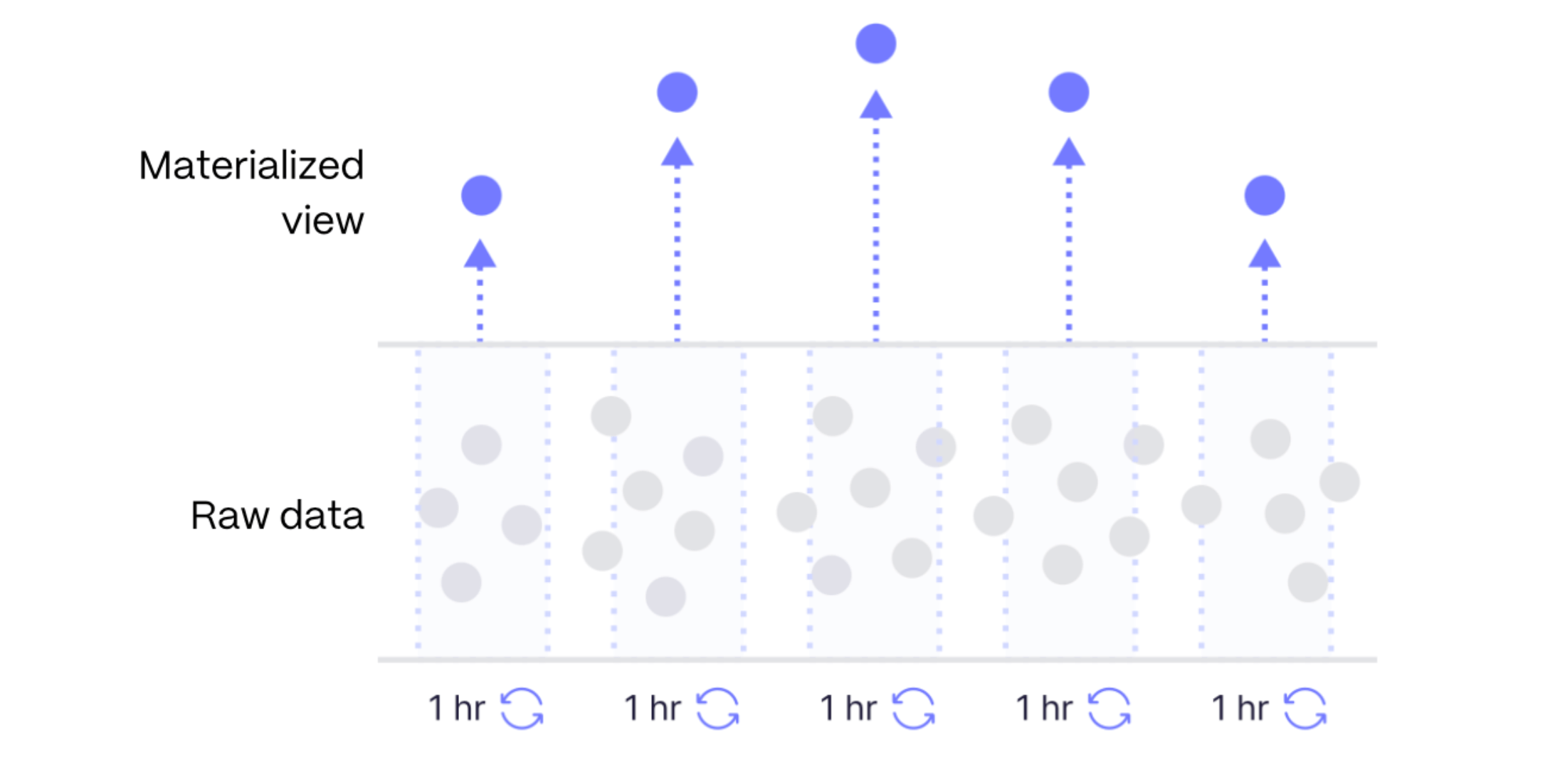

Materialized views effectively reduce the granularity of large datasets, keeping queries faster

Working with materialized views is super simple. To create a view, you would use CREATE MATERIALIZED VIEW statement and your query of choice. Once your materialized view is created, you can query it as a regular PostgreSQL table:

CREATE MATERIALIZED VIEW customer_orders AS

SELECT customer_id, COUNT(*) as total_orders

FROM orders

GROUP BY customer_id;-- Query the materialized view

SELECT * FROM customer_orders;This materialized view will quickly become stale until you refresh it: even if you’re adding new data to the base table (or updating or deleting data), the materialized view doesn’t include those changes automatically; it’s a snapshot at the time it was created. In order to update the materialized view, you need to run REFRESH MATERIALIZED VIEW.

REFRESH MATERIALIZED VIEW customer_orders;

This last point (how refreshes are handled) is the Achilles heel of materialized views, as we’ll discuss in the next section.

What About Real-Time Analytics? Limitations of PostgreSQL Materialized Views

As we were saying, PostgreSQL materialized views are a powerful tool for speeding up frequently run queries, especially if these queries go over large volumes of data. But materialized views come with one less-than-ideal aspect: to keep your materialized views up-to-date, they have to be refreshed. This single problem creates three important limitations:

Refreshes are inefficient and computationally expensive

When refreshing a materialized view, the query is recomputed over the entire dataset. Under the hood, when you run a refresh, the old materialized data is actually deleted and then substituted with new, re-materialized data. Implementing incremental refreshes (where only the changed data is updated) would make the aggregate process much more efficient, but it is difficult to implement correctly within a consistent relational database. Workarounds are sometimes possible with additional scripting, but they are far from straightforward, especially for complex queries or if late data is arriving.

Refreshes aren’t automatically run

As also mentioned earlier, materialized views won’t automatically incorporate the latest data. They have to be refreshed by running REFRESH MATERIALIZED VIEW. Running manual refreshes in a production setting is not feasible: a much more realistic setup would be to automate the refresh.

Unfortunately, materialized views don’t have built-in automatic refresh functionality, so creating an automatic refresh schedule for materialized views in PostgreSQL requires a scheduler of some sort. This can be handled in-database with an extension or out-of-database with a scheduler like cron. However, it’s managed because refreshes are expensive and take a long time. It is very easy to end up in a situation where you can’t refresh the view fast enough.

Materialized views don’t show up-to-date results

A consequence of the static nature of materialized views is that when queried, they’ll miss the data added or changed since the last refresh (even if that refresh happens on a schedule). If your scheduling window is set to an hour, then your aggregate will be up to an hour plus the actual time to do the update out of date. But many applications today imply a constant stream of data being ingested, and often, these applications have to offer up-to-date results to their users to ensure they’re retrieving accurate information when querying the view.

It is a pity that materialized views are constrained by these limitations. If you’re building a SaaS platform from a live dataset, with new data frequently coming in, should materialized views be completely discarded?

The answer is no. In Timescale, we built a solution that effectively enhances materialized views to make them more suitable for modern applications: continuous aggregates.

Meet Continuous Aggregates: Materialized Views With Automatic Refreshes for Real-Time Analytics

Imagine a world where materialized views are not just static snapshots but dynamically and efficiently updated. You would access the query performance improvement you seek without worrying about anything else. Well, it seems like we described Timescale’s continuous aggregates.

Continuous aggregates (available to all PostgreSQL databases via the TimescaleDB extension and in AWS via the Timescale platform) are materialized views enhanced with efficient, automated refresh capabilities and a real-time element. They look and feel almost exactly like materialized views but allow the following:

Automatic refreshes via a refresh policy

A more efficient refresh process: when a refresh runs, it will only touch the data that changed since the last refresh

Up-to-date results, expanding the use cases where materialized views can be leveraged (like real-time analytics, live dashboards, reporting, and others)

Making refreshes automatic and resource-efficient

Creating a continuous aggregate is very similar to creating a materialized view (and it can also be queried as a regular PostgreSQL table):

CREATE MATERIALIZED VIEW hourly_sales

WITH (timescaledb.continuous) AS

SELECT time_bucket(INTERVAL '1 hour', sale_time) as hour,

product_id,

SUM(units_sold) as total_units_sold

FROM sales_data

GROUP BY hour, product_id;But differently than materialized views, creating a refresh policy is straightforward. You can easily define the refresh interval within the database, ensuring that your continuous aggregate is automatically and periodically updated.

The example below sets up a refresh policy to update the continuous aggregate every 30 minutes. The end_offset parameter defines the time range of data to be refreshed and the schedule_interval sets how often the continuous aggregate will be refreshed:

-- Setting up a refresh policy

SELECT add_continuous_aggregate_policy('hourly_sales',

end_offset => INTERVAL '1 minute',

schedule_interval => INTERVAL '30 minutes');When this refresh policy kicks in, the process will be much more efficient than if we were using a plain materialized view. Unlike running REFRESH MATERIALIZED VIEW, when a continuous aggregate is refreshed, Timescale doesn’t drop all the old data and recompute the aggregate against it: the engine just runs the query against the most recent refresh period (e.g., 30 minutes) and adds it to the materialization.

Similarly, UPDATEs and DELETEs performed during this last period are identified, recomputing the chunk (partition) that involves them. (Continuous aggregates built on Timescale’s hypertables, which are automatically partitioned PostgreSQL tables. This is a massive advantage, allowing the engine to recompute only specific partitions vs. the entire table when the data has changed.)

Showing up-to-date results for real-time analytics

But, how do continuous aggregates solve the problem of viewing up-to-date results? What happens if new data has been added after the last refresh, and I query the continuous aggregate?

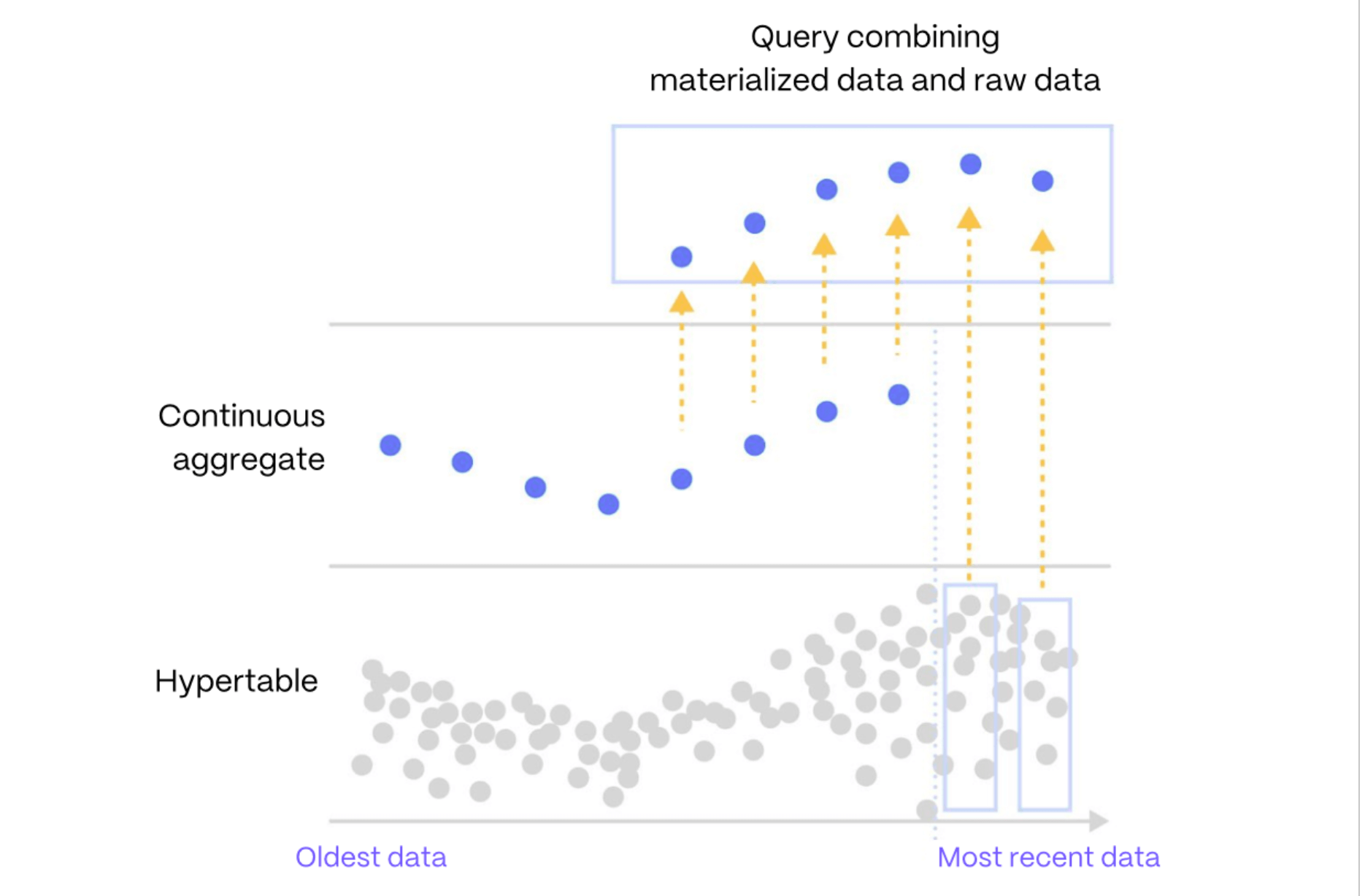

To allow this functionality, we added real-time aggregation functionality to continuous aggregates. When real-time aggregation is enabled, and you query your continuous aggregate, the result you’ll see will combine two parts:

The materialized data in the underlying materialized view, which has been updated in the last refresh.

The most recent, not-yet-materialized raw data, which still lives exclusively in your base table (or hypertable, to be exact).

This functionality transforms materialized views from static snapshots into dynamic entities, ensuring that the stored data is not just a historical reflection but an up-to-date representation of the underlying datasets.

When real-time aggregation is enabled, continuous aggregates show you up-to-date results by combining your pre-calculated data with your newer, not yet materialized "raw" data.

Using Continuous Aggregates: Example

Even if this all sounds good, it'll (hopefully) come together a lot better with an example.

Imagine a platform used by transportation agencies and ride-sharing companies. This platform contains a dashboard in which companies can see an overview of the status of their fleet, including a table with the latest status of key metrics and two visualizations showing how the metrics are doing that particular day and within the context of the week.

To power this application, we would first have a hypertable in which the data about the rides is constantly inserted. The hypertable could look something like this:

CREATE TABLE rides (

ride_id SERIAL PRIMARY KEY,

vehicle_id INT,

start_time TIMESTAMPTZ NOT NULL,

end_time TIMESTAMPTZ NOT NULL,

distance FLOAT NOT NULL,

price_paid FLOAT NOT NULL

);

SELECT create_hypertable('rides', 'start_time');

Hypertables are very fast and very scalable—this table will remain performant even when it has billions of rows.

To power the table providing a live overview, we would use a continuous aggregate to bucket the data by 30 minutes. This would keep the process fast and responsive:

-- Create continuous aggregate for live overview

CREATE MATERIALIZED VIEW live_dashboard

WITH (timescaledb.continuous, timescaledb.materialized_only=false)) AS

SELECT

vehicle_id,

time_bucket(INTERVAL '30 minute', start_time) as minute,

COUNT(ride_id) as number_of_rides,

AVG(price_paid) as average_price

FROM rides

GROUP BY vehicle_id, minute;-- Set up a refresh policy

SELECT add_continuous_aggregate_policy('live_dashboard',

end_offset => INTERVAL '10 minutes',

schedule_interval => INTERVAL ‘15 minute');

In the previous code, the end_offsetparameter ensures that the aggregate does not immediately attempt to refresh the very latest data, allowing some buffer time to accommodate any lags in data arrival. Setting end_offset to 10 minutes means the aggregate will refresh data that is at least 10 minutes old, ensuring it doesn’t miss updates due to minor delays in data inflow. In a real-world use case, you would adjust this value based on the average delay you observe in your data pipeline.

To power the visualization offering the daily view, we would create a second continuous aggregate. In this chart, the data is being displayed by hour, so we don’t need a per-minute granularity as the previous one:

-- Create continuous aggregate for daily overview

CREATE MATERIALIZED VIEW hourly_metrics

WITH (timescaledb.continuous, timescaledb.materialized_only=false) AS

SELECT

vehicle_id,

time_bucket(INTERVAL '1 hour', start_time) as hour,

COUNT(ride_id) as number_of_rides,

SUM(price_paid) as total_revenue

FROM rides

WHERE start_time > NOW() - INTERVAL '1 day'

GROUP BY vehicle_id, hour;-- Define refresh policy

SELECT add_continuous_aggregate_policy('hourly_metrics',

end_offset => INTERVAL '10 minutes',

schedule_interval => INTERVAL `1 hour`);

Finally, to power the chart offering the weekly view, we would create one more continuous aggregate, this time aggregating the data by day:

-- Create continuous aggregate to power chart with weekly overview

CREATE MATERIALIZED VIEW daily_metrics

WITH (timescaledb.continuous, timescaledb.materialized_only=false) AS

SELECT

vehicle_id,

time_bucket(INTERVAL '1 day', start_time) as day,

COUNT(ride_id) as number_of_rides,

SUM(price_paid) as total_revenue

FROM rides

WHERE start_time > NOW() - INTERVAL '1 week'

GROUP BY vehicle_id, day;-- Define refresh policy

SELECT add_continuous_aggregate_policy('daily_metrics',

end_offset => INTERVAL '10 minutes',

schedule_interval => INTERVAL '1 day);P.S. To make the experience of defining continuous aggregates even more efficient, Timescale introduced hierarchical continuous aggregates in TimescaleDB 2.9. Once you get familiar with continuous aggregates, you can start creating them on top of other continuous aggregates—e.g., in the previous example, you could also define the hourly aggregates on top of the by-minute aggregate.

Why Attempting to Implement a Continuous Aggregate With Triggers Is Not A Good Idea

While do-it-yourself solutions are very appealing to a majority of people, when it comes to implementing a continuous aggregate by utilizing PostgreSQL triggers, a variety of unforeseen and unaccounted challenges will quickly come to the surface.

A few that quickly come to mind are performance overhead, concurrency issues, maintenance complexity, trigger cascading, testing and debugging, resource consumption, and query performance. The previous list is not exhaustive by any means but should give you an idea of what lies ahead should you decide to implement a real-time analytics solution with PostgreSQL triggers.

Let’s delve into each one of the previously mentioned challenges.

Performance overhead: Triggers, by default, incur additional processing time for every data modification operation; multiple triggers will increase processing time and resource consumption.

Concurrency issues: Triggers operate within the same transaction context as the DML (Data Manipulation Language) operations they have been associated with. This alone can lead to deadlocks and contention, that is, without taking into account multiple transactions attempting to perform updates on the same data concurrently.

Data consistency: Maintaining data consistency between the source and aggregate tables is key. Should triggers fail or encounter errors during the update or aggregation process, this will result in inconsistencies, which will be difficult to track and debug.

Maintenance complexity: Trigger management increases the complexity of the database schema and maintenance tasks, leading to ensuring that the triggers are created, maintained, and updated properly, efficiently, and reliably.

Trigger cascading: This action usually occurs when triggers “trigger” other triggers, resulting in performance and concurrency issues.

Testing and debugging: Thorough testing and debugging will be not only necessary but mandatory. This process will not be able to cover all scenarios, which means that unexpected and edge cases will arise.

Resource consumption: Database administrators will require heavy involvement since the monitoring of resources, CPU, memory, and disk space will be necessary.

Query performance: The design and indexing of aggregate tables will call for careful consideration with the goal of ensuring efficient query executions.

In the end, a somewhat similar comparison question could be: Should I buy a car, or should I build one?

Does that sound like a good idea?

Conclusion

Even if PostgreSQL was not originally built for applications that need to process large live datasets, guess what—these types of workloads are now everywhere. However, PostgreSQL comes with features that help with this task. Materialized views are among the most powerful, as they allow pre-computing query results and storing them on disk for fast retrieval.

However, materialized views have three important limitations. First, triggering refreshes is very computationally inefficient. Second, even setting up these automatic refreshes is not a seamless process. Third, materialized views don’t show up-to-date results, as they exclude the data that has been added or modified since the last refresh.

These limitations make materialized views an unpractical solution for many modern applications. To solve this, we built continuous aggregates. These are PostgreSQL materialized views in which you can easily define a refresh policy, so the refreshes happen automatically. Those refreshes are also incremental and, therefore, much more efficient. Lastly, continuous aggregates allow you to combine the data that has been materialized with the raw data added and modified since the last refresh, ensuring you will only get up-to-date results.

"A couple of times I’ve had to explain to my colleagues, when they are asking about the database capabilities to handle live events, “Don’t worry about it, Timescale just does it.” It’s good to know that when scaling with Continuous Aggregates, it will only take an hour to set up and we don’t have to worry about it again."

Björn Olafur Johannsson, Software Engineer, AVO

If you’re running PostgreSQL on your hardware, you can access continuous aggregates by installing the TimescaleDB extension. If you’re in AWS, check out the Timescale platform. The first 30 days are free.